Issue

-

I would like to use Kubernetes Pod Security Policies with CloudBees Core on Modern Cloud Platforms.

Resolution

Pod Security Policies (PSPs) are an optional Kubernetes feature. They are still in beta, but they are very stable and available on all major cloud providers. They are not enabled by default on most Kubernetes distributions, including GCP GKE and Azure AKS. PSPs can be created and granted through a ClusterRole or a Role resource definition without enabling the PodSecurityPolicy admission controller. This is important because once you enable the PodSecurityPolicy admission controller, any pod that does not have a PSP applied to it will be rejected and will not get scheduled.

It is recommended that you define at least two Pod Security Policies for your Core Modern Kubernetes cluster:

-

A restrictive Pod Security Policy used for all CloudBees components, for additional Kubernetes services being leveraged with Core Modern, and for the majority of dynamic ephemeral Kubernetes based agents used by your Core Modern cluster

-

A second Pod Security Policy that is almost identical, except that RunAsUser is set to RunAsAny to allow running as root. This is specifically to run Kaniko containers (please refer to Using Kaniko with CloudBees Core), but you may have other use cases that require containers to run as root

Restrictive Pod Security Policy

-

Create the following restrictive Pod Security Policy (PSP) (or one like it):

    apiVersion: policy/v1beta1
    kind: PodSecurityPolicy
    metadata:
      name: cb-restricted
      annotations:
        seccomp.security.alpha.kubernetes.io/allowedProfileNames: 'docker/default'
        apparmor.security.beta.kubernetes.io/allowedProfileNames: 'runtime/default'
        seccomp.security.alpha.kubernetes.io/defaultProfileName: 'docker/default'
        apparmor.security.beta.kubernetes.io/defaultProfileName: 'runtime/default'
    spec:
      # prevents container from manipulating the network stack, accessing devices on the host and prevents ability to run DinD
      privileged: false
      fsGroup:
        rule: 'MustRunAs'
        ranges:
          # Forbid adding the root group.
          - min: 1
            max: 65535
      runAsUser:
        rule: 'MustRunAs'
        ranges:
          # Don't allow containers to run as ROOT
          - min: 1
            max: 65535
      seLinux:
        rule: RunAsAny
      supplementalGroups:
        rule: RunAsAny
      # Allow core volume types. But more specifically, don't allow mounting host volumes to include the Docker socket - '/var/run/docker.sock'
      volumes:
      - 'emptyDir'
      - 'secret'
      - 'downwardAPI'
      - 'configMap'
      # persistentVolumes are required for CJOC and Managed controller StatefulSets
      - 'persistentVolumeClaim'
      - 'projected'
      # NFS may be used for OC and Managed controller persistent volumes
      - 'nfs'
      hostPID: false
      hostIPC: false
      hostNetwork: false
      # Ensures that no child process of a container can gain more privileges than its parent
      allowPrivilegeEscalation: false

-

Create a ClusterRole that uses the cb-restricted PSP (this can be applied to as many ServiceAccounts as necessary):

    kind: ClusterRole
    apiVersion: rbac.authorization.k8s.io/v1
    metadata:
      name: psp-restricted-clusterrole
    rules:
    - apiGroups:
      - extensions
      resources:
      - podsecuritypolicies
      resourceNames:
      - cb-restricted
      verbs:
      - use

-

Bind the restricted ClusterRole to all the ServiceAccounts in the cloudbees-core Namespace (or whatever Namespace you deployed CloudBees Core to). The following RoleBinding will apply to both of the ServiceAccounts defined by CloudBees Core Modern: the cjoc ServiceAccount, for provisioning Managed/Team controller StatefulSets from CJOC, and the jenkins ServiceAccount, for scheduling dynamic ephemeral agent pods from Managed/Team controllers:

    apiVersion: rbac.authorization.k8s.io/v1
    kind: RoleBinding
    metadata:
      name: cb-core-psp-restricted
      namespace: cloudbees-core
    roleRef:
      apiGroup: rbac.authorization.k8s.io
      kind: ClusterRole
      name: psp-restricted-clusterrole
    subjects:
    # All service accounts; as a RoleBinding, this grant only applies within the cloudbees-core namespace
    - apiGroup: rbac.authorization.k8s.io
      kind: Group
      name: system:serviceaccounts
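To make the effect of cb-restricted concrete, here is a minimal sketch of a Pod that the policy should reject once the admission controller is enabled. It assumes that neither the Pod's ServiceAccount nor the user creating the Pod has access to a PSP that allows hostPath volumes. The Pod name, container name and image are hypothetical; the Pod asks to run as root and to mount the Docker socket from the host, both of which the policy above disallows.

```yaml
# Hypothetical Pod, for illustration only: expected to be rejected under cb-restricted
apiVersion: v1
kind: Pod
metadata:
  name: psp-violation-example
  namespace: cloudbees-core
spec:
  serviceAccountName: jenkins
  containers:
  - name: shell
    image: busybox          # assumed image; any image would do
    command: ["cat"]
    tty: true
    securityContext:
      runAsUser: 0          # violates runAsUser MustRunAs 1-65535
    volumeMounts:
    - name: docker-sock
      mountPath: /var/run/docker.sock
  volumes:
  - name: docker-sock
    hostPath:               # hostPath is not in the allowed volumes list
      path: /var/run/docker.sock
```

Applying a manifest like this in a test cluster is one way to confirm that the policy and its binding behave as intended before relying on them.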

Kaniko Pod Security Policy (RunAsRoot)

-

Create the following PSP for running Kaniko jobs and other Pods that must run as root:

    apiVersion: policy/v1beta1
    kind: PodSecurityPolicy
    metadata:
      name: kaniko-psp
      annotations:
        seccomp.security.alpha.kubernetes.io/allowedProfileNames: 'docker/default'
        apparmor.security.beta.kubernetes.io/allowedProfileNames: 'runtime/default'
        seccomp.security.alpha.kubernetes.io/defaultProfileName: 'docker/default'
        apparmor.security.beta.kubernetes.io/defaultProfileName: 'runtime/default'
    spec:
      # prevents container from manipulating the network stack, accessing devices on the host and prevents ability to run DinD
      privileged: false
      fsGroup:
        rule: 'RunAsAny'
      runAsUser:
        rule: 'RunAsAny'
      seLinux:
        rule: RunAsAny
      supplementalGroups:
        rule: RunAsAny
      # Allow core volume types. But more specifically, don't allow mounting host volumes to include the Docker socket - '/var/run/docker.sock'
      volumes:
      - 'emptyDir'
      - 'secret'
      - 'downwardAPI'
      - 'configMap'
      # persistentVolumes are required for CJOC and Managed controller StatefulSets
      - 'persistentVolumeClaim'
      - 'projected'
      hostPID: false
      hostIPC: false
      hostNetwork: false
      # Ensures that no child process of a container can gain more privileges than its parent
      allowPrivilegeEscalation: false

-

Create a ServiceAccount, Role and RoleBindings for use with Kaniko Pods:

    ---
    apiVersion: v1
    kind: ServiceAccount
    metadata:
      name: kaniko
    ---
    kind: Role
    apiVersion: rbac.authorization.k8s.io/v1beta1
    metadata:
      name: kaniko
    rules:
    - apiGroups: ['extensions']
      resources: ['podsecuritypolicies']
      verbs: ['use']
      resourceNames:
      - kaniko-psp
    ---
    apiVersion: rbac.authorization.k8s.io/v1beta1
    kind: RoleBinding
    metadata:
      name: kaniko
    roleRef:
      apiGroup: rbac.authorization.k8s.io
      kind: Role
      name: kaniko
    subjects:
    - kind: ServiceAccount
      name: kaniko
    ---
    apiVersion: rbac.authorization.k8s.io/v1
    kind: RoleBinding
    metadata:
      name: cjoc-kaniko-role-binding
    roleRef:
      apiGroup: rbac.authorization.k8s.io
      kind: Role
      name: cjoc-agents
    subjects:
    - kind: ServiceAccount
      name: kaniko

-

Update all Kaniko related Pod Templates and/or Pod Template yaml to use the kaniko ServiceAccount instead of the default jenkins ServiceAccount. Here is an example yaml based Jenkins Kubernetes Pod Template configuration:

    kind: Pod
    metadata:
      name: kaniko
    spec:
      serviceAccountName: kaniko
      containers:
      - name: kaniko
        image: gcr.io/kaniko-project/executor:debug-v0.10.0
        imagePullPolicy: Always
        command:
        - /busybox/cat
        tty: true
        volumeMounts:
          - name: kaniko-secret
            mountPath: /secret
        env:
          - name: GOOGLE_APPLICATION_CREDENTIALS
            value: /secret/kaniko-secret.json
      volumes:
        - name: kaniko-secret
          secret:
            secretName: kaniko-secret
      securityContext:
        runAsUser: 0

Bind Restrictive PSP Role for Ingress Nginx

CloudBees recommends the ingress-nginx Ingress controller to manage external access to Core Modern. The NGINX Ingress Controller is a top-level Kubernetes project and provides an example for using Pod Security Policies with the ingress-nginx Deployment. Basically, all you have to do is run the following command before installing the NGINX Ingress controller:

kubectl apply -f https://raw.githubusercontent.com/kubernetes/ingress-nginx/master/docs/examples/psp/psp.yaml

However, the PSP provided by the ingress-nginx project may not be restrictive enough for some organizations. Specifically, the provided ingress-nginx PSP adds NET_BIND_SERVICE to allowedCapabilities, which in turn requires that allowPrivilegeEscalation be set to true. This is necessary because the nginx-ingress-controller container defaults to binding to ports 80 and 443. If you are only using ingress-nginx with CloudBees Core Modern, you can use the cb-restricted PSP from above, provided that you modify the Deployment of the nginx-ingress-controller to bind to non-privileged ports for http and https and update the container args to specify those ports. An example of a modified nginx-ingress-controller Deployment that works with the cb-restricted PSP is provided below:

    apiVersion: apps/v1
    kind: Deployment
    metadata:
      name: nginx-ingress-controller
      namespace: ingress-nginx
      labels:
        app.kubernetes.io/name: ingress-nginx
        app.kubernetes.io/part-of: ingress-nginx
    spec:
      ...
        spec:
          # wait up to five minutes for the drain of connections
          terminationGracePeriodSeconds: 300
          serviceAccountName: nginx-ingress-serviceaccount
          nodeSelector:
            kubernetes.io/os: linux
          containers:
            - name: nginx-ingress-controller
              image: quay.io/kubernetes-ingress-controller/nginx-ingress-controller:0.26.1
              args:
                - /nginx-ingress-controller
                ...
                - --http-port=8080
                - --https-port=8443
              securityContext:
                # www-data -> 33
                runAsUser: 33
              ...
              ports:
                - name: http
                  containerPort: 8080
                - name: https
                  containerPort: 8443

Specifically, you are adding the --http-port=8080 and --https-port=8443 args for the nginx-ingress-controller container and updating the ports to 8080 for http and 8443 for https.

If you are using Helm to install ingress-nginx, you will need to use the helm template feature to generate the YAML, make the necessary changes to the nginx-ingress-controller Deployment, and then apply the result with kubectl apply -f.

These changes to the nginx-ingress-controller Deployment have only been tested with CloudBees Core Modern and may not work with other Kubernetes applications.
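For the cb-restricted PSP to apply to the controller Pod, the ServiceAccounts in the ingress-nginx Namespace also need permission to use it. A minimal sketch of such a binding follows, reusing the psp-restricted-clusterrole ClusterRole defined earlier. The RoleBinding name is hypothetical, and the sketch assumes ingress-nginx is installed in the ingress-nginx Namespace as in the Deployment above.

```yaml
apiVersion: rbac.authorization.k8s.io/v1
kind: RoleBinding
metadata:
  name: ingress-nginx-psp-restricted   # hypothetical name
  namespace: ingress-nginx
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: psp-restricted-clusterrole
subjects:
# All service accounts in the ingress-nginx namespace
- apiGroup: rbac.authorization.k8s.io
  kind: Group
  name: system:serviceaccounts:ingress-nginx
```

Binding to the namespace-scoped group keeps the grant limited to ServiceAccounts that actually live in ingress-nginx.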


Enable the Pod Security Policy Admission Controller

Once PSPs have been applied to all the ServiceAccounts in your Kubernetes cluster, you can enable the Pod Security Policy Admission Controller. How you do this depends on your Kubernetes provider.

External documentation on enabling and using Pod Security Policies:

-

AWS EKS (enabled by default for version 1.13 or later): https://docs.aws.amazon.com/eks/latest/userguide/pod-security-policy.html

-

GCP GKE (beta feature): https://cloud.google.com/kubernetes-engine/docs/deprecations/podsecuritypolicy

-

Azure AKS (preview feature): https://learn.microsoft.com/en-us/azure/aks/use-pod-security-policies

-

PKS: https://docs.pivotal.io/pks/1-5/pod-security-policy.html
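On managed services the admission controller is switched on through the provider's own tooling, as described in the links above. On a self-managed control plane it is typically enabled with the kube-apiserver --enable-admission-plugins flag. The fragment below is only a sketch, assuming a kubeadm-style static Pod manifest; the file path and the NodeRestriction plugin shown alongside are assumptions about your cluster, not something this article prescribes.

```yaml
# Fragment of /etc/kubernetes/manifests/kube-apiserver.yaml (kubeadm default path; assumed)
spec:
  containers:
  - name: kube-apiserver
    command:
    - kube-apiserver
    # Keep the plugins that are already listed and append PodSecurityPolicy
    - --enable-admission-plugins=NodeRestriction,PodSecurityPolicy
    # ... remaining flags unchanged
```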

Restrictive Pod Security Policies and Jenkins Kubernetes Pod Template Agents

The Jenkins Kubernetes plugin (for ephemeral K8s agents) defaults to using a K8s emptyDir volume type for the Jenkins agent workspace. This causes issues when using a restrictive PSP such as the cb-restricted PSP above. Kubernetes defaults to mounting emptyDir volumes as root:root with permissions set to 750, as detailed by this GitHub issue. In order to run containers in a Pod Template as a non-root user, you must specify a securityContext at the container or pod level. There are at least two ways to do this (in both cases at the pod level):

-

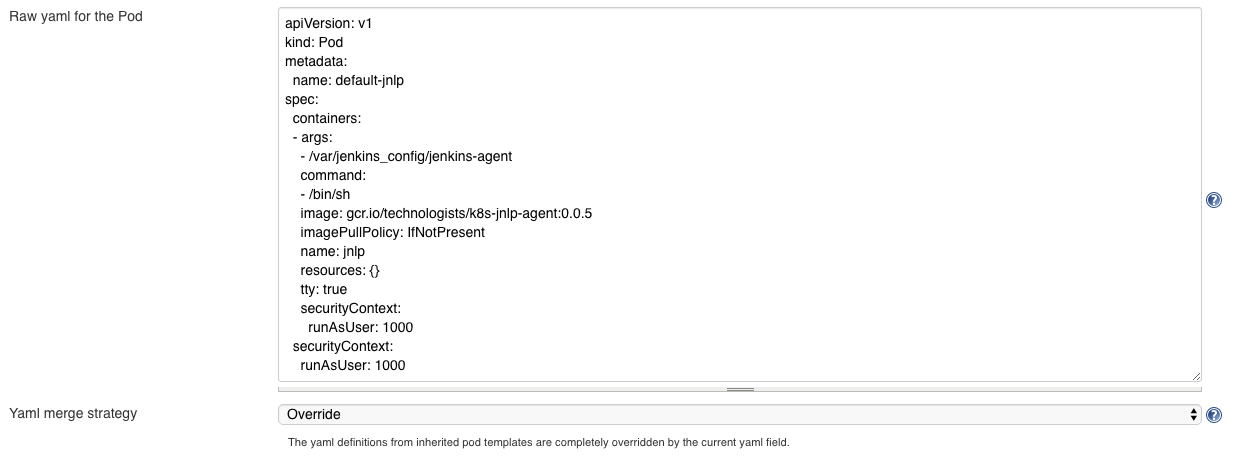

In the Kubernetes cloud configuration UI via the Raw yaml for Pod field:

-

In the raw yaml of a pod spec that you embed or load into your Jenkins job from a file:

    kind: Pod
    metadata:
      name: nodejs-app
    spec:
      containers:
      - name: nodejs
        image: node:10.10.0-alpine
        command:
        - cat
        tty: true
      - name: testcafe
        image: gcr.io/technologists/testcafe:0.0.2
        command:
        - cat
        tty: true
      securityContext:
        runAsUser: 1000
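For the first option, the content of the Raw yaml for Pod field can be as small as the pod-level securityContext itself, since the Kubernetes plugin merges this yaml with the rest of the Pod Template. The snippet below is a sketch of what that field might contain; the user ID 1000 is taken from the example above and is an assumption about the user your agent images expect.

```yaml
# Assumed content for the "Raw yaml for Pod" field
spec:
  securityContext:
    runAsUser: 1000   # any non-root UID allowed by cb-restricted (1-65535)
```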