Issue

Your Jenkins instance keeps shutting down, or it shut down once, and the root cause is not clear.

Your controller goes down with the following message:

```
INFO winstone.Logger#logInternal: JVM is terminating. Shutting down Jetty
```

Causes

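This message is logged when the JVM running Jenkins begins an orderly shutdown, and Jenkins then performs a graceful shutdown. This happens when the process receives a termination signal from the system, such as SIGTERM (pressing Ctrl+C in a terminal sends the similar SIGINT). It also happens when code running inside the JVM calls System.exit.

One possible cause is a Pipeline job that calls System.exit in its code. Depending on where that call runs, there are two scenarios.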

Scenario #1: Approved method in the Groovy sandbox

Prior to Script Security 1.58, a Pipeline build could call System.exit from the sandbox and, once a Jenkins administrator approved the method from Manage Jenkins -> In-process Script Approval, any Pipeline that calls it from then on exits the JVM that runs Jenkins itself.

Since Script Security 1.58 (18 Apr 2019), System.exit is on the denylist, so administrators can no longer approve this method.

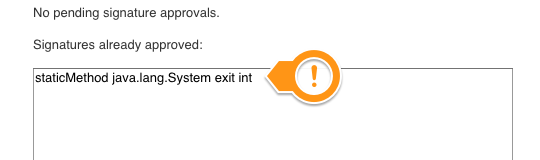

Case A ) If your Jenkins instance is able to fully load, then check under Manage Jenkins -> In-process Script Approval and ensure that you do not have the method java.lang.System exit in the approved methods:

If that entry is present, clear it with the Clear Approvals or Clear only dangerous Approvals button. Clear only dangerous Approvals leaves your other approved signatures in place.

Case B) If your Jenkins instance keeps restarting, stop it and open the file $JENKINS_HOME/scriptApproval.xml. Look for the line containing staticMethod java.lang.System exit int inside the approvedSignatures element:

```xml
<approvedSignatures>
  <string>staticMethod java.lang.System exit int</string>
  ...
</approvedSignatures>
```

If that line is present, delete it, save the file, and start Jenkins again. The remaining entries stay as they are:

```xml
<approvedSignatures>
  ...
</approvedSignatures>
```
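If you prefer to make this edit from a shell while Jenkins is stopped, the following is a minimal sketch, assuming a POSIX-style shell with grep and sed. The function name remove_exit_approval is hypothetical. It keeps a copy of the original file as scriptApproval.xml.bak and deletes only the line holding that exact signature, so the other approvals are untouched.

```shell
# Hypothetical helper: remove the System.exit approval from scriptApproval.xml.
# Run it only while Jenkins is stopped, then start Jenkins again.
remove_exit_approval() {
  # Default to the file under $JENKINS_HOME when no path is given
  local file="${1:-$JENKINS_HOME/scriptApproval.xml}"
  # The exact approved signature; dots are escaped so they match literally
  local pattern='staticMethod java\.lang\.System exit int'

  if grep -q "$pattern" "$file"; then
    # -i.bak edits in place and keeps the original as "$file.bak"
    # (the attached-suffix form works with both GNU and BSD sed)
    sed -i.bak "/$pattern/d" "$file"
    echo "Removed the System.exit approval from $file (backup: $file.bak)"
  else
    echo "No System.exit approval found in $file"
  fi
}
```

For example, `remove_exit_approval "$JENKINS_HOME/scriptApproval.xml"`. Deleting the whole line keeps the XML well-formed, because each approved signature sits in its own string element.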

Note: if a whole Groovy script was approved, rather than individual method signatures, only the hash of that script is shown, so System.exit does not appear by name.

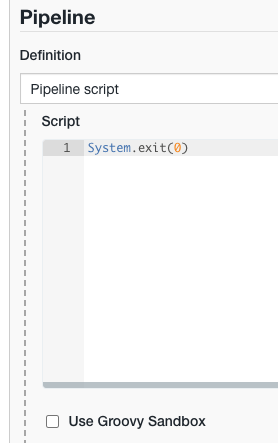

Scenario #2: System.exit runs outside the Groovy sandbox

Outside the sandbox, System.exit can be executed without any restriction.

For example, a Pipeline job may be configured not to use the Groovy Sandbox, or the System.exit call may reside in a global shared library, which by design always runs outside the sandbox.

To find where the System.exit call is located, you can:

Grep all files in $JENKINS_HOME:

```shell
grep -ri 'system.exit' "$JENKINS_HOME"
```

Search only the XML and Groovy files modified within the last day:

```shell
find "$JENKINS_HOME" -mtime -1 -type f \( -iname "*.xml" -o -iname "*.groovy" \) -print0 | xargs -0 grep -Hi 'system.exit'
```

Also search the SCM repositories where your Jenkinsfiles and shared libraries are stored.

Note: a search of the current SCM contents may return nothing if the commit containing System.exit was pushed and later deleted.
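For Git repositories, one way to cover both the current files and the history is the sketch below. It assumes a local clone of the repository that holds the Jenkinsfiles or shared library code; the function name search_repo_for_exit is hypothetical. `git log -S` lists commits that changed the number of occurrences of a string, so it also finds a call that was added and later removed, as long as those commits still exist in the repository. It cannot find commits that were rewritten out of history, for example by a force push.

```shell
# Hypothetical helper: look for System.exit in a local clone of a Git repository
# that stores Jenkinsfiles or shared library code.
search_repo_for_exit() {
  local repo="$1"

  echo "== Current files =="
  # -r recursive, -n line numbers, -I skip binary files; skip Git's own metadata.
  # grep exits with 1 when nothing matches, which is not an error here.
  grep -rnI --exclude-dir=.git 'System\.exit' "$repo" || true

  echo "== Commits on any ref that added or removed System.exit =="
  # -S (the "pickaxe") matches the literal string, case-sensitively,
  # which is enough because Groovy only compiles the exact spelling System.exit
  git -C "$repo" log --all --oneline -S'System.exit'
}
```

For example, `search_repo_for_exit ~/src/my-shared-library` (path hypothetical). Each commit it reports can then be inspected with `git show <commit>`.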

Further Troubleshooting

- When Jenkins stops, collect $JENKINS_HOME/org.jenkinsci.plugins.workflow.flow.FlowExecutionList.xml, and only then start Jenkins again. The file lists the Pipelines that were running before Jenkins shut down.
- If Jenkins keeps shutting down, start it in quiet-down mode to prevent jobs from running. If Jenkins then starts successfully, the shutdown is likely caused by one of its running jobs.
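Collecting FlowExecutionList.xml before the restart can be scripted so the step is not forgotten. This is a minimal sketch, assuming a POSIX-style shell; the function name save_flow_execution_list and the default destination directory under $HOME are hypothetical, so adjust them to your environment.

```shell
# Hypothetical helper: copy FlowExecutionList.xml aside before Jenkins is started
# again, so the list of Pipelines running at shutdown time is preserved.
save_flow_execution_list() {
  # Jenkins home and destination directory, both overridable
  local home="${1:-$JENKINS_HOME}"
  local dest="${2:-$HOME/jenkins-shutdown-$(date +%Y%m%d-%H%M%S)}"
  local src="$home/org.jenkinsci.plugins.workflow.flow.FlowExecutionList.xml"

  if [ ! -f "$src" ]; then
    echo "Not found: $src" >&2
    return 1
  fi
  mkdir -p "$dest" || return 1
  # -p preserves the modification time, showing when the list was last written
  cp -p "$src" "$dest/" || return 1
  echo "Saved $src to $dest"
}
```

Run it right after Jenkins stops, for example `save_flow_execution_list "$JENKINS_HOME"`, and only then start Jenkins.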

Tested product/plugin versions

Script Security plugin version 1.51

CloudBees Core CI on Traditional Platforms 2.303.3.3