Issue Summary

Starting in 2.249.2.3, the CloudBees High Availability (active/passive) plugin has been upgraded to 4.24, which updates its JGroups dependency to 4.0.2.Final+. This update was made to resolve a known memory leak.

This change requires CloudBees CI administrators to recreate the JGroups configuration if it was previously customized, because some fields have changed between JGroups 3.x and JGroups 4.x:

- MERGE2 -> MERGE3
- UNICAST -> UNICAST3
- Various TCP_NIO2 options were removed, for example several thread_pool and oob_thread_pool options.
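To check an existing file against the changes listed above before upgrading, a small script can help. The following is a minimal sketch, not a CloudBees tool: the function name `find_legacy_settings` is hypothetical, and the list of removed TCP_NIO2 attributes is taken only from the error message quoted in this article, so it may not be exhaustive.

```python
import xml.etree.ElementTree as ET

NS = "{urn:org:jgroups}"

# Protocol renames named in this article.
RENAMED_PROTOCOLS = {"MERGE2": "MERGE3", "UNICAST": "UNICAST3"}

# TCP_NIO2 attributes reported as "not recognized" in the article's stack trace.
# Assumption: this list is illustrative and may be incomplete.
REMOVED_TCP_NIO2 = {
    "max_bundle_timeout",
    "timer_type",
    "thread_pool.queue_enabled",
    "thread_pool.queue_max_size",
    "thread_pool.rejection_policy",
}
REMOVED_TCP_NIO2_PREFIXES = ("timer.", "oob_thread_pool.")


def find_legacy_settings(xml_text):
    """Return human-readable findings for JGroups 3.x-only settings."""
    root = ET.fromstring(xml_text)
    findings = []
    for element in root:
        name = element.tag.replace(NS, "")
        if name in RENAMED_PROTOCOLS:
            findings.append(f"protocol {name} -> {RENAMED_PROTOCOLS[name]}")
        if name == "TCP_NIO2":
            for attr in element.attrib:
                if attr in REMOVED_TCP_NIO2 or attr.startswith(REMOVED_TCP_NIO2_PREFIXES):
                    findings.append(f"TCP_NIO2 attribute removed: {attr}")
    return findings


if __name__ == "__main__":
    sample = (
        '<config xmlns="urn:org:jgroups">'
        '<TCP_NIO2 bind_port="1111" timer_type="new" thread_pool.enabled="true"/>'
        "<MERGE2/><UNICAST/>"
        "</config>"
    )
    for finding in find_legacy_settings(sample):
        print(finding)
```

An empty result does not guarantee that the file is valid for JGroups 4.x; it only means none of the settings named here were found.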

If the recommended migration updates are not completed, then the instance will fail to start with a stack trace, as illustrated in the following examples:

Typical stack trace when CloudBees CI fails to start because of a non-updated $JENKINS_HOME/jgroups.xml file

2020-10-14 10:30:14.066+0000 [id=1] SEVERE c.c.jenkins.ha.HASwitcher#reportFallback: CloudBees CI Operations Center appears to have failed to boot. If this is a problem in the HA feature, you can disable HA by specifying JENKINS_HA=false as environment variable

java.lang.IllegalArgumentException: JGRP000001: configuration error: the following properties in TCP_NIO2 are not recognized: {oob_thread_pool.enabled=true, timer.keep_alive_time=3000, thread_pool.queue_enabled=false, thread_pool.queue_max_size=100, oob_thread_pool.queue_max_size=100, oob_thread_pool.keep_alive_time=5000, oob_thread_pool.min_threads=1, oob_thread_pool.queue_enabled=false, oob_thread_pool.max_threads=8, oob_thread_pool.rejection_policy=discard, thread_pool.rejection_policy=discard, timer.queue_max_size=500, timer.min_threads=4, max_bundle_timeout=30, timer.max_threads=10, timer_type=new}

at org.jgroups.stack.Configurator.createLayer(Configurator.java:278)

at org.jgroups.stack.Configurator.createProtocols(Configurator.java:215)

at org.jgroups.stack.Configurator.setupProtocolStack(Configurator.java:82)

at org.jgroups.stack.Configurator.setupProtocolStack(Configurator.java:49)

at org.jgroups.stack.ProtocolStack.setup(ProtocolStack.java:475)

at org.jgroups.JChannel.init(JChannel.java:965)

at org.jgroups.JChannel.<init>(JChannel.java:148)

at org.jgroups.JChannel.<init>(JChannel.java:106)

at com.cloudbees.jenkins.ha.AbstractJenkinsSingleton.createChannel(AbstractJenkinsSingleton.java:143)

at com.cloudbees.jenkins.ha.singleton.HASingleton.start(HASingleton.java:86)

Caused: java.lang.Error: Failed to form a cluster

at com.cloudbees.jenkins.ha.singleton.HASingleton.start(HASingleton.java:179)

Typical stack trace when CloudBees CI fails to start because JGroups was customized through the GUI and the instance is running 2.249.2.3

2020-10-12 21:25:06.280+0000 [id=1] SEVERE winstone.Logger#logInternal: Container startup failed

java.lang.IllegalArgumentException: JGRP000001: configuration error: the following properties in com.cloudbees.jenkins.ha.singleton.CHMOD_FILE_PING are not recognized: {remove_old_files_on_view_change=true}

at org.jgroups.stack.Configurator.createLayer(Configurator.java:278)

at org.jgroups.stack.Configurator.createProtocols(Configurator.java:215)

at org.jgroups.stack.Configurator.setupProtocolStack(Configurator.java:82)

at org.jgroups.stack.Configurator.setupProtocolStack(Configurator.java:49)

at org.jgroups.stack.ProtocolStack.setup(ProtocolStack.java:475)

at org.jgroups.JChannel.init(JChannel.java:965)

at org.jgroups.JChannel.<init>(JChannel.java:148)

at org.jgroups.JChannel.<init>(JChannel.java:122)

at com.cloudbees.jenkins.ha.AbstractJenkinsSingleton.createChannel(AbstractJenkinsSingleton.java:176)

at com.cloudbees.jenkins.ha.singleton.HASingleton.start(HASingleton.java:86)

Caused: java.lang.Error: Failed to form a cluster

at com.cloudbees.jenkins.ha.singleton.HASingleton.start(HASingleton.java:179)

Environment

Affected instances are those which are using the CloudBees High Availability plugin and are customizing JGroups by placing a jgroups.xml file inside the $JENKINS_HOME directory. Note: If High Availability has been configured only via the GUI under Manage Jenkins -> Configure System -> High Availability Configuration, then the instance will not be affected and you can upgrade to version 2.249.2.4 or higher without changes.

Resolution

Restoring service quickly while you work on migrating your jgroups.xml

To restore service quickly while you work on migrating your jgroups.xml file, add the Java argument -Dcom.cloudbees.jenkins.ha=false to exactly one controller and restart it. This argument disables High Availability, so the instance should start without issues. While this workaround is applied, only the controller started with the argument is available and running.

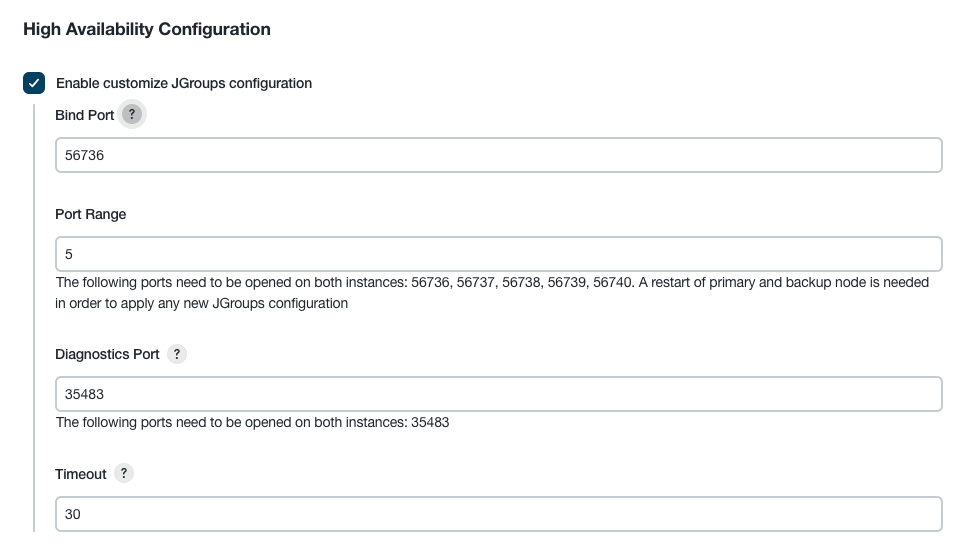

Upgrading from versions older than 2.249.2.3 to 2.303.2.3 or higher

For product versions 2.303.2.3 or higher, you no longer need a custom jgroups.xml to customize the HA timeout or ports, which is typically the only reason clients use a custom jgroups.xml. You can adjust these settings from the product by going to Manage Jenkins -> Configure System -> High Availability Configuration -> Enable customize JGroups configuration. You need to configure the ports you would like to use, and you can also configure the timeout (the default is 30 seconds).

Upgrading from versions older than 2.249.2.3 to versions 2.249.2.3 through 2.303.1.6

Instances with a customized JGroups file upgrading from a version older than 2.249.2.3 must update the current JGroups customization (by placing a jgroups.xml file inside the $JENKINS_HOME directory as explained below).

JGroups customization performed through $JENKINS_HOME/jgroups.xml

To migrate the JGroups configuration, you must determine what customization has previously been applied by comparing the jgroups.xml file with the reference file included in Appendix B: Example JGroups customization previous to 2.249.2.3.

On Unix-like systems, an easy way to see what customizations have been made is with the diff tool.

Copy the contents of the JGroups file from Appendix B and save it as jgroups-3-base.xml. Then run the command diff --color --ignore-all-space --unified=500 jgroups-3-base.xml jgroups.xml to show which lines have changed.

Removed lines start with a - symbol and added lines start with a + symbol. A changed line is displayed as a removed line followed by an added line.
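Where the diff tool is not available, a comparable report can be produced with Python's standard difflib module. This is a rough sketch under stated assumptions: the function name `whitespace_insensitive_diff` is hypothetical, and unlike diff --ignore-all-space it prints the whitespace-stripped form of each line and skips blank lines, so it only approximates the command above.

```python
import difflib


def whitespace_insensitive_diff(base_text, custom_text,
                                base_name="jgroups-3-base.xml",
                                custom_name="jgroups.xml"):
    """Return unified-diff lines, comparing lines with all whitespace removed."""

    def normalize(text):
        # Remove every whitespace character within a line and drop empty lines.
        return ["".join(line.split()) for line in text.splitlines() if line.strip()]

    return list(
        difflib.unified_diff(
            normalize(base_text),
            normalize(custom_text),
            fromfile=base_name,
            tofile=custom_name,
            n=500,          # large context, like --unified=500
            lineterm="",
        )
    )


if __name__ == "__main__":
    base = 'max_bundle_size="64K"\n    bind_port="${HA_BIND_PORT}"\n'
    custom = 'max_bundle_size="64K"\nbind_port="56736"\n'
    print("\n".join(whitespace_insensitive_diff(base, custom)))
```

As with the diff command, lines prefixed with - come from the base file and lines prefixed with + come from the customized file.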

Depending on the scope of the changes identified above, different paths are recommended. If only ports have been configured, we recommend removing the jgroups.xml file from $JENKINS_HOME, starting a single instance, and configuring the HA ports inside the GUI. Otherwise, the old configuration entries need to be mapped to the new configuration format.

Only ports have been configured if the only entries that differ are config/TCP_NIO2/bind_port, config/TCP_NIO2/port_range, and config/TCP_NIO2/diagnostics_port.

The output of the diff command from above will look like the following for this case:

--- jgroups-3-base.xml 2020-10-14 14:40:24.918727200 +0100
+++ jgroups.xml 2020-10-14 11:09:19.640017900 +0100
@@ -1,68 +1,68 @@
 <!-- HA setup; may be overridden with $JENKINS_HOME/jgroups.xml -->
 <config xmlns="urn:org:jgroups"
         xmlns:xsi="https://www.w3.org/2001/XMLSchema-instance"
         xsi:schemaLocation="urn:org:jgroups https://www.jgroups.org/schema/JGroups-3.1.xsd">
     <TCP_NIO2
             recv_buf_size="${tcp.recv_buf_size:128K}"
             send_buf_size="${tcp.send_buf_size:128K}"
             max_bundle_size="64K"
             max_bundle_timeout="30"
             sock_conn_timeout="1000"
-            bind_port="${HA_BIND_PORT}"
-            port_range="${HA_PORT_RANGE}"
-            diagnostics_port="${HA_DIAGNOSTIC_PORT}"
+            bind_port="56736"
+            port_range="5"
+            diagnostics_port="35483"
             timer_type="new"
             timer.min_threads="4"
             timer.max_threads="10"
             timer.keep_alive_time="3000"
             timer.queue_max_size="500"
             thread_pool.enabled="true"
             thread_pool.min_threads="1"
             thread_pool.max_threads="10"
             thread_pool.keep_alive_time="5000"
             thread_pool.queue_enabled="false"
             thread_pool.queue_max_size="100"
             thread_pool.rejection_policy="discard"
             oob_thread_pool.enabled="true"
             oob_thread_pool.min_threads="1"
             oob_thread_pool.max_threads="8"
             oob_thread_pool.keep_alive_time="5000"
             oob_thread_pool.queue_enabled="false"
             oob_thread_pool.queue_max_size="100"
             oob_thread_pool.rejection_policy="discard"/>
     <CENTRAL_LOCK />
     <com.cloudbees.jenkins.ha.singleton.CHMOD_FILE_PING
             location="${HA_JGROUPS_DIR}"
             remove_old_coords_on_view_change="true"
             remove_all_files_on_view_change="true"/>
     <MERGE2 max_interval="30000"
             min_interval="10000"/>
     <FD_SOCK/>
     <FD timeout="3000" max_tries="3" />
     <VERIFY_SUSPECT timeout="1500" />
     <BARRIER />
     <pbcast.NAKACK2 use_mcast_xmit="false"
             discard_delivered_msgs="true"/>
     <UNICAST />
     <!--
     When a new node joins a cluster, initial message broadcast doesn't necessarily seem
     to arrive. Using a shorter cycles in the STABLE protocol makes the cluster recognize
     this dropped transmission and cause a retransmission.
     -->
     <pbcast.STABLE stability_delay="1000" desired_avg_gossip="50000"
             max_bytes="4M"/>
     <pbcast.GMS print_local_addr="true" join_timeout="3000"
             view_bundling="true"
             max_join_attempts="5"/>
     <MFC max_credits="2M"
             min_threshold="0.4"/>
     <FRAG2 frag_size="60K" />
     <pbcast.STATE_TRANSFER />
     <!-- pbcast.FLUSH /-->
 </config>
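The "only ports have been configured" check can also be made at the attribute level rather than by reading diff output. The following is a minimal sketch, assuming both files parse as XML; the helper names (`settings`, `changed_settings`, `only_ports_changed`) are hypothetical. It ignores attributes on the root config element and does not detect reordered or duplicated protocols, so treat the result as a hint and confirm it against the diff.

```python
import xml.etree.ElementTree as ET

# The three entries that may differ in the "only ports" case.
PORT_SETTINGS = {
    "config/TCP_NIO2/bind_port",
    "config/TCP_NIO2/port_range",
    "config/TCP_NIO2/diagnostics_port",
}


def settings(xml_text):
    """Flatten a JGroups config into {"config/<protocol>/<attribute>": value}."""
    root = ET.fromstring(xml_text)
    flat = {}
    for protocol in root:
        name = protocol.tag.split("}")[-1]   # strip the XML namespace
        flat[f"config/{name}"] = ""          # records that the protocol is present
        for attr, value in protocol.attrib.items():
            flat[f"config/{name}/{attr}"] = value
    return flat


def changed_settings(base_xml, custom_xml):
    """Return the set of entries that were added, removed, or changed."""
    base, custom = settings(base_xml), settings(custom_xml)
    return {key for key in base.keys() | custom.keys()
            if base.get(key) != custom.get(key)}


def only_ports_changed(base_xml, custom_xml):
    """True if something changed and every change is one of the port entries."""
    changed = changed_settings(base_xml, custom_xml)
    return bool(changed) and changed <= PORT_SETTINGS
```

In use, `base_xml` would be the contents of jgroups-3-base.xml (Appendix B) and `custom_xml` the contents of the jgroups.xml from $JENKINS_HOME. If `only_ports_changed` returns False, `changed_settings` lists the entries that need a fuller migration.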

Migrating from a customized JGroups configuration file to in-product GUI configuration

Note: This migration requires 2.249.2.4 or higher.

Starting with CloudBees High Availability version 4.8, the ports can be configured via the UI. Open Manage Jenkins > Configure System and locate the High Availability Configuration section. Enable the customization, specify the ports, and restart both nodes in the HA singleton.

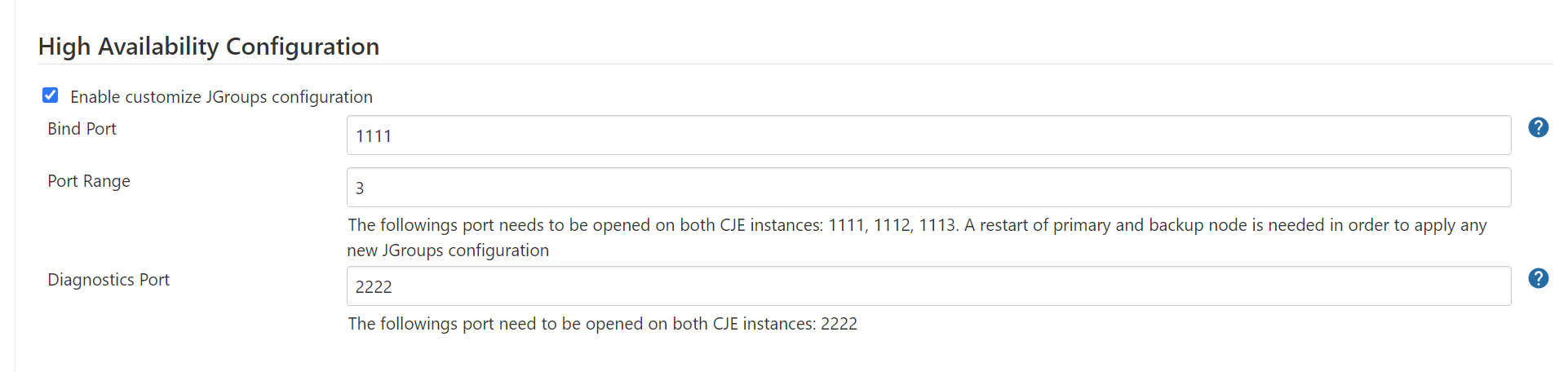

Add port customization from a customized jgroups.xml file to the GUI as follows:

- Bind Port in the GUI corresponds to bind_port in the jgroups.xml
- Port Range in the GUI corresponds to port_range in the jgroups.xml
- Diagnostics Port in the GUI corresponds to diagnostics_port in the jgroups.xml

For example, if your jgroups.xml had the following entries, you would set Bind Port to 1111, Port Range to 3, and Diagnostics Port to 2222 in the GUI:

- bind_port="1111"
- port_range="3"
- diagnostics_port="2222"
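The values to enter in the GUI can be read from the customized file before it is removed. A short sketch follows, using the field-to-attribute mapping listed above; the function name `gui_port_values` is hypothetical and the inline sample stands in for the real file contents.

```python
import xml.etree.ElementTree as ET

# GUI field name -> TCP_NIO2 attribute, as listed in this section.
GUI_FIELDS = {
    "Bind Port": "bind_port",
    "Port Range": "port_range",
    "Diagnostics Port": "diagnostics_port",
}


def gui_port_values(xml_text):
    """Return {GUI field: value} read from the TCP_NIO2 element."""
    root = ET.fromstring(xml_text)
    tcp = root.find("{urn:org:jgroups}TCP_NIO2")
    if tcp is None:
        raise ValueError("no TCP_NIO2 element found")
    return {field: tcp.get(attr) for field, attr in GUI_FIELDS.items()}


if __name__ == "__main__":
    example = (
        '<config xmlns="urn:org:jgroups">'
        '<TCP_NIO2 bind_port="1111" port_range="3" diagnostics_port="2222"/>'
        "</config>"
    )
    for field, value in gui_port_values(example).items():
        print(f"{field}: {value}")
```

A value of None means the attribute is not set in the file, and a value such as ${HA_BIND_PORT} means that port was never customized.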

Migrating from a customized JGroups configuration to the newer format JGroups configuration

If more values have changed than just the ports, then a fuller migration will be required.

In the majority of cases, the only additional values that will have been changed are the timeouts like in the following example:

<FD timeout="20000" max_tries="3" />
<VERIFY_SUSPECT timeout="5000" />

To migrate the values, first create a backup of your old jgroups.xml configuration (mv jgroups.xml jgroups.xml.pre-migration) and then place a copy of the new jgroups.xml configuration from Appendix A: Example JGroups customization for version 2.249.2.3 or higher in $JENKINS_HOME.

- Open the jgroups.xml file in your text editor.
- Replace ${HA_BIND_PORT} with the value from your old configuration file.
- Replace ${HA_PORT_RANGE} with the value from your old configuration file.
- Replace ${HA_DIAGNOSTIC_PORT} with the value from your old configuration file.
- Replace the <FD timeout="3000" max_tries="3" /> entry with the corresponding line from your old configuration file.
- Replace the <VERIFY_SUSPECT timeout="1500" /> entry with the corresponding line from your old configuration file.
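The replacement steps above can be scripted when several instances need the same migration. This is a minimal sketch, not a supported tool: the function name `migrate` is hypothetical, it assumes the template is the Appendix A file with its placeholders and default FD and VERIFY_SUSPECT entries intact, and it carries over only the ports and those two entries. Review the result by hand before starting the instance.

```python
import re
import xml.etree.ElementTree as ET
from xml.sax.saxutils import quoteattr

NS = {"jg": "urn:org:jgroups"}

# Placeholder in the new template -> TCP_NIO2 attribute in the old file.
PORT_PLACEHOLDERS = {
    "${HA_BIND_PORT}": "bind_port",
    "${HA_PORT_RANGE}": "port_range",
    "${HA_DIAGNOSTIC_PORT}": "diagnostics_port",
}


def migrate(old_xml, template_xml):
    """Copy ports and FD / VERIFY_SUSPECT settings from old_xml into template_xml."""
    old = ET.fromstring(old_xml)
    migrated = template_xml

    tcp = old.find("jg:TCP_NIO2", NS)
    for placeholder, attr in PORT_PLACEHOLDERS.items():
        value = tcp.get(attr) if tcp is not None else None
        if value is not None:
            migrated = migrated.replace(placeholder, value)

    for protocol in ("FD", "VERIFY_SUSPECT"):
        element = old.find(f"jg:{protocol}", NS)
        if element is None:
            continue  # keep the template's default entry
        attrs = " ".join(f"{k}={quoteattr(v)}" for k, v in element.attrib.items())
        # "\s" after the name keeps <FD ...> from matching <FD_SOCK/>.
        migrated = re.sub(
            rf"<{protocol}\s[^>]*/>",
            lambda match: f"<{protocol} {attrs} />",
            migrated,
        )
    return migrated
```

A typical use would read the backup (jgroups.xml.pre-migration) as `old_xml`, read the Appendix A copy as `template_xml`, and write the returned text to $JENKINS_HOME/jgroups.xml. Working on the text of the template, rather than re-serializing it, keeps its comments and layout unchanged.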

If your file contains more customizations than those outlined above and you are not familiar with JGroups, please open a ticket with support and include the contents of the file in the ticket.

Appendix A: Example JGroups customization for version 2.249.2.3 or higher

<!-- HA setup; may be overridden with $JENKINS_HOME/jgroups.xml -->
<config xmlns="urn:org:jgroups"
        xmlns:xsi="https://www.w3.org/2001/XMLSchema-instance"
        xsi:schemaLocation="urn:org:jgroups https://www.jgroups.org/schema/jgroups-4.0.xsd">
    <TCP_NIO2
            recv_buf_size="${tcp.recv_buf_size:128K}"
            send_buf_size="${tcp.send_buf_size:128K}"
            max_bundle_size="64K"
            sock_conn_timeout="1000"
            bind_port="${HA_BIND_PORT}"
            port_range="${HA_PORT_RANGE}"
            diagnostics_port="${HA_DIAGNOSTIC_PORT}"
            thread_pool.enabled="true"
            thread_pool.min_threads="1"
            thread_pool.max_threads="10"
            thread_pool.keep_alive_time="5000"/>
    <CENTRAL_LOCK />
    <com.cloudbees.jenkins.ha.singleton.CHMOD_FILE_PING
            location="${HA_JGROUPS_DIR}"
            remove_old_coords_on_view_change="true"/>
    <MERGE3 max_interval="30000"
            min_interval="10000"/>
    <FD_SOCK/>
    <FD timeout="3000" max_tries="3" />
    <VERIFY_SUSPECT timeout="1500" />
    <BARRIER />
    <pbcast.NAKACK2 use_mcast_xmit="false"
            discard_delivered_msgs="true"/>
    <UNICAST3 />
    <!--
    When a new node joins a cluster, initial message broadcast doesn't necessarily seem
    to arrive. Using a shorter cycles in the STABLE protocol makes the cluster recognize
    this dropped transmission and cause a retransmission.
    -->
    <pbcast.STABLE stability_delay="1000" desired_avg_gossip="50000"
            max_bytes="4M"/>
    <pbcast.GMS print_local_addr="true" join_timeout="3000"
            view_bundling="true"
            max_join_attempts="5"/>
    <MFC max_credits="2M"
            min_threshold="0.4"/>
    <FRAG2 frag_size="60K" />
    <pbcast.STATE_TRANSFER />
    <!-- pbcast.FLUSH /-->
</config>

Appendix B: Example JGroups customization previous to 2.249.2.3

<!-- HA setup; may be overridden with $JENKINS_HOME/jgroups.xml -->
<config xmlns="urn:org:jgroups"
        xmlns:xsi="https://www.w3.org/2001/XMLSchema-instance"
        xsi:schemaLocation="urn:org:jgroups https://www.jgroups.org/schema/JGroups-3.1.xsd">
    <TCP_NIO2
            recv_buf_size="${tcp.recv_buf_size:128K}"
            send_buf_size="${tcp.send_buf_size:128K}"
            max_bundle_size="64K"
            max_bundle_timeout="30"
            sock_conn_timeout="1000"
            bind_port="${HA_BIND_PORT}"
            port_range="${HA_PORT_RANGE}"
            diagnostics_port="${HA_DIAGNOSTIC_PORT}"
            timer_type="new"
            timer.min_threads="4"
            timer.max_threads="10"
            timer.keep_alive_time="3000"
            timer.queue_max_size="500"
            thread_pool.enabled="true"
            thread_pool.min_threads="1"
            thread_pool.max_threads="10"
            thread_pool.keep_alive_time="5000"
            thread_pool.queue_enabled="false"
            thread_pool.queue_max_size="100"
            thread_pool.rejection_policy="discard"
            oob_thread_pool.enabled="true"
            oob_thread_pool.min_threads="1"
            oob_thread_pool.max_threads="8"
            oob_thread_pool.keep_alive_time="5000"
            oob_thread_pool.queue_enabled="false"
            oob_thread_pool.queue_max_size="100"
            oob_thread_pool.rejection_policy="discard"/>
    <CENTRAL_LOCK />
    <com.cloudbees.jenkins.ha.singleton.CHMOD_FILE_PING
            location="${HA_JGROUPS_DIR}"
            remove_old_coords_on_view_change="true"
            remove_all_files_on_view_change="true"/>
    <MERGE2 max_interval="30000"
            min_interval="10000"/>
    <FD_SOCK/>
    <FD timeout="3000" max_tries="3" />
    <VERIFY_SUSPECT timeout="1500" />
    <BARRIER />
    <pbcast.NAKACK2 use_mcast_xmit="false"
            discard_delivered_msgs="true"/>
    <UNICAST />
    <!--
    When a new node joins a cluster, initial message broadcast doesn't necessarily seem
    to arrive. Using a shorter cycles in the STABLE protocol makes the cluster recognize
    this dropped transmission and cause a retransmission.
    -->
    <pbcast.STABLE stability_delay="1000" desired_avg_gossip="50000"
            max_bytes="4M"/>
    <pbcast.GMS print_local_addr="true" join_timeout="3000"
            view_bundling="true"
            max_join_attempts="5"/>
    <MFC max_credits="2M"
            min_threshold="0.4"/>
    <FRAG2 frag_size="60K" />
    <pbcast.STATE_TRANSFER />
    <!-- pbcast.FLUSH /-->
</config>