Issue

- I want to separate traffic coming from external TCP or WebSocket agents and external TCP or WebSocket controllers.
- I want to deploy an Ingress Controller dedicated to external TCP or WebSocket agents and external TCP or WebSocket controllers.

Explanation

A typical use case, and the one this article outlines, is to separate the traffic of general users from the traffic of external agents and controllers.

In the case of Ingress Nginx, any change to the Ingress objects processed by an Ingress Controller causes Nginx in each Ingress Controller pod to reload and, after a timeout, to close long-lasting connections such as those of external TCP / WebSocket agents and controllers. At the time of writing, this timeout is 4 minutes (see `worker-shutdown-timeout`). In CloudBees CI prior to version 2.277.2.x, starting, stopping, or restarting ANY Managed Controller recreates the Ingress object of that controller. This causes Nginx to reload in all Ingress Controller pods, which eventually disconnects the external agents and controllers.

Isolating traffic in a different Ingress Controller improves the stability of the connection of external agents and controllers.

Resolution

The general idea is to replicate the original Ingress Controller solution for a different host:

1. Create a replica of the current Ingress Controller deployment with:
   - A different hostname (DNS record)
   - A different Ingress Class
   - In most cases (Service of type `LoadBalancer`), a distinct Load Balancer
   - In some cases (when a wildcard DNS record / certificate cannot be used), a distinct TLS certificate/key pair

2. For each CloudBees CI component that must accept external traffic, create a replica of the Ingress object with:
   - A different hostname
   - A different Ingress Class
   - A different name

3. Reconnect external agents and controllers via the new hostname

Note: Due to the many possible combinations of configuration in an Ingress Controller deployment (layer of the Load Balancer in front, location of the TLS termination, Service type, use of Proxy Protocol, etc.), it is nearly impossible to document a single detailed solution. The general recommendation is to use the original Ingress Controller configuration as a base and adapt it.

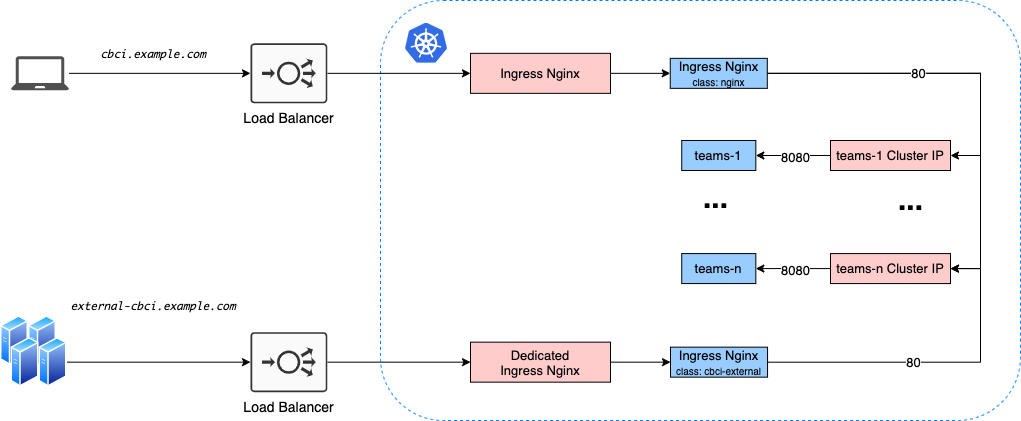

Architecture

(Note: what looks like two Load Balancers could be just one Load Balancer with host-based capabilities).

Steps

1. Dedicated Ingress Controller Deployment

1.1. Deploy the dedicated Ingress Controller

The dedicated Ingress Controller deployment should be the same as the one already in place, the main difference being the Ingress Class, which must be distinct. Here is a very simple example of helm values that sets the Ingress Class:

```yaml
rbac:
  create: true
defaultBackend:
  enabled: false
controller:
  ingressClass: "cbci-external"
```

Note: This is a very basic example. It should be adjusted to the environment.

1.2. Set Up the Load Balancer (only if using NodePort)

If using a Service of type NodePort for the Ingress Controller, configure the Load Balancer (or a new Load Balancer) to forward traffic from the dedicated hostname to the Ingress Controller Service nodePorts.
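To find which ports the Load Balancer must target, one option is to read them from the Service of the dedicated Ingress Controller. The sketch below assumes the Service was exported with `kubectl get svc <service> -o json` (the Service name depends on your helm release and is not given in this article); the function name `node_ports` is hypothetical.

```python
def node_ports(service: dict) -> dict:
    """Map each port of a NodePort Service to its (service port, nodePort) pair.

    `service` is assumed to be the parsed JSON output of
    `kubectl get svc <ingress-controller-service> -o json`.
    """
    spec = service.get("spec", {})
    if spec.get("type") != "NodePort":
        raise ValueError("expected a Service of type NodePort")
    ports = {}
    for port in spec.get("ports", []):
        # Unnamed ports are keyed by their service port number instead.
        key = port.get("name", str(port["port"]))
        ports[key] = (port["port"], port["nodePort"])
    return ports
```

For a typical ingress-nginx Service this yields one entry per port (for example `http` and `https`); the Load Balancer listeners and health checks then target those nodePort values on the cluster nodes.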

Note: This should be similar to what was done for the original Ingress Controller deployment, but for a different hostname and different ports. The health check configuration should also be similar, but with different ports.

1.3. Set Up the DNS

Configure the DNS Server so that the dedicated DNS hostname points to the Load Balancer in front of the dedicated Ingress Controller.
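When the dedicated Ingress Controller uses a Service of type `LoadBalancer`, the address the DNS record must point to is published in the Service status, either as an IP or as a hostname depending on the cloud provider. The hypothetical helper below, a sketch assuming the Service JSON from `kubectl get svc <service> -o json`, picks the matching record type. With NodePort, point the record at the Load Balancer configured in the previous step instead.

```python
def dns_record_for(service: dict) -> tuple:
    """Return (record type, target) for the address of a LoadBalancer Service.

    The address is read from status.loadBalancer.ingress: an IP calls for an
    A (IPv4) or AAAA (IPv6) record, a hostname calls for a CNAME record.
    """
    entries = service.get("status", {}).get("loadBalancer", {}).get("ingress", [])
    if not entries:
        raise ValueError("the Load Balancer has no address yet")
    first = entries[0]
    if first.get("ip"):
        ip = first["ip"]
        return ("AAAA" if ":" in ip else "A", ip)
    return ("CNAME", first["hostname"])
```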

Note: This is similar to what was done for the original Ingress Controller deployment, but for a different hostname and, depending on the environment, a different Load Balancer.

2. CloudBees CI Configuration

2.1. Add an Ingress for the Operations Center

Note: This is required if using external Shared Agents and/or connecting external controllers.

2.1.1. Retrieve the current Ingress of Operations Center with `kubectl get ing cjoc -o yaml` and use it as a base. Then:

- Replace the value of the `kubernetes.io/ingress.class` annotation with the Ingress Class of the dedicated Ingress Controller, for example `cbci-external`.
- Replace the value of `.spec.rules[*].host` with the dedicated DNS hostname that will serve that Ingress, for example `external-cbci.example.com`.
- Replace the value of `.metadata.name` with a unique name, for example `cjoc-external`.
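The edits above can also be scripted. The sketch below is a hypothetical helper, assuming the Ingress was exported as JSON (`kubectl get ing cjoc -o json`) rather than YAML; it applies the three replacements and drops server-populated metadata so the result can be created as a new object. It does not touch `spec.tls`, which may need its own hostname if TLS terminates at the Ingress Controller.

```python
import copy

# Fields set by the API server (or by kubectl apply) that must not be
# carried over into a brand-new object.
_SERVER_FIELDS = ("uid", "resourceVersion", "creationTimestamp", "generation",
                  "managedFields", "selfLink",
                  # Dropped so the manually managed copy is not garbage-collected
                  # together with the original's owner.
                  "ownerReferences")


def make_external_ingress(original: dict, ingress_class: str, host: str, name: str) -> dict:
    """Return a copy of `original` retargeted at the dedicated Ingress Controller."""
    ing = copy.deepcopy(original)  # never mutate the exported object
    ing.pop("status", None)
    meta = ing["metadata"]
    for field in _SERVER_FIELDS:
        meta.pop(field, None)
    annotations = meta.setdefault("annotations", {})
    annotations.pop("kubectl.kubernetes.io/last-applied-configuration", None)
    annotations["kubernetes.io/ingress.class"] = ingress_class
    meta["name"] = name
    for rule in ing["spec"].get("rules", []):
        rule["host"] = host
    return ing
```

For example, `make_external_ingress(exported, "cbci-external", "external-cbci.example.com", "cjoc-external")` can be written back out with `json.dump` and created with `kubectl apply -n <namespace> -f <file>.json`, since kubectl accepts JSON manifests.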

2.1.2. Create this Ingress in the namespace where Operations Center is deployed.

Here is a full example:

```yaml
---
apiVersion: "networking.k8s.io/v1beta1"  # assumed; use the apiVersion of the Ingress retrieved above
kind: "Ingress"
metadata:
  annotations:
    kubernetes.io/ingress.class: "cbci-external"
    nginx.ingress.kubernetes.io/proxy-body-size: "50m"
    nginx.ingress.kubernetes.io/proxy-request-buffering: "off"
    nginx.ingress.kubernetes.io/ssl-redirect: "false"
    nginx.ingress.kubernetes.io/app-root: "/cjoc/teams-check/"
  name: "cjoc-external"
spec:
  rules:
  - host: "external-cbci.example.com"
    http:
      paths:
      - backend:
          serviceName: "cjoc"
          servicePort: 80
        path: "/cjoc/"
      - backend:
          serviceName: "cjoc"
          servicePort: 80
        path: "/cjoc/*"
```

Multi-Cluster environment

For a multi-cluster environment:

- In the Operations Center under , go to the configuration of the Kubernetes Endpoint.
- Change the Jenkins URL to the dedicated URL `https://external-cbci.example.com/cjoc`.

When the Managed controllers are restarted, they will connect to Operations Center using the dedicated URL.

2.2. Add an Ingress for the controller

(IMPORTANT Note: In versions earlier than 2.277.2.x, the Ingresses of a controller that are managed by Operations Center are recreated after a controller restart. This provokes a reload of each Ingress Nginx pod and closes long-lasting connections such as TCP/JNLP and WebSocket connections. For that reason, it is recommended to create dedicated Ingresses manually rather than through the Managed Controller's configuration.)

2.2.1. Retrieve the current Ingress of a typical controller with `kubectl get ing <controllerName> -o yaml` and use it as a base. Then:

- Replace the value of the `kubernetes.io/ingress.class` annotation with the Ingress Class of the dedicated Ingress Controller, for example `cbci-external`.
- Replace the value of `.spec.rules[*].host` with the dedicated DNS hostname that will serve that Ingress, for example `external-cbci.example.com`.
- Replace the value of `.metadata.name` with a unique name, for example `${name}-external`.
- Replace any reference to the original controller's name with the name of the controller you are creating the Ingress for.

2.2.2. Create this Ingress in the namespace where the Managed Controller is deployed.

Here is a full example (replace `${name}` with the controller name):

```yaml
---
apiVersion: "networking.k8s.io/v1beta1"  # assumed; use the apiVersion of the Ingress retrieved above
kind: "Ingress"
metadata:
  annotations:
    kubernetes.io/ingress.class: "cbci-external"
    nginx.ingress.kubernetes.io/proxy-body-size: "50m"
    nginx.ingress.kubernetes.io/proxy-request-buffering: "off"
    nginx.ingress.kubernetes.io/ssl-redirect: "false"
    nginx.ingress.kubernetes.io/app-root: "/cjoc/teams-check/"
  labels:
    type: "controller"
    tenant: "${name}"
    com.cloudbees.pse.type: "controller"
    com.cloudbees.cje.type: "controller"
    com.cloudbees.pse.tenant: "${name}"
    com.cloudbees.cje.tenant: "${name}"
  name: "${name}-external"
spec:
  rules:
  - host: "external-cbci.example.com"
    http:
      paths:
      - backend:
          serviceName: "${name}"
          servicePort: 80
        path: "/${name}/"
      - backend:
          serviceName: "${name}"
          servicePort: 80
        path: "/${name}/*"
```
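With many controllers, the controller example can serve as a template as-is, because Python's `string.Template` uses the same `${name}` placeholder syntax. The sketch below assumes the example was saved verbatim to a file (for instance a hypothetical `controller-ingress-external.yaml`); the function name is also hypothetical. `substitute` is strict, so any other unresolved `$` placeholder raises an error instead of being applied silently.

```python
from string import Template


def render_controller_ingresses(template_text: str, controller_names: list) -> str:
    """Render one Ingress document per controller from a ${name} template."""
    template = Template(template_text)
    docs = []
    for name in controller_names:
        doc = template.substitute(name=name).strip()
        # Keep every rendered document separated by the YAML separator.
        if not doc.startswith("---"):
            doc = "---\n" + doc
        docs.append(doc)
    return "\n".join(docs) + "\n"
```

The output is a multi-document YAML stream that can be piped to `kubectl apply -n <namespace> -f -`; controllers living in different namespaces need one call per namespace.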

3. Reconnect External Agents / Controllers

3.1. External Agents

Reconfigure / reconnect external agents so that they connect to the controllers using the dedicated hostname. For example, with the raw approach to launching agents:

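```shell
# hudson.remoting.jnlp.Main takes two positional arguments: the secret, then the agent name.
java -cp agent.jar hudson.remoting.jnlp.Main -headless -internalDir "remoting" -workDir "${workDir}" -url "https://external-cbci.example.com/${name}/" -webSocket "${agentSecret}" "${agentName}"
```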
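When agents are launched from a script, building the argument list programmatically avoids shell-quoting mistakes. The sketch below is a hypothetical helper that mirrors the raw launch command with the dedicated hostname as default; it assumes, as the Jenkins inbound-agent image does, that `hudson.remoting.jnlp.Main` expects the secret before the agent name.

```python
def agent_command(controller: str, agent_name: str, agent_secret: str,
                  work_dir: str, host: str = "external-cbci.example.com") -> list:
    """Build the argv that launches an inbound agent over WebSocket
    against the dedicated hostname."""
    url = f"https://{host}/{controller.strip('/')}/"
    return [
        "java", "-cp", "agent.jar", "hudson.remoting.jnlp.Main",
        "-headless",
        "-internalDir", "remoting",
        "-workDir", work_dir,
        "-url", url,
        "-webSocket",
        # Positional arguments: secret first, then agent name.
        agent_secret, agent_name,
    ]
```

The list can be passed directly to `subprocess.run(..., check=True)` without going through a shell.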

Considerations / Caveats

Maintainability

This solution requires maintaining an additional Ingress Controller, as well as additional CloudBees CI component Ingresses that are managed manually to avoid Nginx reloads.

Reloads Still Required but More Manageable

The dedicated Ingress Controller watches only the dedicated Ingresses and serves that traffic only. This means it is NOT subject to reloads caused by typical Operations Center operations such as Managed Controller stops, starts, and restarts. However, it is subject to reloads whenever a dedicated Ingress is added or deleted. That being said, with this setup those reloads are manageable and controllable: since the dedicated Ingresses are created manually, the administrator decides when to add or remove them and can plan for it with maintenance windows.