| This article references an issue that affects a product version that is no longer supported. Please verify the version listed in the article applies to your situation. If unsure, please submit a support ticket at: https://support.cloudbees.com/. |

Issue

A Managed controller or Operations Center fails during provisioning and shows an error similar to one of the following (it might be related to manual scaling):

Pod : Pod currently Pending. Reason: Unschedulable -> 0/4 nodes are available: 1 Insufficient cpu, 1 node(s) had taints that the pod didn't tolerate, 2 node(s) had no available volume zone.

or

Warning FailedScheduling 13m (x88 over 142m) default-scheduler 0/1 nodes are available: 1 node(s) had volume node affinity conflict.

Explanation

The Kubernetes scheduler does not take zone constraints into account when zone-specific storage, such as AWS EBS, is dynamically provisioned. The PersistentVolumeClaim is created first, and only then does volume binding / dynamic provisioning occur. This can lead to unexpected behavior where the volume backing the PVC is created in a zone that has no resources available for scheduling the pod.

When persistent volumes are created, the PersistentVolumeLabel admission controller automatically adds zone labels to them: topology.kubernetes.io/zone (previously failure-domain.beta.kubernetes.io/zone). For example, for the volume of a given CJOC:

    $> kubectl describe pv pvc-0c7a3250-5da0-11e8-9391-42010a840fe8 -n cje-support-general
    Name:    pvc-0c7a3250-5da0-11e8-9391-42010a840fe8
    Labels:  failure-domain.beta.kubernetes.io/region=europe-west1
             failure-domain.beta.kubernetes.io/zone=europe-west1-b
    [...]
    Claim:   cje-support-general/jenkins-home-cjoc-0
    [...]

The scheduler will then ensure that pods that claim a given volume are only placed into the same zone as that volume (as long as it has enough capacity), as volumes cannot be attached across zones.

Thus, the node affinity conflict occurs when the application (Operations Center or Managed controller) is moved to an Availability Zone other than the one where it was initially created, while its volume remains in the original Availability Zone.
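To confirm this diagnosis, the zone of the volume can be compared with the zones of the nodes. The following is a minimal sketch, assuming the PV carries a zone label as in the example above (volumes from some provisioners may only expose the zone under spec.nodeAffinity). The function names are illustrative and not part of any CloudBees tooling; only standard kubectl get calls with jsonpath output are used.

```shell
# Print the name of the PV bound to a PVC.
pvc_volume() {
  local pvc="$1" namespace="$2"
  kubectl get pvc "$pvc" -n "$namespace" -o jsonpath='{.spec.volumeName}'
}

# Print the zone label of a PV: current label first, legacy label as a fallback.
pv_zone() {
  local pv="$1" zone
  zone="$(kubectl get pv "$pv" -o jsonpath='{.metadata.labels.topology\.kubernetes\.io/zone}')"
  if [ -z "$zone" ]; then
    zone="$(kubectl get pv "$pv" -o jsonpath='{.metadata.labels.failure-domain\.beta\.kubernetes\.io/zone}')"
  fi
  printf '%s\n' "$zone"
}

# Print the zone label of every node, separated by spaces.
node_zones() {
  kubectl get nodes -o jsonpath='{.items[*].metadata.labels.topology\.kubernetes\.io/zone}'
}

# Succeed (exit 0) when the wanted zone appears among the remaining arguments.
zone_has_node() {
  local wanted="$1" zone
  shift
  for zone in "$@"; do
    if [ "$zone" = "$wanted" ]; then
      return 0
    fi
  done
  return 1
}

# Example (names taken from the output above; node_zones is left unquoted on purpose):
#   pv="$(pvc_volume jenkins-home-cjoc-0 cje-support-general)"
#   zone_has_node "$(pv_zone "$pv")" $(node_zones) || echo "no node in the volume's zone"
```

If no node exists in the volume's zone, or the nodes there are full or tainted, the pod stays Pending with one of the messages shown in the Issue section.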

Resolution

Kubernetes 1.12 and later

Kubernetes 1.12 introduces a feature called Volume Binding Mode to control that behavior.

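It requires the VolumeScheduling feature gate to be enabled, which is the default since Kubernetes 1.10. You need to add the field volumeBindingMode: WaitForFirstConsumer to the default storage class. With this mode, volume binding and dynamic provisioning are delayed until a pod that uses the PVC is created, so the volume is provisioned in a zone where the pod can actually be scheduled.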

Here is an example:

    kind: StorageClass
    apiVersion: storage.k8s.io/v1
    metadata:
      name: test-storage
    volumeBindingMode: WaitForFirstConsumer
    ...

Notes:

- Supported plugins are listed at Storage Classes - Volume Binding Mode.
- volumeBindingMode is an immutable field. Once it has been defined for a storage class it cannot be edited, so a new storage class is needed.
- Once the volume is created and associated with the PVC, adding this flag (volumeBindingMode: WaitForFirstConsumer) has no effect on that volume.
- If the issue appears even with volumeBindingMode: WaitForFirstConsumer, it might be caused by manual scaling, which is not recommended. Please use the autoscaling capabilities of your Kubernetes vendor (for example, see Enabling auto-scaling nodes on GKE). If it does happen, the application data must be migrated out of its previous Availability Zone by creating a manual backup of the previous application, restoring it in the new Availability Zone, and deleting the previous PVC and PV for that application. IMPORTANT: deleting a controller PVC will delete the corresponding controller or Operations Center volume (including its $JENKINS_HOME).
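Because volumeBindingMode cannot be edited on an existing storage class, one possible approach is to create a new class with the field set and then move the default-class annotation over to it. The sketch below assumes hypothetical class names (gp2, gp2-wait) and the AWS EBS provisioner; substitute the names and provisioner used in your cluster. The function names are illustrative. Running kubectl get storageclass beforehand shows the current binding mode of each class and which one is the default.

```shell
# Print a StorageClass manifest that delays binding until a pod uses the PVC.
render_wffc_storage_class() {
  local name="$1" provisioner="$2"
  cat <<EOF
kind: StorageClass
apiVersion: storage.k8s.io/v1
metadata:
  name: ${name}
provisioner: ${provisioner}
volumeBindingMode: WaitForFirstConsumer
EOF
}

# Mark ("true") or unmark ("false") a storage class as the cluster default
# through the storageclass.kubernetes.io/is-default-class annotation.
set_default_storage_class() {
  local name="$1" is_default="$2"
  kubectl patch storageclass "$name" \
    -p "{\"metadata\":{\"annotations\":{\"storageclass.kubernetes.io/is-default-class\":\"${is_default}\"}}}"
}

# Example (hypothetical names):
#   render_wffc_storage_class gp2-wait kubernetes.io/aws-ebs | kubectl apply -f -
#   set_default_storage_class gp2 false
#   set_default_storage_class gp2-wait true
```

Only PVCs created after the switch use the new class; volumes that already exist keep their zone, as stated in the notes above.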

Kubernetes < 1.12

The solution is to upgrade to Kubernetes 1.12 or later.

Workarounds

- Using a single zone environment until access to Kubernetes 1.12 is possible.
- Creating single zone storage classes (one per Availability Zone). The Managed controller can be configured to use a specific storage class.

1) Create Storage Classes

To create a Storage Class and bind it to an availability zone, the zone parameter can be used. Following is a YAML example of a storage class using the AWS EBS provisioner bound to us-east-1a:

    apiVersion: storage.k8s.io/v1
    kind: StorageClass
    metadata:
      name: gp2-1a
      labels:
        k8s-addon: storage-aws.addons.k8s.io
    parameters:
      type: gp2
      zone: us-east-1a
    provisioner: kubernetes.io/aws-ebs

| Different provisioners accept different parameters; more details can be found at Storage Class - Parameters. |
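Since one storage class is needed per Availability Zone, the manifests can be generated in a loop rather than written by hand. This is a minimal sketch modelled on the gp2-1a example above; the function names and the extra zones in the usage comment are assumptions for illustration, and the parameters apply to the AWS EBS provisioner only.

```shell
# Print a single-zone StorageClass manifest for the given Availability Zone.
render_zone_storage_class() {
  local zone="$1"
  local suffix="${zone##*-}"   # keep the text after the last dash: "us-east-1a" gives "1a"
  cat <<EOF
apiVersion: storage.k8s.io/v1
kind: StorageClass
metadata:
  name: gp2-${suffix}
  labels:
    k8s-addon: storage-aws.addons.k8s.io
parameters:
  type: gp2
  zone: ${zone}
provisioner: kubernetes.io/aws-ebs
EOF
}

# Print one manifest per zone, each preceded by a YAML document separator.
render_all_zone_storage_classes() {
  local zone
  for zone in "$@"; do
    echo "---"
    render_zone_storage_class "$zone"
  done
}

# Example (zones are hypothetical):
#   render_all_zone_storage_classes us-east-1a us-east-1b us-east-1c | kubectl apply -f -
```

Each generated class name (gp2-1a, gp2-1b, ...) can then be entered in the "Storage Class Name" field of the controller that should live in that zone.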

2) Configure Managed controllers

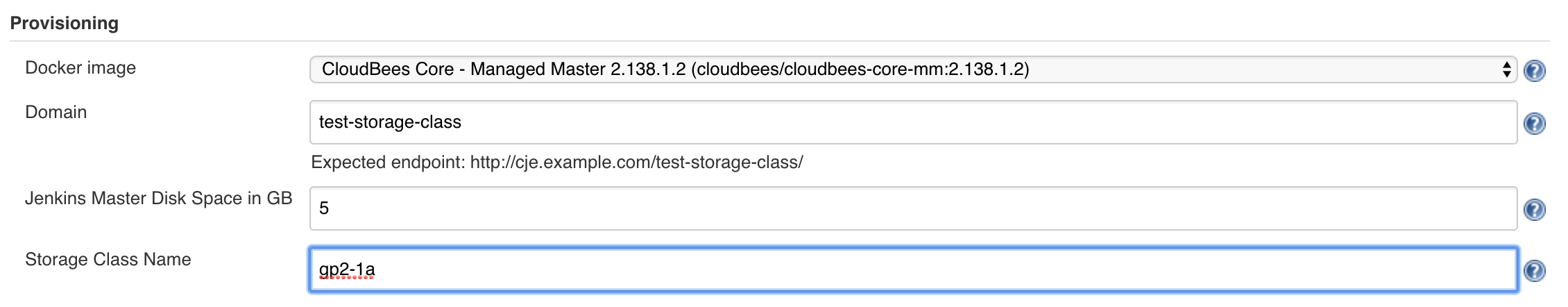

In the Managed controller configuration, set the storage class name in the "Storage Class Name" field:

If a persistent volume claim already exists for the managed controller, it must be deleted to be able to change the storage class. In that case you can follow these steps:

IMPORTANT NOTE: deleting a controller PVC will delete the corresponding controller volume.

1/ Stop the controller from the CJOC UI

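2/ Delete the controller's PVC with kubectl delete pvc <controller-pvc-name> -n build (the PVC name is typically jenkins-home-<controller-pod-name>, as in the Claim field of the kubectl describe pv output shown earlier)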

3/ Set the value of the "Storage Class Name" to use

4/ Start the controller from the CJOC UI
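Because step 2 is destructive, it can help to inspect what the PVC is bound to before deleting it. The following sketch is one way to do that; the function names and the confirmation convention are assumptions made for illustration, while the kubectl calls and the fields read (spec.storageClassName, spec.volumeName, spec.persistentVolumeReclaimPolicy) are standard.

```shell
# Print the storage class, bound volume and reclaim policy behind a PVC.
describe_pvc_binding() {
  local pvc="$1" namespace="$2" class volume policy
  class="$(kubectl get pvc "$pvc" -n "$namespace" -o jsonpath='{.spec.storageClassName}')"
  volume="$(kubectl get pvc "$pvc" -n "$namespace" -o jsonpath='{.spec.volumeName}')"
  policy="$(kubectl get pv "$volume" -o jsonpath='{.spec.persistentVolumeReclaimPolicy}')"
  printf 'pvc=%s storageClass=%s volume=%s reclaimPolicy=%s\n' "$pvc" "$class" "$volume" "$policy"
}

# Delete the PVC only when the caller repeats its name as an explicit confirmation.
delete_pvc_confirmed() {
  local pvc="$1" namespace="$2" confirmation="$3"
  if [ "$confirmation" != "$pvc" ]; then
    echo "refusing to delete $pvc: confirmation does not match" >&2
    return 1
  fi
  kubectl delete pvc "$pvc" -n "$namespace"
}

# Example (hypothetical PVC name, namespace from step 2):
#   describe_pvc_binding jenkins-home-my-controller-0 build
#   delete_pvc_confirmed jenkins-home-my-controller-0 build jenkins-home-my-controller-0
```

A reclaim policy of Delete means the underlying volume is removed together with the PVC, so a backup should exist before step 2 is run.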

| A default storage class for controller provisioning can be set under . This setting applies to newly created controllers only. |