Google Kubernetes Engine (GKE) supports node auto-scaling, which you enable in the GKE console as follows:

- Go to the GKE console.
- Select your cluster.
- Select Edit.
- Under Node Pools, set Autoscaling to on.
- Adjust autoscaling limits by setting Minimum size and Maximum size.

Auto-scaling considerations for GKE

Managed controller and operations center workload

When managed controller and operations center workloads are assigned to a dedicated node pool, you can prevent the autoscaler from scaling down the nodes that host them by restricting eviction of the managed controller and operations center pods. Scale-up still happens normally when more resources are needed to deploy additional managed controllers, but scale-down only happens when a node is free of operations center and managed controller workload. This is usually acceptable because controllers are meant to be stable, long-lived workloads rather than ephemeral ones.

This is achieved by adding the following annotation to operations center and managed controllers:

"cluster-autoscaler.kubernetes.io/safe-to-evict": "false"

For operations center, add the annotation to the CJOC "StatefulSet" definition in cloudbees-core.yml, under "spec - template - metadata - annotations":

    apiVersion: "apps/v1"
    kind: "StatefulSet"
    metadata:
      name: cjoc
      labels:
        com.cloudbees.cje.type: cjoc
        com.cloudbees.cje.tenant: cjoc
    spec:
      serviceName: cjoc
      replicas: 1
      updateStrategy:
        type: RollingUpdate
      template:
        metadata:
          annotations:
            cluster-autoscaler.kubernetes.io/safe-to-evict: "false"

For managed controllers, add the annotation on the controller's configuration page, in the YAML parameter under Advanced Configuration.

The YAML snippet to add would look like:

    apiVersion: apps/v1
    kind: StatefulSet
    spec:
      template:
        metadata:
          annotations:
            cluster-autoscaler.kubernetes.io/safe-to-evict: "false"

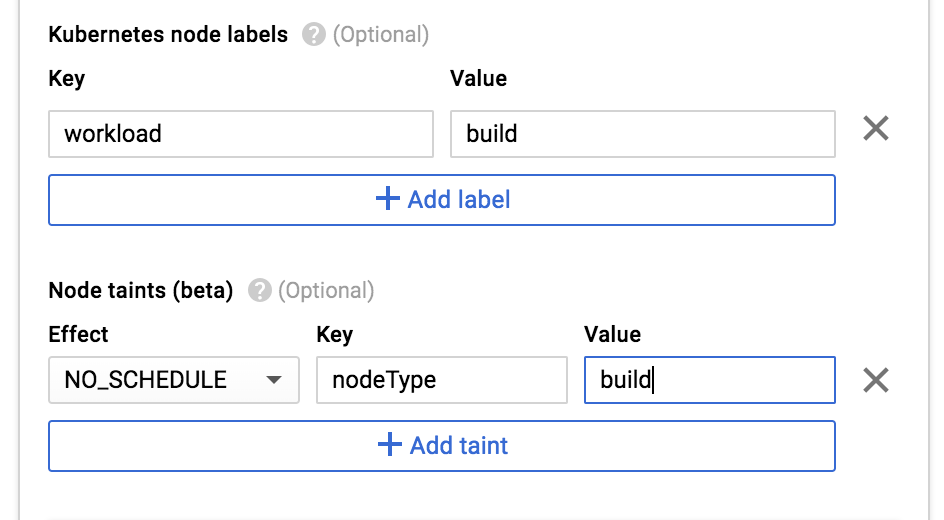
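The text above relies on a dedicated pool for operations center and managed controllers but does not show how pods are pinned to it. One possible approach, sketched below, is a node selector on the label GKE sets on every node with its pool name (`cloud.google.com/gke-nodepool`). The pool name `controllers-pool` is hypothetical, and the sketch assumes the YAML parameter accepts `spec.template.spec` fields the same way it accepts the annotation.

```yaml
apiVersion: apps/v1
kind: StatefulSet
spec:
  template:
    metadata:
      annotations:
        # Prevents the autoscaler from evicting this pod during scale-down
        cluster-autoscaler.kubernetes.io/safe-to-evict: "false"
    spec:
      nodeSelector:
        # Label applied by GKE to nodes; the value is the node pool name.
        # "controllers-pool" is a hypothetical name used for illustration.
        cloud.google.com/gke-nodepool: controllers-pool
```

With both settings in place, controller pods would only land on the dedicated pool, and nodes in that pool would not be removed while a controller is running on them.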

In the Google console, the taint and label can be specified when creating the NodePool:

The first parameter automatically adds the label workload=build to the newly created nodes.

This label is then used as the NodeSelector for the agent.

The second parameter automatically adds the nodeType=build:NoSchedule taint to the nodes.

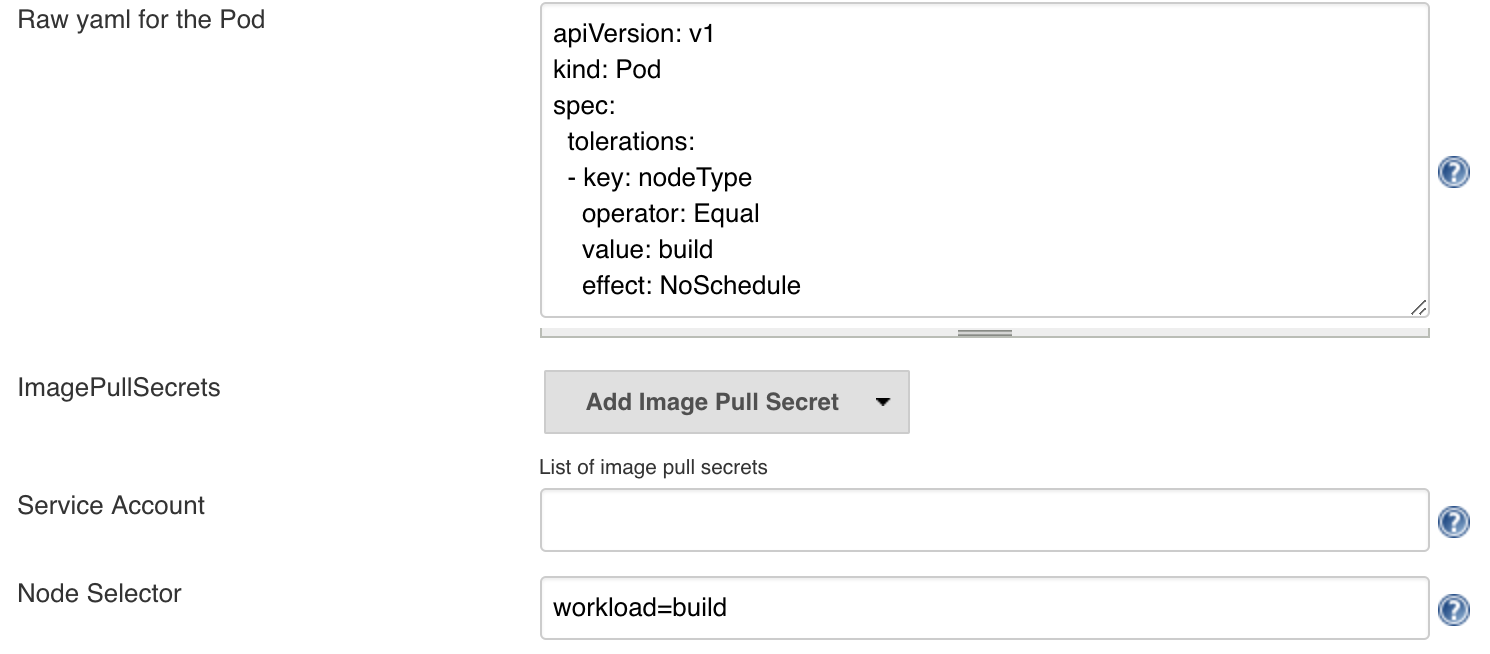
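To check that the pool was created as intended, it can help to know what the label and taint look like on a node. The following is an abbreviated, illustrative sketch of what `kubectl get node <node-name> -o yaml` would be expected to include for a node in this pool; the node name is hypothetical and all other fields are omitted.

```yaml
apiVersion: v1
kind: Node
metadata:
  name: gke-example-build-pool-node   # hypothetical node name
  labels:
    workload: build                   # set by the label parameter
spec:
  taints:
  - key: nodeType                     # set by the taint parameter
    value: build
    effect: NoSchedule
```

Pods without a matching toleration are not scheduled onto a node carrying this taint, which keeps the pool reserved for build agents.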

The agent template must then declare the corresponding toleration so that agent workloads can be scheduled on those nodes.
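As a rough illustration of what the agent pod needs, the sketch below puts both the node selector and the toleration into a single pod definition, assuming the workload=build label and nodeType=build:NoSchedule taint described above. The pod name is hypothetical, and the container simply reuses the maven image from the Pipeline example.

```yaml
apiVersion: v1
kind: Pod
metadata:
  name: example-build-agent   # hypothetical name
spec:
  nodeSelector:
    workload: build           # schedule only on nodes labelled for builds
  tolerations:
  - key: nodeType             # matches the nodeType=build:NoSchedule taint
    operator: Equal
    value: build
    effect: NoSchedule
  containers:
  - name: maven
    image: maven:3.3.9-jdk-8-alpine
    command: ["cat"]
    tty: true
```

The node selector restricts the pod to the build pool, while the toleration is what allows it past the taint; either one alone is not enough to dedicate the pool to agents.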

For Pipelines, the toleration can be added to podTemplate using the yaml parameter as follows:

    def label = "mypodtemplate-${UUID.randomUUID().toString()}"
    def nodeSelector = "workload=build"
    podTemplate(label: label, yaml: """
    apiVersion: v1
    kind: Pod
    spec:
      tolerations:
      - key: nodeType
        operator: Equal
        value: build
        effect: NoSchedule
    """, nodeSelector: nodeSelector, containers: [
      containerTemplate(name: 'maven', image: 'maven:3.3.9-jdk-8-alpine', ttyEnabled: true, command: 'cat')
    ]) {
      node(label) {
        stage('Run maven') {
          container('maven') {
            sh 'mvn --version'
          }
        }
      }
    }