Amazon Simple Storage Service (Amazon S3) is storage for the internet. It is designed to make web-scale computing easier for developers.

Amazon S3 has a simple web-services interface where you can store and retrieve any amount of data, at any time, from anywhere on the web. It gives developers access to the same highly scalable, reliable, fast, inexpensive data storage infrastructure that Amazon uses to run its own global network of websites. The goal is to maximize benefits of scale and pass those benefits on to developers. For more information about Amazon S3, go to the Amazon Web Services (AWS) website.

The S3 plugin uses the Amazon S3 application programming interface (API), which allows developers to choose where their objects are stored on the Amazon cloud. Using this secure API, your application is automatically scaled up and down as needed. This integration allows CloudBees CD/RO to manage S3 buckets and objects.

The Amazon Simple Storage Service plugin interacts with Amazon S3 data by using the AWS SDK for Java to perform the following tasks:

- Create configurations with connection information.
- Create buckets and folders.
- Store objects in existing buckets and folders.
- Download objects or entire folders.
- List all the buckets and folders.

Prerequisites

This plugin uses an updated version of Perl, the cb-perl shell (Perl v5.32), and requires CloudBees CD/RO agents version 10.3 or later.

Plugin Version 1.2.3.2024121677

Revised on February 23, 2024

This plugin was developed and tested against Amazon Simple Storage Service (Amazon S3).

Create Amazon Simple Storage Service plugin configurations

Plugin configurations are sets of parameters that can be applied across some, or all, plugin procedures. They can reduce the repetition of common values, create predefined parameter sets, and securely store credentials. Each configuration is given a unique name that is entered in the designated parameter for the plugin procedures that use them. The following steps illustrate how to create a plugin configuration that can be used by one or more plugin procedures.

To create a plugin configuration:

- Navigate to .
- Select Add plugin configuration to create a new configuration.
- In the New Configuration window, specify a Name for the configuration.
- Select the Project that the configuration belongs to.
- Optionally, add a Description for the configuration.
- Select the appropriate Plugin for the configuration.
- Configure the plugin configuration parameters.
- Select OK.

Depending on your plugin configuration and how you run procedures, the field may behave differently in the CloudBees CD/RO UI. For more information, refer to Differences in plugin UI behavior.

Amazon Simple Storage Service plugin configuration parameters

| Parameter | Description |
|---|---|
| Configuration Name | Name of the Amazon S3 configuration. The default is |
| Description | A description for this configuration. |
| Service URL | Required. The service URL for the Amazon S3 service. For the Amazon public S3, this should be |
| Resource Pool | Required. The name of the pool of resources on which the integration steps can run. |
| Workspace | Required. The workspace to use for resources dynamically created by this configuration. |
| Access IDs (Credential Parameters) | Required. The two access IDs that are required for communicating with Amazon S3 (Access ID and Secret Access ID). The configuration stores these as a credential, putting the Access ID in the user field of the credential and the Secret Access ID in the password field of the credential. |
| Attempt Connection? | Required. If selected, the system attempts a connection to check credentials. |
| Debug Level | Required. Provide the debug level for the output: |

Create Amazon Simple Storage Service plugin procedures

Plugin procedures can be used in procedure steps, process steps, and pipeline tasks, allowing you to orchestrate third-party tools at the appropriate time in your component, application process, or pipeline.

Depending on your plugin configuration and how you run procedures, the field may behave differently in the CloudBees CD/RO UI. For more information, refer to Differences in plugin UI behavior.

CreateBucket

Creates an Amazon S3 bucket.

A bucket is a container for objects stored in Amazon S3. To ensure a single, consistent, naming approach for Amazon S3 buckets across regions, and to ensure bucket names conform to DNS naming conventions, bucket names must comply with the following requirements. Bucket names:
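The individual naming requirements are not listed here. As a rough illustration, the following Python sketch checks a name against a few rules commonly documented by AWS for general purpose buckets: a length of 3 to 63 characters; only lowercase letters, digits, dots, and hyphens; a letter or digit at the start and end; no consecutive dots; and no IP-address-like form. These rules and the function name `is_plausible_bucket_name` are assumptions for illustration. They are not part of the plugin, and the list is not exhaustive.

```python
import re

# Assumed rules, taken from general AWS guidance rather than from this page.
# Allowed characters: lowercase letters, digits, dots, hyphens;
# the name must start and end with a letter or digit.
_ALLOWED = re.compile(r"[a-z0-9][a-z0-9.-]*[a-z0-9]")
# Names shaped like an IPv4 address (for example 192.168.5.4) are rejected.
_IP_LIKE = re.compile(r"\d{1,3}(\.\d{1,3}){3}")


def is_plausible_bucket_name(name: str) -> bool:
    """Return True if the name passes the assumed subset of naming rules."""
    if not 3 <= len(name) <= 63:
        return False
    if _ALLOWED.fullmatch(name) is None:
        return False
    if ".." in name:
        return False
    if _IP_LIKE.fullmatch(name) is not None:
        return False
    return True
```

A check like this could run before the CreateBucket procedure is invoked, so that an obviously invalid name fails early. Amazon S3 remains the authority on whether a name is accepted.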

CreateFolder

Creates nested folders within the specified bucket. Folders help to organize the Amazon S3 objects.

CreateFolder input parameters

| Parameter | Description |
|---|---|
| Configuration | Required. The name of the configuration that holds all the connection information. This must reference a valid existing configuration. |
| Bucket Name | Required. Name of the bucket in which to create the folder. |
| Folder Name | Required. Name of the folder to create. |

DeleteBucketContents

Deletes the contents of the specified bucket.

DeleteBucketContents input parameters

| Parameter | Description |
|---|---|
| Configuration | Required. The name of the configuration that holds all the connection information. This must reference a valid existing configuration. |
| Bucket Name | Required. Name of the bucket from which to clear the contents. |

DeleteObject

Deletes the specified Amazon S3 object from a bucket or folder.

DeleteObject input parameters

| Parameter | Description |
|---|---|
| Configuration | Required. The name of the configuration that holds all the connection information. This must reference a valid existing configuration. |
| Bucket Name | Required. Name of the bucket where the object is stored. |
| Key | Required. Key of the object to delete. |

DownloadFolder

Downloads the contents of the specified folder to the local filesystem. After the folder is successfully downloaded, CloudBees CD/RO stores the key names and download paths of the objects in the property sheet. The default location is /myJob/S3Output.

DownloadFolder input parameters

| Parameter | Description |
|---|---|
| Configuration | Required. The name of the configuration that holds all the connection information. This must reference a valid existing configuration. |
| Bucket Name | Required. Name of the bucket where the folder is stored. |
| Key Prefix - Folder | Key prefix of the folder to download. |
| Download Location | Required. Path of the download location (for example, |

DownloadObject

Downloads the Amazon S3 object specified by the key to the local file system. CloudBees CD/RO stores the key names and the download locations of the objects in the property sheet. The default location is /myJob/S3Output.

DownloadObject input parameters

| Parameter | Description |
|---|---|
| Configuration | Required. The name of the configuration that holds all the connection information. This must reference a valid existing configuration. |
| Bucket Name | Required. Name of the bucket where the object is stored. |
| Key | Key of the object to download. |
| Download Location | Required. Path of the download location (for example, |

ListBucket

Lists all buckets. CloudBees CD/RO stores the list of buckets in the property sheet. The default location is /myJob/S3Output.

ListFolder

Lists the contents of the folders, either recursively or non-recursively. CloudBees CD/RO stores the list of all the objects in the folder in the property sheet. The default location is /myJob/S3Output.

ListFolder input parameters

| Parameter | Description |
|---|---|
| Configuration | Required. The name of the configuration that holds all the connection information. This must reference a valid existing configuration. |
| Bucket Name | Required. Name of the bucket in which to list the folders. |
| Folder Name | Name of the folder or prefix to include in the list. |
| List Objects in this folder or Include all subfolders? | If selected, all objects in this folder and all subfolders are included in the list. |
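The difference between a recursive and a non-recursive listing can be illustrated on a plain list of object keys. The Python sketch below is only an assumption about how prefix-based filtering might behave. The function `list_folder` and its parameters are hypothetical and do not describe the plugin's actual implementation.

```python
from typing import Iterable, List


def list_folder(keys: Iterable[str], folder: str, include_subfolders: bool) -> List[str]:
    """Filter object keys the way a folder listing might (illustrative only).

    keys               -- all object keys in the bucket (hypothetical input)
    folder             -- folder name or key prefix; empty means the bucket root
    include_subfolders -- True for a recursive listing
    """
    # Normalize the folder into a key prefix that ends with "/".
    prefix = folder.rstrip("/") + "/" if folder else ""
    result = []
    for key in keys:
        if not key.startswith(prefix):
            continue
        remainder = key[len(prefix):]
        if not remainder:
            continue  # skip the marker object for the folder itself
        # Direct children have no further "/" once a trailing slash is ignored.
        is_direct_child = "/" not in remainder.rstrip("/")
        if include_subfolders or is_direct_child:
            result.append(key)
    return result
```

With the keys `site/index.html`, `site/css/`, and `site/css/main.css`, a non-recursive listing of `site` in this sketch returns only the first two, while a recursive listing returns all three.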

UploadFolder

Uploads the specified local file system folder to the Amazon S3 service. After a folder is successfully uploaded, CloudBees CD/RO stores the key names and AWS access URLs for the objects in this folder in the property sheet. The default location is /myJob/S3Output.

UploadFolder input parameters

| Parameter | Description |
|---|---|
| Configuration | Required. The name of the configuration that holds all the connection information. This must reference a valid existing configuration. |
| Bucket Name | Required. Name of the bucket to which the folder is uploaded. |
| Key | The key prefix of the virtual directory to which the folder is uploaded. Leave this field empty to upload files to the root of the bucket. |
| Folder to Upload | Required. Name of the folder to upload (for example, |
| Make the object public | If selected, the uploaded object is publicly accessible. |
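To show how a key prefix relates to the uploaded files, the Python sketch below maps each file in a local folder to the object key it might receive. It assumes that keys are formed from the optional prefix plus the path of the file relative to the folder. The function `plan_upload` is hypothetical and does not call Amazon S3 or reflect the plugin's internals.

```python
from pathlib import Path
from typing import Dict


def plan_upload(folder: str, key_prefix: str = "") -> Dict[str, str]:
    """Map assumed object keys to local file paths for a folder upload.

    An empty key_prefix places the files at the root of the bucket.
    """
    root = Path(folder)
    prefix = key_prefix.strip("/")
    plan = {}
    for path in sorted(root.rglob("*")):
        if not path.is_file():
            continue  # directories have no content of their own to upload
        # Use forward slashes in keys regardless of the local platform.
        relative = path.relative_to(root).as_posix()
        key = f"{prefix}/{relative}" if prefix else relative
        plan[key] = str(path)
    return plan
```

Under these assumptions, a folder containing `index.html` and `css/main.css` with the prefix `site` yields the keys `site/index.html` and `site/css/main.css`.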

UploadObject

Uploads the specified local file to the Amazon S3 service. After an object is successfully uploaded, CloudBees CD/RO stores the key name and AWS link to the object in the property sheet. The default location is /myJob/S3Output.

UploadObject input parameters

| Parameter | Description |
|---|---|
| Configuration | Required. The name of the configuration that holds all the connection information. This must reference a valid existing configuration. |
| Bucket Name | Required. Name of the bucket to which the object will be uploaded. |
| Key | Required. Key of the object to upload. This value is used as the key for the object that is uploaded. |
| File to Upload | Required. Path for file to upload (for example, |
| Make the object public | If selected, the uploaded object is publicly accessible. |

WebsiteHosting

You can use Amazon S3 to host a website that uses client-side technologies (such as HTML, CSS, and JavaScript) and does not require server-side technologies (such as PHP and ASP.NET). This is called a static website and is used to display content that does not change frequently.

To host your static website, use this procedure to configure an Amazon S3 bucket for website hosting. It is then available at the region-specific website endpoint of the bucket: <bucket-name>.s3-website-<AWS-region>.amazonaws.com.

After the bucket is successfully configured for static website hosting, CloudBees CD/RO stores the bucket name as a key and the Amazon S3 website endpoint for your bucket as a value in the property sheet. The default location is /myJob/S3Output.
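As a small illustration of the endpoint format and the key/value pair described above, the Python sketch below builds the endpoint string from a bucket name and region. The function names and the region `us-east-1` are assumptions chosen for the example. Only the endpoint pattern itself comes from this page.

```python
from typing import Dict


def website_endpoint(bucket_name: str, region: str) -> str:
    """Build the endpoint using the pattern stated in this document:
    <bucket-name>.s3-website-<AWS-region>.amazonaws.com
    """
    return f"{bucket_name}.s3-website-{region}.amazonaws.com"


def output_entry(bucket_name: str, region: str) -> Dict[str, str]:
    """Model the stored result: bucket name as key, endpoint as value."""
    return {bucket_name: website_endpoint(bucket_name, region)}


if __name__ == "__main__":
    # Hypothetical bucket and region, for illustration only.
    print(output_entry("ecwebsitehosting", "us-east-1"))
```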

WebsiteHosting input parameters

| Parameter | Description |
|---|---|
| Configuration | The name of the configuration that has all the connection information. This must refer to a valid existing configuration. |
| Bucket Name | Name of the bucket to create. |
| Enable website hosting | If selected, after you enable your bucket for static website hosting, your content is accessible to web browsers through the Amazon S3 endpoint for your bucket. |
| Index Document | Name of the index document. |
| Error Document | Name of the error document. |

Amazon Simple Storage Service plugin use cases

A common use case for this plugin is hosting a publicly accessible website. To achieve this, create a bucket on Amazon S3, upload the website contents to that bucket, and configure the bucket for website hosting. To do this, you must:

-

Configure the CreateBucket procedure to create a bucket on Amazon S3.

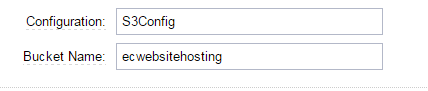

Figure 1. CreateBucket parameters

Figure 1. CreateBucket parameters -

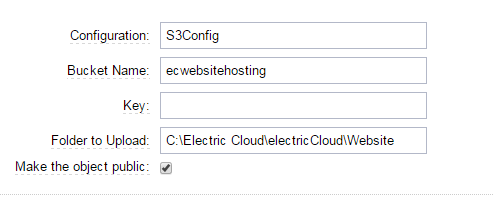

Configure the UploadFolder procedure to upload the contents of the folder to the bucket.

Figure 2. UploadFolder parameters

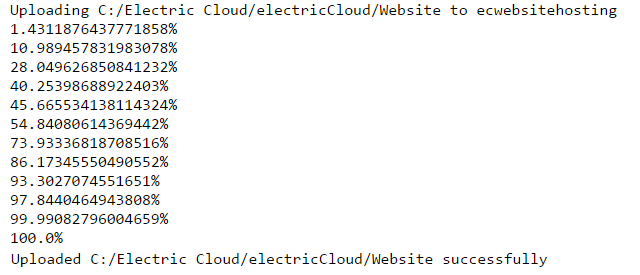

Figure 2. UploadFolder parametersWhen this procedure runs, the contents of the

C:\Electric Cloud\electricCloud\Websitedirectory are uploaded to theecwebsitehostingbucket. -

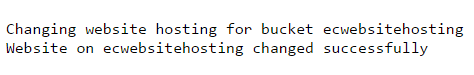

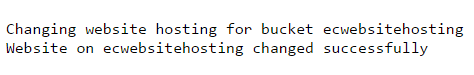

Use the WebsiteHosting procedure to configure the bucket for website hosting.

Figure 3. WebsiteHosting parameters

Figure 3. WebsiteHosting parameters -

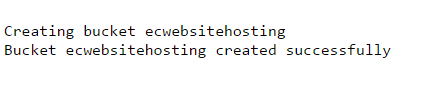

Run the procedures. The following output appears during the procedures:

Figure 4. CreateBucket output

Figure 4. CreateBucket output Figure 5. UploadFolder output

Figure 5. UploadFolder output Figure 6. WebsiteHosting output

Figure 6. WebsiteHosting output

Amazon Simple Storage Service plugin release notes

1.2.2

- Fixed issue where the plugin configuration Service URL parameter was not applied to procedures.

1.2.1

- Improved SSL/TLS certificate validation to ensure that SSL/TLS validation is properly disabled when the Ignore SSL issues parameter is selected.

1.2.0

- Added support for a new plugin configuration.
- Upgraded from Perl 5.8 to Perl 5.32.
- Starting with EC-S3 1.2.0, CloudBees CD/RO agents running v10.3 and later are required to run plugin procedures.
- Removed CGI scripts.

1.1.4

- Fixed the following Java error: `java.lang.NoClassDefFoundError: javax/xml/bind/DatatypeConverter`.

1.0.0

- Added support to create new buckets and folders in buckets.
- Added support to clean the bucket contents.
- Added support to delete specific objects in a bucket or folder.
- Added support to upload or download objects or the entire content of the bucket or folder.
- Added support to list buckets and folders.