How to configure CloudBees CD/RO for very large Helm deployments

A very large Helm deployment of CloudBees CD/RO is one that handles approximately:

- 100K jobs per day
- 2000 running pipelines per day
- 5M job steps per day

Active customers and partners can refer to the CloudBees CD/RO Level 2: Helm for Very Large Kubernetes Deployments training course for details.

The following tables summarize the changes to the default Helm chart for very large deployments:

CloudBees CD/RO server values

For the default CloudBees CD/RO Helm charts, refer to CloudBees CD/RO server values. You can find these cloudbees-flow values referenced in the values.yaml under the Flow server configuration section.

| Name | Value |
|---|---|
| server.replicas | 4 |
| server.ecconfigure | "--serverInitMemory=70 --serverMaxMemory=85" |
| server.resources.limits.cpu | 16 |
| server.resources.limits.memory | 128Gi |
| server.resources.requests.cpu | 4 |
| server.resources.requests.memory | 16Gi |

CloudBees CD/RO web server values

For the default CloudBees CD/RO Helm charts, refer to CloudBees CD/RO web server values. You can find these cloudbees-flow values referenced in the values.yaml under the Flow web server configuration section.

| Name | Value |
|---|---|
| web.replicas | 2 |

Repository values

For the default CloudBees CD/RO Helm charts, refer to Repository values. You can find these cloudbees-flow values referenced in the values.yaml under the Flow repository configuration section.

| Name | Value |
|---|---|
| repository.resources.requests.memory | 768Mi |
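The server, web server, and repository overrides above use Helm's dot notation, and in a values file each dot becomes one nesting level. The following minimal sketch is not an official CloudBees file. It writes these overrides to a separate values file, and the file name very-large-values.yaml is hypothetical. The analytics and agent values are left out because this section does not show their values.

```shell
#!/usr/bin/env bash
# Write the very large deployment overrides for the server, web server,
# and repository to a separate values file (hypothetical file name).
set -euo pipefail

cat > very-large-values.yaml <<'EOF'
server:
  replicas: 4
  ecconfigure: "--serverInitMemory=70 --serverMaxMemory=85"
  resources:
    limits:
      cpu: 16
      memory: 128Gi
    requests:
      cpu: 4
      memory: 16Gi
web:
  replicas: 2
repository:
  resources:
    requests:
      memory: 768Mi
EOF

echo "Wrote very-large-values.yaml"
```

You could then pass this file after your own values file, for example `helm upgrade <release> cloudbees/cloudbees-flow -f myvalues.yaml -f very-large-values.yaml --namespace <namespace>`. When you give several `-f` files, Helm gives precedence to the last one.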

CloudBees Analytics server values

For the default CloudBees CD/RO Helm charts, refer to CloudBees Analytics server values. You can find these cloudbees-flow values referenced in the values.yaml under the Analytics server configuration section.

| Name | Value |
|---|---|
| analytics.replicas | |
| analytics.esMinMasterNodes | |
| analytics.heapSize | |
| analytics.resources.limits.cpu | |
| analytics.resources.limits.memory | |
| analytics.resources.requests.cpu | |
| analytics.resources.requests.memory | |

CloudBees CD/RO agent values

For the default CloudBees CD/RO Helm charts, refer to CloudBees CD/RO agent configuration values. You can find these cloudbees-flow-agent values referenced in the agent values.yaml.

| Name | Value |
|---|---|
| replicas | |

How to set global values in CloudBees CD/RO Helm charts

Starting with v2023.10.0, you can configure global values for image settings to use for all CloudBees CD/RO components. In v2023.10.0 and later cloudbees-flow values files, you can find these configurations by searching for the Global configurations section.

When you set a global image, it is applied to the following components:

- server
- web
- repository
- analytics
- analytics.backup
- boundAgent
- zookeeper
- internalGatewayAgent
- externalGatewayAgent

This option improves usability and enables you to set and update chart values for these components in a single location, instead of for each component individually. However, this setting is optional, and you can still configure CloudBees CD/RO components individually to pull specific images.

The following sections do not apply values from the global section and must be individually configured:

For information on setting custom images, refer to How to configure custom images for CloudBees CD/RO.

Setting global chart values

Setting global values for image settings is an optional configuration for CloudBees CD/RO v2023.10.0 and later, meant to improve usability and maintainability of your Helm charts. Global chart values allow you to set a value in one location that, where applicable, is applied to all CloudBees CD/RO components.

Global-level chart values override component-level chart values. This means that if you set a value at both the global level and the component level, the global value is used. Before setting any global-level value, CloudBees strongly suggests ensuring the global value is valid and correct for all instances where it will be applied.

The following is an example of setting a global image value:

- In your v2023.10.0 and later values file, navigate to the Global configurations section.

- Provide values for:
  - global.cloudbees.imageRegistry
  - global.cloudbees.imageTag
  - (OPTIONAL) global.cloudbees.imagePullSecrets
    You must enable imagePullSecrets if you are using a private registry. Secrets must be manually created or already exist in the namespace.

- (OPTIONAL) For CloudBees CD/RO component values that have a global.<value> set, remove the <component>.<value>. Global-level configurations override component-level configurations. However, from a troubleshooting point of view, having two different values configured in different locations in your values file may cause confusion. Because of this, CloudBees suggests removing the component-level configuration for values already set at the global level.

You are now able to update the global image to use for all CloudBees CD/RO components in a single location in your values file.
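The following script sketches the steps above. It writes a Global configurations section to a separate values file. The registry and tag are hypothetical placeholders, and the file name global-values.yaml is an assumption. imagePullSecrets is left out because its expected format is not shown here, so check the Global configurations section of your own values file before adding it.

```shell
#!/usr/bin/env bash
# Sketch: generate a global image section for CloudBees CD/RO v2023.10.0 and later.
# REGISTRY and TAG default to hypothetical placeholders; override them from
# the environment, for example: REGISTRY=my.registry TAG=1.2.3 ./global.sh
set -euo pipefail

REGISTRY="${REGISTRY:-registry.example.com/cloudbees}"
TAG="${TAG:-<YOUR-IMAGE-TAG>}"

cat > global-values.yaml <<EOF
global:
  cloudbees:
    imageRegistry: "${REGISTRY}"
    imageTag: "${TAG}"
EOF

cat global-values.yaml
```

If you use a private registry, the pull secret must already exist in the namespace. One standard way to create it is `kubectl create secret docker-registry <secret-name> --docker-server=<registry> --docker-username=<user> --docker-password=<password> --namespace <namespace>`.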

How to configure custom resources for init job

If using CloudBees CD/RO v2024.06.0 or later, you can configure the resources allocated to the CloudBees CD/RO init job within your values file using jobInit.resources. To get started:

- If you do not already have a v2024.06.0 or later values file, update your existing values file with the following jobInit fields:

    jobInit:
      resources:
        limits:
          cpu: 4
          memory: 6Gi
        requests:
          cpu: 2
          memory: 6Gi

- Set your custom values for the fields.
  The default values are the minimum suggested values. For some environments these settings may not be sufficient and may cause the init job to fail. If this occurs, increase the default settings for your environment, and run the installation or upgrade again.

- Ensure the YAML is valid, and save your changes.

- Deploy the updated chart to your environment using your helm upgrade command.

The CloudBees CD/RO init job will now be allocated the configured resources.
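Here is a minimal sketch of the steps above, assuming you keep the override in its own file (jobinit-values.yaml is a hypothetical name). The defaults are the minimum suggested values from this section. The environment variables INIT_CPU_LIMIT, INIT_CPU_REQUEST, and INIT_MEMORY are illustrative knobs for raising those values if the init job fails.

```shell
#!/usr/bin/env bash
# Sketch: write jobInit resource settings to a separate values file.
# Defaults match the minimum suggested values; raise them (for example,
# INIT_MEMORY=8Gi) if the init job fails in your environment.
set -euo pipefail

INIT_CPU_LIMIT="${INIT_CPU_LIMIT:-4}"
INIT_CPU_REQUEST="${INIT_CPU_REQUEST:-2}"
INIT_MEMORY="${INIT_MEMORY:-6Gi}"

cat > jobinit-values.yaml <<EOF
jobInit:
  resources:
    limits:
      cpu: ${INIT_CPU_LIMIT}
      memory: ${INIT_MEMORY}
    requests:
      cpu: ${INIT_CPU_REQUEST}
      memory: ${INIT_MEMORY}
EOF

cat jobinit-values.yaml
```

Pass the file to your `helm upgrade` command with an additional `-f jobinit-values.yaml`, placed after your main values file.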

How to configure custom images for CloudBees CD/RO

If you need to use custom images for individual components in your project, you can configure the image information in your myvalues.yaml. The following sections describe how to set custom images for:

- Bound agents
- ingress-nginx
- CloudBees sidecar injector
- MariaDB
- ZooKeeper

If you set a Before configuring custom images for bound agents, ensure you do not have images configured for

Configure custom bound agent images

This section is intended for use with v2023.10.0 and later Helm charts. For CloudBees CD/RO Helm charts v2023.08.0 and earlier, the boundAgent included image configuration fields in the Helm chart by default.


Add a custom boundAgent image to Helm chart

In CloudBees CD/RO v2023.10.0 and above Helm charts, global image configurations were implemented in the cloudbees-flow values file, values.global.cloudbees. As part of this change, bound agents were reconfigured to use the cloudbees-flow-agent.values.image values. Additionally, the boundAgent.imageRegistry values were removed from the cloudbees-flow values file.

If your project uses custom images for bound agents, you must make the following changes to CloudBees CD/RO v2023.10.0 and above Helm charts before starting the upgrade:

- In your cloudbees-flow myvalues.yaml, ensure no values.global.cloudbees image value is set.
  If you set both a values.global.cloudbees image value and any component-level image values in your myvalues.yaml, the values.global.cloudbees image value overrides all component-level image values.

- In the boundAgent section of your cloudbees-flow values file, add the following chart configuration:

    images:
      ## Image registry to pull CloudBees CD/RO boundAgent images from.
      ## Example: registry: "123456789012.dkr.ecr.us-east-1.amazonaws.com"
      registry: "<YOUR-IMAGE-REGISTRY>"
      ## The `imageRepository` in the `images.registry` to pull the bound agent image from.
      imageRepository: "<YOUR-IMAGE-REPO>"
      ## CloudBees CD/RO bound agent image tag to pull.
      tag: "<YOUR-IMAGE-TAG>"

  For example, if your project uses an internal registry called internal.registry.example/cloudbees:

    images:
      ## Image registry to pull CloudBees CD/RO boundAgent images from.
      ## Example: registry: "123456789012.dkr.ecr.us-east-1.amazonaws.com"
      registry: "internal.registry.example/cloudbees"
      ## The `imageRepository` in the `images.registry` to pull the bound agent image from.
      imageRepository: "cbflow-boundagent"
      ## CloudBees CD/RO bound agent image tag to pull.
      tag: "1.0"

You can now upgrade to CloudBees CD/RO v2023.10.0 and above using your custom bound agent image.

Configure custom ingress-nginx images

Add a custom ingress-nginx image to Helm chart

If your project uses custom images for ingress-nginx, you must make the following image changes to the CloudBees CD/RO Helm charts in your myvalues.yaml before starting the upgrade.

In the ingress-nginx section of your cloudbees-flow values file, add the following chart configuration:

    controller:
      image:
        registry: <YOUR-IMAGE-REGISTRY>
        image: <YOUR-IMAGE>
        ## For backward compatibility, consider setting the full image URL via the repository value below.
        ## Use *either* the current default registry/image or the repository format. Otherwise, installing the chart by providing the `values.yaml` will fail.
        # repository:
        tag: "<YOUR-IMAGE-TAG>"
        digest: <YOUR-DIGEST-HASH>
      admissionWebhooks:
        patch:
          image:
            registry: <YOUR-IMAGE-REGISTRY>
            image: <YOUR-IMAGE>
            ## For backward compatibility, consider setting the full image URL via the repository value below.
            ## Use *either* the current default registry/image or the repository format. Otherwise, installing the chart by providing the `values.yaml` will fail.
            # repository:
            tag: "<YOUR-IMAGE-TAG>"
            digest: <YOUR-DIGEST-HASH>
    ## Set images if enabled
    defaultBackend:
      enabled: false
      image:
        registry: <YOUR-IMAGE-REGISTRY>
        image: <YOUR-IMAGE>
        ## For backward compatibility, consider setting the full image URL via the repository value below.
        ## Use *either* the current default registry/image or the repository format. Otherwise, installing the chart by providing the `values.yaml` will fail.
        # repository:
        tag: "<YOUR-IMAGE-TAG>"

For example, if your project uses an internal registry called internal.registry.example/ingress-nginx:

    controller:
      image:
        registry: internal.registry.example/ingress-nginx
        image: controller
        ## For backward compatibility, consider setting the full image URL via the repository value below.
        ## Use *either* the current default registry/image or the repository format. Otherwise, installing the chart by providing the `values.yaml` will fail.
        # repository:
        tag: "v1.1.0"
        digest: sha256:f766669fdcf3dc26347ed273a55e754b427eb4411ee075a53f30718b4499076a
      admissionWebhooks:
        patch:
          image:
            registry: internal.registry.example/ingress-nginx
            image: kube-webhook-certgen
            ## For backward compatibility, consider setting the full image URL via the repository value below.
            ## Use *either* the current default registry/image or the repository format. Otherwise, installing the chart by providing the `values.yaml` will fail.
            # repository:
            tag: "v1.1.1"
            digest: sha256:64d8c73dca984af206adf9d6d7e46aa550362b1d7a01f3a0a91b20cc67868660
    ## Set images if enabled
    defaultBackend:
      enabled: false
      image:
        registry: internal.registry.example/ingress-nginx
        image: defaultbackend-amd64
        ## For backward compatibility, consider setting the full image URL via the repository value below.
        ## Use *either* the current default registry/image or the repository format. Otherwise, installing the chart by providing the `values.yaml` will fail.
        # repository:
        tag: "1.5"

You can now upgrade CloudBees CD/RO to use your custom ingress-nginx image.

Configure custom CloudBees sidecar injector images

Add a custom CloudBees sidecar injector image to Helm chart

If your project uses custom images for a CloudBees sidecar injector, you must make the following image changes to the CloudBees CD/RO Helm charts in your myvalues.yaml before starting the upgrade.

In the CloudBees sidecar injector (cdsidecarinjector) section of your cloudbees-flow values file, add the following chart configuration:

    enabled: true
    hub: <YOUR-IMAGE-REGISTRY>
    image: <YOUR-IMAGE>
    tag: "<YOUR-IMAGE-TAG>"
    requestCert:
      image: <YOUR-IMAGE>
      tag: "<YOUR-IMAGE-TAG>"

For example, if your project uses an internal registry called internal.registry.example/cloudbees:

    enabled: true
    hub: internal.registry.example/cloudbees
    image: test-sidecar-injector
    tag: "2.2.1"
    requestCert:
      image: test-cert-requester
      tag: "2.2.1"

You can now upgrade CloudBees CD/RO to use your custom cloudbees-sidecar-injector image.

Configure custom MariaDB images

Add a custom MariaDB image to Helm chart

If your project uses custom images for MariaDB, you must make the following image changes to the CloudBees CD/RO Helm charts in your myvalues.yaml before starting the upgrade.

In the mariadb section of your cloudbees-flow values file, add the following chart configuration:

    image:
      registry: <YOUR-IMAGE-REGISTRY>
      repository: <YOUR-IMAGE-REPO>
      tag: <YOUR-IMAGE-TAG>

For example, if your project uses an internal registry called internal.registry.example/cloudbees:

    image:
      registry: internal.registry.example/cloudbees
      repository: mariadb
      tag: 10.3.20-debian-9-r0

You can now upgrade CloudBees CD/RO to use your custom MariaDB image.

Configure custom ZooKeeper images

Add a custom ZooKeeper image to Helm chart with a global image set

If your project uses custom images for ZooKeeper and has global values set, you must make the following image changes to the CloudBees CD/RO Helm charts in your myvalues.yaml before starting the upgrade:

- In your cloudbees-flow myvalues.yaml, ensure the values.global.cloudbees.imageRegistry and values.global.cloudbees.imageTag image values are set.
  Ensure your ZooKeeper image is located in the values.global.cloudbees.imageRegistry and is tagged with the values.global.cloudbees.imageTag. If it is not, your ZooKeeper image will fail to load. If your project is not using these values, refer to Add a custom ZooKeeper image to Helm chart with no global image set.

- In the zookeeper section of your cloudbees-flow values file, add the following chart configuration:

    image:
      imageRepository: <YOUR-IMAGE-REPO>

  For example, if your project uses a global registry called global-images-registry.example with image tags set to global-image-tag, and your ZooKeeper image is in the zookeeper-image repository, your values file should have the following configurations:

    global:
      cloudbees:
        imageRegistry: global-images-registry.example
        imageTag: global-image-tag
    ....
    zookeeper:
      image:
        imageRepository: zookeeper-image

You can now upgrade CloudBees CD/RO to use your custom ZooKeeper image.

Add a custom ZooKeeper image to Helm chart with no global image set

If your project uses custom images for ZooKeeper and has no global image values set, you must make the following image changes to the CloudBees CD/RO Helm charts in your myvalues.yaml before starting the upgrade:

- In your cloudbees-flow myvalues.yaml, ensure no values.global.cloudbees image value is set.
  If you have values.global.cloudbees.imageRegistry and values.global.cloudbees.imageTag set, your ZooKeeper image will fail to load using the following configuration. If you are using these values, refer to Add a custom ZooKeeper image to Helm chart with a global image set.

- In the zookeeper section of your cloudbees-flow values file, add the following chart configuration:

    image:
      repository: <YOUR-IMAGE-REGISTRY>/<YOUR-IMAGE-REPO>
      tag: "<YOUR-IMAGE-TAG>"

  For example, if your project uses an internal registry called internal.registry.example/ with an image repository called zookeeper-image:

    image:
      ## Container repository to pull ZooKeeper image from.
      repository: internal.registry.example/zookeeper-image
      ## ZooKeeper image tag to pull.
      tag: "2023.08.0.167214_3.2.51_20230809"

You can now upgrade CloudBees CD/RO to use your custom ZooKeeper image.
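Which bound agent and ZooKeeper configuration applies depends on whether your values file sets global.cloudbees image values. The following rough helper checks this, but it is not a CloudBees tool. It assumes two-space YAML indentation and treats an empty string as unset, so confirm the result by reading your values file. The function name global_image_status is hypothetical.

```shell
#!/usr/bin/env bash
# Heuristic check: does a values file set global.cloudbees.imageRegistry or
# global.cloudbees.imageTag? Assumes two-space indentation; not a YAML parser.
set -euo pipefail

global_image_status() {
  awk '
    # A top-level key starts a new section; remember whether it is global.
    /^[^ \t#]/ { in_global = ($0 ~ /^global:/); in_cb = 0; next }
    # A second-level key inside global: remember whether it is cloudbees.
    in_global && /^  [^ \t#]/ { in_cb = ($0 ~ /^  cloudbees:/); next }
    # A non-empty imageRegistry or imageTag directly under global.cloudbees.
    in_cb && /^    (imageRegistry|imageTag):[ \t]*[^ \t]/ && $0 !~ /:[ \t]*""[ \t]*$/ { found = 1 }
    END { print (found ? "set" : "unset") }
  ' "$1"
}

# Example: check myvalues.yaml in the current directory, if present.
if [ -f myvalues.yaml ]; then
  echo "global.cloudbees image values: $(global_image_status myvalues.yaml)"
fi
```

If the helper prints `set`, the "with a global image set" procedures apply. If it prints `unset`, the component-level procedures apply.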

How to configure load balancing with ingress

For the default CloudBees CD/RO Helm charts, refer to Ingress values. You can find these cloudbees-flow values referenced in the values.yaml under the Flow ingress configuration section.

An ingress controller typically does not eliminate the need for an external load balancer; instead, it adds a layer of routing and control behind the load balancer. However, you can configure the ingress controller so that all service endpoints, such as web, server, and repository, are exposed from the same domain name and load balancer endpoint. CloudBees recommends configuring your ingress controller so all CloudBees CD/RO services can be exposed through a single load balancer.

By default, ingress is enabled in the CloudBees CD/RO chart. To run CloudBees CD/RO, you must use the ingress-nginx controller with the following required settings:

- ingress-nginx.enabled=true
- ingress.class=nginx

The following is a summary of the settings:

| Name | Description |
|---|---|
| ingress-nginx.controller.ingressClass | Default: |
| ingress-nginx.controller.publishService.enabled | Default: |
| ingress-nginx.controller.scope.enabled | Default: |
| ingress-nginx.enabled | Default: |
| ingress-nginx.tcp.61613 | CloudBees CD/RO server. Default: |
| ingress-nginx.tcp.8200 | CloudBees CD/RO repository. Default: |
| ingress-nginx.tcp.8443 | CloudBees CD/RO web server. Default: |
| ingress-nginx.tcp.9201 | CloudBees Analytics database. Default: |
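You can express the two required ingress settings either as `--set` flags or as nested YAML in your values file. The following minimal sketch writes them to a separate file, and the name ingress-values.yaml is hypothetical.

```shell
#!/usr/bin/env bash
# Write the required ingress settings as nested YAML.
# Equivalent flags: --set ingress-nginx.enabled=true --set ingress.class=nginx
set -euo pipefail

cat > ingress-values.yaml <<'EOF'
ingress:
  class: nginx
ingress-nginx:
  enabled: true
EOF

cat ingress-values.yaml
```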

How to install CloudBees CD/RO on Kubernetes using an existing database

You can install CloudBees CD/RO using your existing database if you are:

- Performing an initial installation of CloudBees CD/RO.
- Migrating from a traditional CloudBees CD/RO installation to Kubernetes.
- Spinning up an additional testing or production environment.
- Upgrading between CloudBees CD/RO versions.

The procedures to install your existing database with CloudBees CD/RO are nearly the same for each of these scenarios. However, if this is your initial installation, it may be easier to install CloudBees CD/RO using the instructions found in Install CloudBees CD/RO production server, and then update your installation with the steps listed here.

If you only want to find the values needed to configure your existing database as part of the Helm chart customization process, you can also refer to Database values.

Before you start

- Ensure the CloudBees CD/RO version you are installing or upgrading to supports the database you want to integrate. For more information on which databases are supported by each CloudBees CD/RO release, refer to Supported platforms for CloudBees CD/RO on Kubernetes.
- Always make backups of your project-specific resources before installing or upgrading your CloudBees CD/RO version. These backups may be vital to recovering your data if critical errors occur during upgrades.
- Always perform adequate and thorough testing in a development environment before releasing any installation or upgrade into your production environment.
- To see an example of a complete CloudBees CD/RO installation (not upgrade) using an existing database, refer to Example installation of CloudBees CD/RO using an existing database.

If you do not already have a myvalues.yaml, refer to CloudBees CD/RO default Helm charts to set yours up before continuing with these steps.

Installing CloudBees CD/RO with your existing database

To install your existing database:

- Make backups of your deployment and database. These backups may be necessary to recover data if critical errors occur during the upgrade process.

- Set the variables for the current release’s name, its namespace, and the version you want to install or upgrade to:

    releaseName="<your-current-release>"
    namespace="<your-current-release-namespace>"
    # Example upgradeVersion value: "2.21.0"
    upgradeVersion="<version-of-upgrade-release>"

- Save the values from your current release to a values file by running:

    helm get values $releaseName --namespace $namespace > old-values.yaml

  If this is your initial installation, this step is not needed. Continue with the remaining steps and apply them to your myvalues.yaml as described for old-values.yaml.

- Ensure your old-values.yaml contains values, or set the values, for the following parameters:
  - database.externalEndpoint
  - database.dbPort
  - database.dbType
  - database.dbName
  - database.dbUser
  - database.dbPassword
  - flowLicense
  - flowCredentials.adminPassword

  When using the helm install command, you can use the --set option to specify these values.

- If your old-values.yaml uses images.tag, you must update it with the image tag of the new CloudBees CD/RO release you want to upgrade to.
  If this is your initial installation and you downloaded the latest version of the values file from Sonatype Nexus, your values file already contains this information unless you removed it during customization. If you do not update images.tag, or update it with an incorrect value, your installation or upgrade will not succeed.

After you have performed these steps, set any additional values you need in your old-values.yaml for the CloudBees CD/RO installation. For more information refer to Configure Helm charts.
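Before installing, it can help to confirm that the parameters listed above are present in old-values.yaml. The following rough sketch is not a CloudBees tool. It only searches for the leaf key names, so it cannot tell which section a key belongs to. It also reports as missing any key you plan to pass with `--set` or `--set-file`, or any credential you supply through an existing secret, and you can ignore those entries. The function name check_required_keys is hypothetical.

```shell
#!/usr/bin/env bash
# Heuristic pre-flight check: report required keys missing from a values file.
# Matches leaf key names only (for example "dbUser:"); not a YAML validator.
set -uo pipefail

check_required_keys() {
  local file="$1" missing=0 key
  for key in externalEndpoint dbPort dbType dbName dbUser dbPassword \
             flowLicense adminPassword; do
    if ! grep -Eq "^[[:space:]]*${key}:" "$file"; then
      echo "missing: ${key}"
      missing=1
    fi
  done
  # Non-zero exit status when at least one key is missing.
  return "$missing"
}

if [ -f old-values.yaml ]; then
  check_required_keys old-values.yaml && echo "All required keys found."
fi
```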

Once you have your values file ready for installation, ensure the network connection between your database instance and Kubernetes cluster is active before continuing.

Next, copy the flow-server passkey and keystore files from your existing CloudBees CD/RO installation, and convert them to base64 format:

- Copy and save your flow-server passkey:

    # Get the name of a flow-server pod (the first one, if you run several server replicas)
    cdServerPod=$(kubectl get pod -l app=flow-server -o \
      jsonpath='{.items[0].metadata.name}' -n $namespace); echo $cdServerPod
    # Get the passkey file name
    passkey=$(kubectl -n $namespace exec $cdServerPod -- ls /tmp/ | grep passkey); echo $passkey
    # Copy the passkey file from the flow-server pod to the local machine
    kubectl -n $namespace cp $cdServerPod:/tmp/$passkey ./passkey
    # Ensure the passkey file has been copied
    ls -l | grep passkey

- Convert your passkey to base64 format:

    base64 -i passkey -o passkey.b64

- Copy and store the flow-server keystore files:

    # Get the keystore file name
    keystore=$(kubectl -n $namespace exec $cdServerPod -- ls /tmp/ | grep keystore); echo $keystore
    # Copy the keystore file from the flow-server pod to your local machine
    kubectl -n $namespace cp $cdServerPod:/tmp/$keystore ./keystore
    # Ensure the keystore file has been copied
    ls -l | grep keystore

- Convert your keystore to base64 format:

    base64 -i keystore -o keystore.b64

- Install CloudBees CD/RO using your old-values.yaml, passkey, keystore, and license.xml (if required):

    # Helm exits when all tasks are complete, regardless of the timeout. However, if your
    # database is very large, you may need to increase the timeout to avoid a FAILED
    # status (for instance, --timeout 10800s).
    # Keep the final --set-file line only if you need to include your license.xml.
    helm install $releaseName cloudbees/cloudbees-flow --namespace $namespace \
      --values old-values.yaml \
      --set-file server.customConfig.passkey\\.b64=passkey.b64 \
      --set-file server.customConfig.keystore\\.b64=keystore.b64 \
      --timeout 4200s \
      --set-file flowLicense.licenseData=license.xml

  If you are installing a CloudBees CD/RO instance with an external database, do not use the --wait option. The flow-server-init-job cannot be started if --wait is used.
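The `base64 -i <file> -o <file>.b64` commands above use the macOS/BSD base64 options. GNU coreutils base64, which is common on Linux, does not accept `-o`. The following portable sketch assumes the chart accepts single-line (unwrapped) base64, which is what the macOS command produces by default. The helper name to_b64 is hypothetical.

```shell
#!/usr/bin/env bash
# Portable alternative to "base64 -i <file> -o <file>.b64" (macOS/BSD syntax).
# GNU base64 wraps output at 76 columns by default, so read from stdin and
# strip newlines to produce single-line output on both platforms.
set -euo pipefail

to_b64() {
  base64 < "$1" | tr -d '\n' > "$1.b64"
}

# Convert the passkey and keystore files copied earlier, if they are present.
for f in passkey keystore; do
  if [ -f "$f" ]; then
    to_b64 "$f"
    echo "Wrote $f.b64"
  fi
done
```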

Example installation of CloudBees CD/RO using an existing database

For your reference, the following shows a complete installation example of CloudBees CD/RO using an existing database:

Example CloudBees CD/RO installation using existing database

    dbType=sqlserver
    dbEndpoint=mssql.k8s.xyz
    dbPort=1433
    dbUser=dbadmin
    dbPass=mySecretDbPassword
    dbName=myexistingdb
    flowPassword=mySecretFlowPassword
    analyticsPassword=mySecretAnalyticsPassword
    licenseFile=path/to/license.xml
    passkeyFile=path/to/passkey
    keystoreFile=path/to/keystore
    storageClass=nfs-client
    hostname=flow-server.k8s.xyz
    namespace=test-existing-db
    release=cloudbees-flow
    releaseVersion=2.21

    base64 -i $passkeyFile -o passkey.b64
    base64 -i $keystoreFile -o keystore.b64

    kubectl create namespace $namespace

    kubectl -n $namespace create secret \
      generic $release-cloudbees-flow-credentials \
      --from-literal CBF_SERVER_ADMIN_PASSWORD=$flowPassword

    kubectl -n $namespace create secret \
      generic $release-cloudbees-flow-analytics \
      --from-literal CBF_ANALYTICS_ADMIN_PASSWORD=$flowPassword \
      --from-literal CBF_ANALYTICS_PASSWORD=$analyticsPassword

    helm repo add cloudbees https://charts.cloudbees.com/public/cloudbees
    helm repo update
    helm search repo cloudbees/cloudbees-flow --versions

    helm install $release cloudbees/cloudbees-flow --version $releaseVersion \
      --namespace $namespace \
      --set storage.volumes.serverPlugins.storageClass=$storageClass \
      --set storage.volumes.repositoryStorage.storageClass=$storageClass \
      --set storage.volumes.analyticsStorage.storageClass=$storageClass \
      --set database.externalEndpoint=$dbEndpoint \
      --set database.dbPort=$dbPort \
      --set database.dbType=$dbType \
      --set database.dbName=$dbName \
      --set database.dbUser=$dbUser \
      --set database.dbPassword=$dbPass \
      --set flowCredentials.existingSecret=$release-cloudbees-flow-credentials \
      --set analytics.credentials.existingSecret=$release-cloudbees-flow-analytics \
      --set serverName=$hostname \
      --set ingress.host=$hostname \
      --set ingress.class=$release-nginx \
      --set ingress-nginx.controller.ingressClassResource.name=$release-nginx \
      --set ingress-nginx.controller.ingressClassResource.enabled=true \
      --set ingress-nginx.controller.ingressClassResource.default=false \
      --set ingress-nginx.controller.ingressClassResource.controllerValue="k8s.io/$release-ingress-nginx" \
      --set-file flowLicense.licenseData=$licenseFile \
      --set-file server.customConfig.passkey\\.b64=passkey.b64 \
      --set-file server.customConfig.keystore\\.b64=keystore.b64 \
      --timeout 4200s

How to configure the URL for Oracle databases

CloudBees CD/RO supports configuring Oracle databases as a custom alternative to the built-in database. When you configure an Oracle database, CloudBees CD/RO expects the database URL in the values.database.customDatabaseUrl field, using either the System Identifier (SID) or the service name. The following sections explain both options.

Using the Oracle database SID

How to use Oracle database SID for values.database.customDatabaseUrl

When using the SID to configure your database, the required format for the values.database.customDatabaseUrl value is:

Format:

    customDatabaseUrl: "jdbc:oracle:<driver>:@<serviceName>.<namespace>.svc.cluster.local:<portNumber>:<SID_NAME>"

Example:

    customDatabaseUrl: "jdbc:oracle:thin:@oracle-service.default.svc.cluster.local:1521:MYDB"

Where:

- jdbc:oracle:<driver>:@: The identifier for Oracle databases and driver. In the example, the thin driver is used.
- <serviceName>: The Kubernetes service name of the database service. In the example, oracle-service is the service name.
- <namespace>: The Kubernetes namespace. In the example, default is the namespace.
- .svc.cluster.local: The default DNS suffix for services in a Kubernetes cluster.
- <portNumber>: The port assigned to the Oracle database in the environment. In the example, 1521 is the port.
- <SID_NAME>: The SID assigned to the database when it was created. In the example, MYDB is the SID.
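To show how the parts listed above combine, the following sketch assembles the SID-style URL from its components. The function name oracle_sid_url is hypothetical. It only formats a string and does not contact the database.

```shell
#!/usr/bin/env bash
# Sketch: build the SID-style customDatabaseUrl value from its parts:
# jdbc:oracle:<driver>:@<serviceName>.<namespace>.svc.cluster.local:<portNumber>:<SID_NAME>
set -euo pipefail

oracle_sid_url() {
  local driver="$1" service="$2" namespace="$3" port="$4" sid="$5"
  printf 'jdbc:oracle:%s:@%s.%s.svc.cluster.local:%s:%s\n' \
    "$driver" "$service" "$namespace" "$port" "$sid"
}

# Values from the example above.
oracle_sid_url thin oracle-service default 1521 MYDB
# prints jdbc:oracle:thin:@oracle-service.default.svc.cluster.local:1521:MYDB
```

Place the printed string in values.database.customDatabaseUrl, quoted as in the example.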

The following steps describe how to find the values required for the SID URL.

- If you do not already have the service name and port of your database, run the following to list the service details in your namespace:

    kubectl get services -n <namespace>

  Example output:

    NAME             TYPE        CLUSTER-IP   EXTERNAL-IP   PORT(S)    AGE
    oracle-service   ClusterIP   10.0.0.1     <none>        1521/TCP   3d

  Where oracle-service is the service name, and the port is 1521.

- If you do not already have your SID, you can find it in the listener.ora file on your Oracle server.

Once you have the required information for the Oracle customDatabaseUrl configuration, either:

- Set customDatabaseUrl as part of your helm install or helm upgrade command using --set database.customDatabaseUrl="<YOUR-DB-SID-URL>".
  If you use this method and do not also update customDatabaseUrl in your values file before your next upgrade, the value in your values file overwrites it on that upgrade.

- Update your values file:
  - Open your values file, and navigate to values.database.
  - Locate the customDatabaseUrl field.
  - Add your SID URL using the format explained above:

        customDatabaseUrl: "jdbc:oracle:<driver>:@<serviceName>.<namespace>.svc.cluster.local:<portNumber>:<SID_NAME>"

  - After you save your file, install or upgrade CloudBees CD/RO.
    If you have not done so, include the other database values for your environment in the Helm chart.

Your Oracle database has now been configured to connect with CloudBees CD/RO.

Using the Oracle database service name

How to use Oracle database service name for values.database.customDatabaseUrl

When using the service name to configure your database, the required format for the values.database.customDatabaseUrl value is:

Format:

    customDatabaseUrl: "jdbc:oracle:<driver>:@//<hostName>:<portNumber>/<serviceName>"

Example:

    customDatabaseUrl: "jdbc:oracle:thin:@//dbserver.example.com:1521/mydbservice"

Where:

- jdbc:oracle:<driver>:@//: The identifier for Oracle databases and driver. In the example, the thin driver is used. The @// is required when using the service name.
- <hostName>: The hostname or IP address of the Oracle database server. In the example, dbserver.example.com is the hostname.
- <portNumber>: The port assigned to the Oracle database in the environment. In the example, 1521 is the port.
- <serviceName>: The service name assigned to the database in the Kubernetes deployment. In the example, mydbservice is the service name.
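Here is a similar sketch for the service name format, including the `@//` prefix described above. oracle_service_url is a hypothetical helper that only formats a string.

```shell
#!/usr/bin/env bash
# Sketch: build the service-name customDatabaseUrl value from its parts:
# jdbc:oracle:<driver>:@//<hostName>:<portNumber>/<serviceName>
set -euo pipefail

oracle_service_url() {
  local driver="$1" host="$2" port="$3" service="$4"
  printf 'jdbc:oracle:%s:@//%s:%s/%s\n' "$driver" "$host" "$port" "$service"
}

# Values from the example above.
oracle_service_url thin dbserver.example.com 1521 mydbservice
# prints jdbc:oracle:thin:@//dbserver.example.com:1521/mydbservice
```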

The following steps describe how to find the values required for the service name URL.

- If you do not already have the service name and port of your database, run the following to list the service details in your namespace:

    kubectl get services -n <namespace>

  Example output:

    NAME             TYPE        CLUSTER-IP   EXTERNAL-IP   PORT(S)    AGE
    oracle-service   ClusterIP   10.0.0.1     <none>        1521/TCP   3d

  Where oracle-service is the service name, and the port is 1521.

Once you have the required information for the Oracle customDatabaseUrl configuration, either:

- Set customDatabaseUrl as part of your helm install or helm upgrade command using --set database.customDatabaseUrl="<your-DB-serviceName-URL>".
  If you use this method and do not also update customDatabaseUrl in your values file before your next upgrade, the value in your values file overwrites it on that upgrade.

- Update your values file:
  - Open your values file, and navigate to values.database.
  - Locate the customDatabaseUrl field.
  - Add your service name URL using the format explained above:

        customDatabaseUrl: "jdbc:oracle:<driver>:@//<hostName>:<portNumber>/<serviceName>"

  - After you save your file, install or upgrade CloudBees CD/RO.
    If you have not done so, include the other database values for your environment in the Helm chart.

Your Oracle database has now been configured to connect with CloudBees CD/RO.

How to install custom ingress controllers with CloudBees CD/RO

To configure CloudBees CD/RO to use your custom or existing ingress controller:

1. Configure your ingress controller manifest to expose the following required TCP ports:
   - TCP port `8200` is required by `flow-repository`.
   - TCP ports `8443` and `61613` are required by `flow-server`.
   - (Optional) If you are using CloudBees Analytics, TCP port `9201` is required by `flow-analytics`.

   If you need help configuring the ingress controller manifest, refer to Exposing TCP and UDP services in the NGINX Ingress Controller documentation. Ensure you follow the example for TCP/UDP proxy support.

2. Update your `myvalues.yaml` with your custom ingress controller values:

   ```yaml
   ingress:
     enabled: true
     host: <your-host>
     annotations: <your-annotations>
     class: <your-custom-ingress-class>
   ```

   For a reference, refer to the CloudBees CD/RO Helm chart ingress example.

3. In your `myvalues.yaml`, ensure `ingress-nginx.enabled` is set to `false` for your installation:

   ```yaml
   ingress-nginx:
     enabled: false
   ```

   If your `myvalues.yaml` does not have an `ingress-nginx.enabled` entry, it defaults to `false` in the `cloudbees-flow` values file.

4. If you have not already, apply your ingress controller manifest to your Kubernetes CloudBees CD/RO cluster.
5. To apply the custom ingress controller configuration, update your CloudBees CD/RO installation:

   ```bash
   helm upgrade <server-releasename> cloudbees/cloudbees-flow \
     -f <myvalues.yaml> \
     --namespace <server-namespace> \
     --timeout 10000s
   ```
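If your existing controller is ingress-nginx, TCP ports are typically exposed through a ConfigMap that the controller reads through its `--tcp-services-configmap` flag, where each entry maps an external port to `<namespace>/<service>:<port>`. The following Bash sketch prints such a ConfigMap for the ports listed above. It assumes a ConfigMap named `tcp-services` in the `ingress-nginx` namespace, and the function name is hypothetical; match both to how your controller is actually configured. The controller's own Service must also expose the same ports.

```bash
#!/usr/bin/env bash
# Hypothetical helper: print an ingress-nginx "tcp-services" ConfigMap that maps
# the CloudBees CD/RO TCP ports listed above to their Services.
# Assumption: the ConfigMap is named tcp-services in the ingress-nginx namespace;
# adjust both to match your controller's --tcp-services-configmap flag.
render_tcp_services_configmap() {
  local cd_namespace="$1"                 # Namespace where CloudBees CD/RO runs
  local include_analytics="${2:-false}"   # "true" also exposes flow-analytics:9201
  local cm_namespace="${3:-ingress-nginx}"

  if [[ -z "$cd_namespace" ]]; then
    echo "usage: render_tcp_services_configmap <cd-namespace> [true|false] [configmap-namespace]" >&2
    return 1
  fi

  cat <<EOF
apiVersion: v1
kind: ConfigMap
metadata:
  name: tcp-services
  namespace: ${cm_namespace}
data:
  "8200": "${cd_namespace}/flow-repository:8200"
  "8443": "${cd_namespace}/flow-server:8443"
  "61613": "${cd_namespace}/flow-server:61613"
EOF

  # Optional CloudBees Analytics port.
  if [[ "$include_analytics" == "true" ]]; then
    printf '  "9201": "%s/flow-analytics:9201"\n' "$cd_namespace"
  fi
}
```

For example, `render_tcp_services_configmap cbflow true | kubectl apply -f -` would create or update the ConfigMap for a CloudBees CD/RO installation in the `cbflow` namespace.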

How to override CloudBees CD/RO Kubernetes network policies

As of v2023.04.0, you can override the default network policies for CloudBees CD/RO on Kubernetes by adding custom network policies and ingress controllers. This is useful when you deploy custom ingress controllers for internal networking between CloudBees CD/RO components or agents.

| CloudBees strongly suggests testing all network policy changes in a development environment before releasing them into your production environment. |

Before you start

Before overriding the default CloudBees CD/RO network policies:

- You must have developed one or more `NetworkPolicy` manifests that describe network behavior for all CloudBees CD/RO components.

  Example `NetworkPolicy` manifest:

  ```yaml
  apiVersion: networking.k8s.io/v1
  kind: NetworkPolicy
  metadata:
    annotations:
      meta.helm.sh/release-name: cbflow
      meta.helm.sh/release-namespace: cbflow
    labels:
      app.kubernetes.io/managed-by: Helm
    name: web-policy
    namespace: cbflow
  spec:
    ingress:
      - from:
          - ipBlock:
              cidr: 0.0.0.0/0
          - podSelector:
              matchLabels:
                app: flow-bound-agent
                release: cbflow
          - podSelector:
              matchLabels:
                app: flow-server
                release: cbflow
          - namespaceSelector:
              matchLabels:
                kubernetes.io/metadata.name: kube-system
            podSelector:
              matchLabels:
                app.kubernetes.io/name: ingress-nginx
        ports:
          - port: 2080
            protocol: TCP
          - port: 2443
            protocol: TCP
    podSelector:
      matchLabels:
        app: flow-web
        release: cbflow
    policyTypes:
      - Ingress
  ```

- Your `NetworkPolicy` manifests must be present in your cluster. To add manifests to your cluster, run:

  ```bash
  kubectl apply -f <your-NetworkPolicy-manifest.yaml> \
    --namespace <development-server-namespace>
  ```

  If you have more than one manifest, add an `-f` option for each one to the previous command.

- If your `myvalues.yaml` is based on the `cloudbees-flow` values file v2.23.1 or earlier, you must update the `Network isolation configuration` section with the new configurations. To do so:

  1. Go to Sonatype Nexus and download the latest `cloudbees-flow` release.
  2. Extract the package, and open `cloudbees-flow/values.yaml`.
  3. Find the `Network isolation configuration` section in the `values.yaml`, and copy it to your `myvalues.yaml`. Ensure the `networkIsolation.networkPolicies` configuration is present.

  When updating your `myvalues.yaml` with the latest `Network isolation configuration` section, ensure:

  - You do not overwrite any custom `networkIsolation.*` settings.
  - Your values file is valid and the indentation is correct.

  Failing to do so may cause your upgrade to fail or other unexpected behavior.

Override CloudBees CD/RO Kubernetes network policies

After you meet the requirements in Before you start, in your `myvalues.yaml`:

1. Navigate to the `Network isolation configuration` section.
2. To disable the default CloudBees CD/RO network policies, configure `networkIsolation.networkPolicies.enable: false`.

   After you configure `networkIsolation.networkPolicies.enable: false`, CloudBees CD/RO does not create its default network policies and depends completely on your custom policies. Failure to correctly configure your custom network policies may cause unexpected behavior in your deployment.

3. In the `additionalIngressSelector` for each CloudBees CD/RO component, provide the network policy configurations for your ingress.

   Example custom `cbflow-web` ingress configuration:

   ```yaml
   web:
     additionalIngressSelector:
       - namespaceSelector:
           matchLabels:
             kubernetes.io/metadata.name: kube-system
         podSelector:
           matchLabels:
             app.kubernetes.io/name: ingress-nginx
   ```

Once you've updated your `myvalues.yaml`, update your `flow-server` deployment:

```bash
helm upgrade <development-server-releasename> cloudbees/cloudbees-flow \
  -f <myvalues.yaml> \
  --namespace <development-server-namespace> \
  --timeout 10000s
```

You can now test your custom network policies in your development environment. After testing is complete, to update your production servers, refer to Update CloudBees CD/RO production servers.

How to install custom CloudBees Analytics init container images

CloudBees CD/RO supports integrations using custom CloudBees Analytics init container images. In your values file, the `analytics.sysctlInitContainer.imageRepository` and `analytics.sysctlInitContainer.imageTag` values configure the CloudBees Analytics init container image to include during installations and upgrades. To set these values:

1. Open your values file, and navigate to `analytics.sysctlInitContainer`.
2. Replace the `imageRepository` value with your init container image repository.
3. Replace the `imageTag` value with your init container image tag.
4. Ensure the YAML is well-formed, and save your file.

Now that you have updated your values file with your custom CloudBees Analytics init container image, update your CloudBees CD/RO environment to apply your image. For more information, refer to Update CloudBees CD/RO production servers.

| Always ensure you perform sufficient testing in your development environment before applying your changes to production environments. |

How to configure custom probing values

In CloudBees CD/RO v2023.12.0 and later Helm charts, liveness and readiness probe values were added for:

- CloudBees CD/RO server `jobInit`:
  - `jobInit.livenessProbe`
- CloudBees CD/RO web server:
  - `web.livenessProbe`
  - `web.readinessProbe`
- CloudBees CD/RO repository server:
  - `repository.livenessProbe`
  - `repository.readinessProbe`

To override the default values in the CloudBees CD/RO Helm charts, follow the instructions below to configure custom values in your `myvalues.yaml`.

Configure probing values for CloudBees CD/RO server jobInit

Add custom jobInit probe values

To configure custom `jobInit.livenessProbe` values:

1. In your `myvalues.yaml`, navigate to `values.jobInit`.
2. Add the following fields under `jobInit`:

   ```yaml
   ## Kubernetes Liveness Probes:
   livenessProbe:
     initialDelaySeconds: <INITIAL-DELAY-IN-SECONDS>
     periodSeconds: <PROBE-PERIOD-IN-SECONDS>
     timeoutSeconds: <TIMEOUT-LENGTH-IN-SECONDS>
   ```

   The default values are:

   ```yaml
   ## Kubernetes Liveness Probes:
   livenessProbe:
     initialDelaySeconds: 60
     periodSeconds: 60
     timeoutSeconds: 10
   ```

3. Install or upgrade your CloudBees CD/RO instance to apply these values.

Configure probing values for CloudBees CD/RO web server

Add custom web probe values

To configure custom `web.livenessProbe` or `web.readinessProbe` values:

1. In your `myvalues.yaml`, navigate to `values.web`.
2. Add the applicable fields under `web`:

   `web.livenessProbe`:

   ```yaml
   ## Kubernetes Liveness Probes:
   livenessProbe:
     initialDelaySeconds: <INITIAL-DELAY-IN-SECONDS>
     periodSeconds: <PROBE-PERIOD-IN-SECONDS>
     timeoutSeconds: <TIMEOUT-LENGTH-IN-SECONDS>
   ```

   `web.readinessProbe`:

   ```yaml
   ## Kubernetes Readiness Probes:
   readinessProbe:
     initialDelaySeconds: <INITIAL-DELAY-IN-SECONDS>
     periodSeconds: <PROBE-PERIOD-IN-SECONDS>
     timeoutSeconds: <TIMEOUT-LENGTH-IN-SECONDS>
     failureThreshold: <FAILURE-THRESHOLD-COUNT>
   ```

   The default values are:

   `web.livenessProbe` defaults:

   ```yaml
   ## Kubernetes Liveness Probes:
   livenessProbe:
     initialDelaySeconds: 10
     periodSeconds: 60
     timeoutSeconds: 10
     failureThreshold: 3
   ```

   `web.readinessProbe` defaults:

   ```yaml
   ## Kubernetes Readiness Probes:
   readinessProbe:
     initialDelaySeconds: 10
     periodSeconds: 5
     timeoutSeconds: 10
     failureThreshold: 3
   ```

3. Install or upgrade your CloudBees CD/RO instance to apply these values.

Configure probing values for CloudBees CD/RO repository server

Add custom repository probe values

To configure custom `repository.livenessProbe` or `repository.readinessProbe` values:

1. In your `myvalues.yaml`, navigate to `values.repository`.
2. Add the applicable fields under `repository`:

   `repository.livenessProbe`:

   ```yaml
   ## Kubernetes Liveness Probes:
   livenessProbe:
     initialDelaySeconds: <INITIAL-DELAY-IN-SECONDS>
     periodSeconds: <PROBE-PERIOD-IN-SECONDS>
     timeoutSeconds: <TIMEOUT-LENGTH-IN-SECONDS>
     failureThreshold: <FAILURE-THRESHOLD-COUNT>
   ```

   `repository.readinessProbe`:

   ```yaml
   ## Kubernetes Readiness Probes:
   readinessProbe:
     initialDelaySeconds: <INITIAL-DELAY-IN-SECONDS>
     periodSeconds: <PROBE-PERIOD-IN-SECONDS>
     timeoutSeconds: <TIMEOUT-LENGTH-IN-SECONDS>
     failureThreshold: <FAILURE-THRESHOLD-COUNT>
   ```

   The default values are:

   `repository.livenessProbe` defaults:

   ```yaml
   ## Kubernetes Liveness Probes:
   livenessProbe:
     initialDelaySeconds: 120
     periodSeconds: 10
     timeoutSeconds: 5
     failureThreshold: 3
   ```

   `repository.readinessProbe` defaults:

   ```yaml
   ## Kubernetes Readiness Probes:
   readinessProbe:
     initialDelaySeconds: 120
     periodSeconds: 5
     timeoutSeconds: 5
     failureThreshold: 3
   ```

3. Install or upgrade your CloudBees CD/RO instance to apply these values.
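To see how these settings combine, the following Bash sketch gives a rough estimate of how long a container whose liveness probe never succeeds keeps running before the kubelet restarts it: the first probe runs after `initialDelaySeconds`, later probes run every `periodSeconds`, each attempt can take up to `timeoutSeconds`, and the kubelet acts after `failureThreshold` consecutive failures (Kubernetes uses 3 when the field is not set). The formula ignores kubelet scheduling jitter, so treat the result as an approximation; the function name is hypothetical.

```bash
#!/usr/bin/env bash
# Hypothetical helper: rough worst-case seconds from container start until the
# kubelet restarts a container whose liveness probe never succeeds:
#   initialDelaySeconds + (failureThreshold - 1) * periodSeconds + timeoutSeconds
# Ignores kubelet jitter, so treat the result as an estimate only.
probe_give_up_seconds() {
  # The 4th argument defaults to 3, the Kubernetes default failureThreshold.
  local initial_delay="$1" period="$2" timeout="$3" failure_threshold="${4:-3}"
  local v
  for v in "$initial_delay" "$period" "$timeout" "$failure_threshold"; do
    if [[ ! "$v" =~ ^[0-9]+$ ]]; then
      echo "error: arguments must be non-negative integers" >&2
      return 1
    fi
  done
  if (( failure_threshold < 1 )); then
    echo "error: failureThreshold must be at least 1" >&2
    return 1
  fi
  echo $(( initial_delay + (failure_threshold - 1) * period + timeout ))
}
```

For example, assuming the `jobInit` liveness probe uses the Kubernetes default `failureThreshold` of 3, `probe_give_up_seconds 60 60 10` prints `190`, and the repository liveness defaults (`probe_give_up_seconds 120 10 5 3`) give `145`. Raising `initialDelaySeconds` delays only the first probe, while raising `periodSeconds` also slows detection of every later failure.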

How to autoscale server pods

A HorizontalPodAutoscaler (HPA) automatically updates a workload resource to scale the workload to match demand. HPA deploys additional pods in response to an increased load.

For more information, refer to Horizontal Pod Autoscaling.

CloudBees CD/RO includes horizontal pod autoscaling support for the following deployment components:

- CloudBees CD/RO server
- Web server
- Repository server

CloudBees CD/RO server

The CloudBees CD/RO server supports HPA only when clusteredMode is true.

To enable HPA for the CloudBees CD/RO server, add the following parameter values:

```yaml
server:
  autoscaling:
    enabled: true # Set to true to enable HPA for the server
    minReplicas: 1 # Minimum number of replicas
    maxReplicas: 3 # Maximum number of replicas to scale to
    targetCPUUtilizationPercentage: 80 # CPU threshold to scale up
    targetMemoryUtilizationPercentage: 80 # Memory threshold to scale up
    templates: [] # Custom or additional autoscaling metrics
    # ref: https://kubernetes.io/docs/tasks/run-application/horizontal-pod-autoscale/#support-for-custom-metrics
    # - type: Pods
    #   pods:
    #     metric:
    #       name: repository_process_requests_total
    #     target:
    #       type: AverageValue
    #       averageValue: 10000m
```

| server.autoscaling.minReplicas must match server.replicas. |
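Kubernetes documents the HPA scaling rule as `desiredReplicas = ceil(currentReplicas * currentMetricValue / desiredMetricValue)`; for utilization targets, the metric is average usage as a percentage of the pods' resource requests. When several metrics are configured, such as CPU and memory above, the HPA uses the largest proposed replica count and keeps the result between `minReplicas` and `maxReplicas`. The following Bash sketch applies that rule with integer arithmetic to illustrate how `targetCPUUtilizationPercentage` and `targetMemoryUtilizationPercentage` drive scaling. It ignores the controller's tolerance band (10% by default) and scale-down stabilization, so it approximates rather than reproduces HPA decisions; the function name is hypothetical.

```bash
#!/usr/bin/env bash
# Hypothetical helper approximating the HPA rule for two utilization metrics:
#   proposal = ceil(currentReplicas * currentUtilization / targetUtilization)
# The larger of the CPU and memory proposals wins, clamped to [min, max].
# Ignores the HPA tolerance band and stabilization windows.
# Args: current cpuNow cpuTarget memNow memTarget minReplicas maxReplicas
hpa_desired_replicas() {
  local current="$1" cpu_now="$2" cpu_target="$3" mem_now="$4" mem_target="$5"
  local min="$6" max="$7"
  local v
  for v in "$current" "$cpu_now" "$cpu_target" "$mem_now" "$mem_target" "$min" "$max"; do
    if [[ ! "$v" =~ ^[0-9]+$ ]]; then
      echo "error: arguments must be non-negative integers" >&2
      return 1
    fi
  done
  if (( cpu_target == 0 || mem_target == 0 || min > max )); then
    echo "error: targets must be > 0 and minReplicas <= maxReplicas" >&2
    return 1
  fi
  # Integer ceiling division: ceil(a / b) == (a + b - 1) / b for a >= 0, b > 0.
  local by_cpu=$(( (current * cpu_now + cpu_target - 1) / cpu_target ))
  local by_mem=$(( (current * mem_now + mem_target - 1) / mem_target ))
  local desired=$(( by_cpu > by_mem ? by_cpu : by_mem ))
  if (( desired < min )); then desired=$min; fi
  if (( desired > max )); then desired=$max; fi
  echo "$desired"
}
```

For example, two server replicas averaging 120% of their CPU request and 50% of their memory request, with the 80% targets and the 1 to 3 replica range above, give `hpa_desired_replicas 2 120 80 50 80 1 3`, which prints `3`.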

Web server

The web server supports scaling in both cluster and non-cluster modes.

To enable HPA for the web server, add the following parameter values:

```yaml
web:
  autoscaling:
    enabled: true # Set to true to enable HPA for the web server
    minReplicas: 1 # Minimum number of replicas
    maxReplicas: 3 # Maximum number of replicas to scale to
    targetCPUUtilizationPercentage: 80 # CPU threshold to scale up
    targetMemoryUtilizationPercentage: 80 # Memory threshold to scale up
    templates: [] # Custom or additional autoscaling metrics
    # ref: https://kubernetes.io/docs/tasks/run-application/horizontal-pod-autoscale/#support-for-custom-metrics
    # - type: Pods
    #   pods:
    #     metric:
    #       name: repository_process_requests_total
    #     target:
    #       type: AverageValue
    #       averageValue: 10000m
```

| web.autoscaling.minReplicas must match web.replicas. |

Repository server

The repository server supports scaling in both cluster and non-cluster modes.

To enable HPA for the repository server, add the following parameter values:

```yaml
repository:
  autoscaling:
    enabled: true # Set to true to enable HPA for the repository server
    minReplicas: 1 # Minimum number of replicas
    maxReplicas: 3 # Maximum number of replicas to scale to
    targetCPUUtilizationPercentage: 80 # CPU threshold to scale up
    targetMemoryUtilizationPercentage: 80 # Memory threshold to scale up
    templates: [] # Custom or additional autoscaling metrics
    # ref: https://kubernetes.io/docs/tasks/run-application/horizontal-pod-autoscale/#support-for-custom-metrics
    # - type: Pods
    #   pods:
    #     metric:
    #       name: repository_process_requests_total
    #     target:
    #       type: AverageValue
    #       averageValue: 10000m
```

| repository.autoscaling.minReplicas must match repository.replicas. |

How to use an existing secret for bound agents

If you are using an existing secret in your values file for `flowCredentials.existingSecret`, you may get the following error message when you try to install the bound agent (`cbflow-agent`):

```text
To use flowCredentials.existingSecret, please set \
boundAgent.flowCredentials.existingSecret to the same value \
as flowCredentials.existingSecret in your values file.
```

This error occurs because, when you use an existing secret in `flowCredentials.existingSecret`, CloudBees CD/RO expects the bound agent to use the same existing secret.

To use your existing CloudBees CD/RO `flow-server` secret for the bound agent (`cbflow-agent`):

1. Open your `myvalues.yaml`, and search for `boundAgent:`.
2. In the `boundAgent` configuration, add the following:

   ```yaml
   flowCredentials:
     existingSecret: <your-flowCredentials.existingSecret-value>
   ```

3. Ensure the entries are correctly indented, and save your file.

On your next install or upgrade attempt, the `boundAgent.flowCredentials.existingSecret` error message should no longer appear.

How to add additional container values for sidecar injectors

For the default CloudBees CD/RO Helm charts, refer to cloudbees-flow chart configuration values for each component. You can find these cloudbees-flow values referenced in the values.yaml under the *.additionalContainers tag for the corresponding component.

| Key | Description/Default |
|---|---|
| server.additionalContainers | To add additional containers for the server, uncomment the name, image, and command in your values file. |
| web.additionalContainers | To add additional containers, uncomment the name, image, and command in your values file. |
| analytics.additionalContainers | To add additional containers, uncomment the name, image, and command in your values file. |
| repository.additionalContainers | To add additional containers, uncomment the name, image, and command in your values file. |
| boundAgent.additionalContainers | To add additional containers, uncomment the name, image, and command in your values file. |

How to add additional volume values for sidecar injectors

For the default CloudBees CD/RO Helm charts, refer to cloudbees-flow chart configuration values for each component. You can find these cloudbees-flow values referenced in the values.yaml under the *.additionalVolume and *.additionalVolumeMounts tags for the corresponding component.

| Key | Description/Default |
|---|---|
| server.additionalVolume, server.additionalVolumeMounts | You can use |
| web.additionalVolume, web.additionalVolumeMounts | To add an additional volume and mount, update the settings in the |
| repository.additionalVolume, repository.additionalVolumeMounts | To add an additional volume and mount, update the settings in the |
| boundAgent.additionalVolume, boundAgent.additionalVolumeMounts | To add an additional volume and mount, update the settings in the |

How to reduce ZooKeeper startup time in cluster mode

When deploying CloudBees CD/RO with `clusteredMode` enabled, ZooKeeper pod replicas may fail or restart multiple times before becoming Ready. This happens because the pods are scheduled in order, and each replica must be Ready before the next one is scheduled.

To reduce initialization times, you can set `publishNotReadyAddresses: true` in the ZooKeeper `service-headless.yaml`. This allows DNS records to be published even if pods are not ready, which may help complete discovery before other pods are probed as Ready.

| In CloudBees CD/RO v2023.06.0 and later, these values are updated in the default Helm charts, so you only need to upgrade to one of these versions to get the improvements. If you want to continue using an older CloudBees CD/RO version, follow the steps in Before you start and Configure ZooKeeper to reduce startup time in cluster mode. |

Before you start

The following are important steps or prerequisites you should understand or perform before making changes to the CloudBees CD/RO ZooKeeper configuration:

- There are two procedures you can use to update the `service-headless.yaml` in your deployment:

  - You can use `kubectl apply` to change the current deployment without updating the entire deployment.

    When you use `kubectl apply`, the next `helm upgrade` you run may revert these changes, depending on the version you are upgrading to. If you choose to only use `kubectl apply` and patch your current deployment, ensure the `service-headless.yaml` template is updated in the cloudbees-flow chart version for your next upgrade before running `helm upgrade`.

  - You can update the `service-headless.yaml` within your cloudbees-flow Helm charts, and then use `helm upgrade` to update the entire deployment.

    If you are using `helm upgrade`, you need the cloudbees-flow package of your current deployment and a copy of any `myvalues.yaml` files that need to be applied to the deployment. If you have a copy of the cloudbees-flow package for your CloudBees CD/RO version, you can find the files at `cloudbees-flow/charts/zookeeper/templates/`.

    If you do not have a local copy of your CloudBees CD/RO Helm charts:

    1. Update your cloudbees-flow Helm repository by running:

       ```bash
       helm repo update cloudbees
       ```

    2. To see a list of all available charts, run:

       ```bash
       helm search repo cloudbees/cloudbees-flow -l
       ```

    3. Find the APP VERSION of your CloudBees CD/RO deployment, and note its CHART VERSION.
    4. To download a local copy of the Helm charts for your CloudBees CD/RO version, run:

       ```bash
       helm pull cloudbees/cloudbees-flow --version <CHART VERSION>
       ```

    5. By default, `helm pull` saves the package archive in your current directory; use `--destination` to choose another location. Charts that Helm downloads during installs and upgrades are cached as archives in the `$HELM_REPOSITORY_CACHE` path, which you can display by running `helm env`.

Configure ZooKeeper to reduce startup time in cluster mode

The following steps describe how to reconfigure your ZooKeeper integration to help stabilize and reduce startup times. There are two methods you can use depending on your requirements:

- Patch ZooKeeper using Kubectl: Apply changes to your current deployment only.
- Update ZooKeeper using Helm: Update your deployment to apply changes from the cloudbees-flow Helm charts.

| Both methods and their prerequisites are described in Before you start. |

Patch ZooKeeper using Kubectl

Prerequisite information for this task is listed in Before you start. Ensure you understand this information before starting.

| Using `kubectl apply` updates only the current deployment, not the ZooKeeper Helm charts. The next time you run `helm upgrade`, these changes are overwritten unless you apply them as described in Update ZooKeeper using Helm or upgrade to CloudBees CD/RO 2023.06.0 or later. |

To reconfigure ZooKeeper using `kubectl apply` and patch your deployment:

1. To create a local copy of the ZooKeeper headless service definition, run:

   ```bash
   kubectl get svc zookeeper-headless -n <your-namespace> -o yaml > zookeeper-headless.yaml
   ```

2. Open `zookeeper-headless.yaml` in an editor, and add `publishNotReadyAddresses: true` to the `spec` section. This file contains the rendered service, not the Helm template, so keep the existing rendered values and only add the new field:

   ```yaml
   spec:
     clusterIP: None
     # Keep the existing rendered ports, selector, and other fields unchanged.
     publishNotReadyAddresses: true
   ```

3. To apply the updated service to your CloudBees CD/RO deployment, run:

   ```bash
   kubectl apply -f zookeeper-headless.yaml -n <your-namespace>
   ```

   This should return the message `service/zookeeper-headless configured`.

   (Optional) You can check the updated version by running:

   ```bash
   kubectl get svc zookeeper-headless -n <your-namespace> -o yaml
   ```

4. To restart the ZooKeeper service and apply the changes, run:

   ```bash
   kubectl rollout restart deployment/<your-cloudbees-flow-deployment> -n <your-namespace>
   ```

5. (Optional) To monitor the status of the upgrade and the initialization of the ZooKeeper pods, run:

   ```bash
   kubectl get pods --namespace <your-namespace> --watch
   ```

This should help reduce the startup times associated with ZooKeeper and stabilize the installation. If ZooKeeper errors are returned, review each step to ensure the correct values were used.
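As an alternative to editing the exported file by hand, `kubectl patch` can set this single field in place with a merge patch. The following Bash sketch wraps that command; the function name and the `DRY_RUN` switch are hypothetical additions for previewing the command, and the same `helm upgrade` caveat applies because the patch changes only the live service.

```bash
#!/usr/bin/env bash
# Hypothetical helper: set publishNotReadyAddresses on the live ZooKeeper
# headless service with a JSON merge patch (kubectl patch --type merge).
# Set DRY_RUN=1 to print the command instead of running it.
patch_zookeeper_headless() {
  local namespace="$1"
  if [[ -z "$namespace" ]]; then
    echo "usage: patch_zookeeper_headless <namespace>" >&2
    return 1
  fi
  local -a cmd
  cmd=(kubectl patch svc zookeeper-headless -n "$namespace" --type merge
       -p '{"spec":{"publishNotReadyAddresses":true}}')
  if [[ "${DRY_RUN:-0}" == "1" ]]; then
    echo "${cmd[*]}"
  else
    "${cmd[@]}"
  fi
}
```

After running `patch_zookeeper_headless <your-namespace>`, the command `kubectl get svc zookeeper-headless -n <your-namespace> -o jsonpath='{.spec.publishNotReadyAddresses}'` should print `true`.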

Update ZooKeeper using Helm

The prerequisites for this task are listed in Before you start, and you should perform them before starting.

To reconfigure ZooKeeper using `helm upgrade` and update your deployment:

1. Navigate to the cloudbees-flow package archive for your CloudBees CD/RO version (for example, the directory where you ran `helm pull`, or your `$HELM_REPOSITORY_CACHE` path), and extract it. For more information on obtaining the package, refer to Before you start.
2. In the extracted CloudBees CD/RO package, navigate to `cloudbees-flow/charts/zookeeper/templates/`.
3. In the `service-headless.yaml` template, update the `spec` section as follows:

   ```yaml
   spec:
     clusterIP: None
     ports:
     {{- range $key, $port := .Values.ports }}
       - name: {{ $key }}
         port: {{ $port.containerPort }}
         targetPort: {{ $key }}
         protocol: {{ $port.protocol }}
     {{- end }}
     publishNotReadyAddresses: true
     selector:
       app: {{ template "zookeeper.name" . }}
       release: {{ .Release.Name }}
   ```

4. To upgrade CloudBees CD/RO with the new templates and values files, run the following from the directory that contains the extracted `cloudbees-flow` chart:

   ```bash
   # You can use multiple instances of -f if you have more than one values file.
   # Helm upgrades exit when all tasks are complete, regardless of timeout.
   # However, if your database is very large, you may need to increase the
   # timeout (for instance, --timeout 10800s) to prevent a FAILED status.
   helm upgrade <your-release-name> cloudbees-flow \
     --namespace <your-namespace> \
     -f <path-to-your-myvalues.yaml> \
     --timeout 4200s
   ```

5. (Optional) To monitor the status of the upgrade and the initialization of the ZooKeeper pods, run:

   ```bash
   kubectl get pods --namespace <your-namespace> --watch
   ```

This should help reduce the startup times associated with ZooKeeper and stabilize the installation. If your upgrade fails or ZooKeeper errors are returned, review each step to ensure the correct values were used.

How to update CloudBees CD/RO properties in Zookeeper

This section provides step-by-step instructions to update the server and database properties files (commander.properties and database.properties) on the CloudBees CD/RO server pod using Kubernetes commands.

| To perform the following procedure, you must have the necessary cluster permissions to execute these commands. |

To update CloudBees CD/RO properties in Zookeeper:

1. Set the `NAMESPACE` environment variable to the appropriate namespace:

   ```bash
   export NAMESPACE=<namespace>
   ```

2. To retrieve the CloudBees CD/RO server pod name and launch a shell in it, run:

   ```bash
   SERVER_POD_NAME=$(kubectl get pods -l app=flow-server -o jsonpath='{.items[0].metadata.name}' -n $NAMESPACE)
   kubectl exec -it $SERVER_POD_NAME -n $NAMESPACE -- bash
   ```

3. Download the properties file from ZooKeeper:

   1. Navigate to the `/tmp` directory.
   2. Download the properties file by running the command for your file.

      `commander.properties`:

      ```bash
      /opt/cbflow/jre/bin/java -DCOMMANDER_ZK_CONNECTION=zookeeper:2181 -jar /opt/cbflow/server/bin/zk-config-tool-jar-with-dependencies.jar com.electriccloud.commander.cluster.ZKConfigTool --readFile /commander/conf/commander.properties ./commander.properties
      ```

      `database.properties`:

      ```bash
      /opt/cbflow/jre/bin/java -DCOMMANDER_ZK_CONNECTION=zookeeper:2181 -jar /opt/cbflow/server/bin/zk-config-tool-jar-with-dependencies.jar com.electriccloud.commander.cluster.ZKConfigTool --readFile /commander/conf/database.properties ./database.properties
      ```

4. Exit the pod shell. From your local environment, copy the properties file to your current directory by running the command for your file.

   `commander.properties`:

   ```bash
   kubectl cp $SERVER_POD_NAME:/tmp/commander.properties ./commander.properties -n $NAMESPACE
   ```

   `database.properties`:

   ```bash
   kubectl cp $SERVER_POD_NAME:/tmp/database.properties ./database.properties -n $NAMESPACE
   ```

   CloudBees strongly recommends creating a backup of this version of the properties file to enable quick recovery if issues arise after redeploying the updated file.

5. Edit your local copy of the properties file.
6. Upload the updated properties file to the CloudBees CD/RO server pod by running the command for your file.

   `commander.properties`:

   ```bash
   kubectl cp ./commander.properties $SERVER_POD_NAME:/tmp/commander.properties -n $NAMESPACE
   ```

   `database.properties`:

   ```bash
   kubectl cp ./database.properties $SERVER_POD_NAME:/tmp/database.properties -n $NAMESPACE
   ```

7. Upload the updated properties file to ZooKeeper:

   1. Open a shell in the server pod again, as in step 2, and navigate to the `/tmp` directory.
   2. Upload the properties file by running the command for your file.

      `commander.properties`:

      ```bash
      /opt/cbflow/jre/bin/java -DCOMMANDER_ZK_CONNECTION=zookeeper:2181 -jar /opt/cbflow/server/bin/zk-config-tool-jar-with-dependencies.jar com.electriccloud.commander.cluster.ZKConfigTool --writeFile /commander/conf/commander.properties ./commander.properties
      ```

      `database.properties`:

      ```bash
      /opt/cbflow/jre/bin/java -DCOMMANDER_ZK_CONNECTION=zookeeper:2181 -jar /opt/cbflow/server/bin/zk-config-tool-jar-with-dependencies.jar com.electriccloud.commander.cluster.ZKConfigTool --writeFile /commander/conf/database.properties ./database.properties
      ```

8. For the changes to take effect, restart the CloudBees CD/RO server pods:

   1. Create a new bash script file, such as `restart_cdro_pods.sh`.
   2. Paste the following content.

      If your environment uses a name other than `flow-server` for the deployment name, update the `DEPLOYMENT_NAME` variable in the script. Additionally, if the `NAMESPACE` variable is not set, the script fails. Run the following to set it:

      ```bash
      export NAMESPACE=<namespace>
      ```

      `restart_cdro_pods.sh` script:

      ```bash
      #!/bin/bash

      # Set variables
      DEPLOYMENT_NAME="flow-server" # Replace with your deployment name.

      if [[ -z "$NAMESPACE" ]]; then
        echo "Error: NAMESPACE variable is not set. Set it and try again."
        exit 1
      fi

      # Check if all new pods are running and ready:
      wait_for_all_pods_ready() {
        local old_pod="$1"
        echo "Waiting for all new pods to be in 'Running' and 'Ready' state..."
        while true; do
          # Get all new pods, excluding the pod that is being deleted:
          NEW_PODS=$(kubectl get pods -n "$NAMESPACE" -l app="$DEPLOYMENT_NAME" \
            -o jsonpath='{.items[?(@.metadata.name!="'"$old_pod"'")].metadata.name}')

          # Initialize a flag to check if all pods are ready:
          ALL_READY=true
          # An empty list means the replacement pod does not exist yet:
          if [[ -z "$NEW_PODS" ]]; then
            ALL_READY=false
          fi
          for NEW_POD in $NEW_PODS; do
            STATUS=$(kubectl get pod "$NEW_POD" -n "$NAMESPACE" -o jsonpath='{.status.phase}')
            READY=$(kubectl get pod "$NEW_POD" -n "$NAMESPACE" -o jsonpath='{.status.containerStatuses[0].ready}')
            echo "Checking pod $NEW_POD - Status: $STATUS, Ready: $READY"
            if [[ "$STATUS" != "Running" || "$READY" != "true" ]]; then
              ALL_READY=false
            fi
          done

          # If all pods are ready, break out of the while loop:
          if [[ "$ALL_READY" == "true" ]]; then
            echo "All new pods are 'Running' and 'Ready'."
            break
          fi
          echo "Not all new pods are ready. Retrying in 5 seconds..."
          sleep 5
        done
      }

      # Get all pods in the deployment:
      PODS=$(kubectl get pods -n "$NAMESPACE" -l app="$DEPLOYMENT_NAME" -o jsonpath='{.items[*].metadata.name}')

      # Delete pods one by one:
      for POD in $PODS; do
        echo "Deleting pod $POD..."
        kubectl delete pod "$POD" -n "$NAMESPACE"
        # Wait for all new pods to be ready before deleting the next one:
        wait_for_all_pods_ready "$POD"
      done

      echo "All pods have been replaced successfully."
      ```

   3. Run the script to restart the CloudBees CD/RO server pods:

      ```bash
      bash restart_cdro_pods.sh
      ```

After the CloudBees CD/RO pod restarts, confirm the changes were successful by checking the application logs and updated functionality.
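Because the ZKConfigTool commands above differ only in the action and the file name, you could wrap them in a small function. The following Bash sketch is a hypothetical convenience, not part of CloudBees CD/RO. `zk_props` is meant to run inside the server pod from `/tmp` and reuses the Java path, JAR, class, and `zookeeper:2181` connection string from the commands above; `ZK_CONNECTION` and `DRY_RUN` are assumed environment variables for overriding the connection and previewing the command. `backup_props` is meant for your local copy before you edit it, following the backup recommendation above.

```bash
#!/usr/bin/env bash
# Hypothetical wrapper around the ZKConfigTool commands shown above.
# zk_props runs inside the CloudBees CD/RO server pod, from /tmp.
# Assumed overrides: ZK_CONNECTION (default zookeeper:2181) and DRY_RUN=1,
# which prints the command instead of running it.
CBFLOW_JAVA="/opt/cbflow/jre/bin/java"
ZK_TOOL_JAR="/opt/cbflow/server/bin/zk-config-tool-jar-with-dependencies.jar"

zk_props() {
  local action="$1" file="$2" flag
  case "$action" in
    read)  flag="--readFile" ;;
    write) flag="--writeFile" ;;
    *) echo "usage: zk_props read|write <commander.properties|database.properties>" >&2; return 1 ;;
  esac
  case "$file" in
    commander.properties|database.properties) ;;
    *) echo "error: unsupported file: $file" >&2; return 1 ;;
  esac
  local -a cmd
  cmd=("$CBFLOW_JAVA" "-DCOMMANDER_ZK_CONNECTION=${ZK_CONNECTION:-zookeeper:2181}" -jar "$ZK_TOOL_JAR"
       com.electriccloud.commander.cluster.ZKConfigTool
       "$flag" "/commander/conf/${file}" "./${file}")
  if [[ "${DRY_RUN:-0}" == "1" ]]; then
    echo "${cmd[*]}"
  else
    "${cmd[@]}"
  fi
}

# Hypothetical helper for your local environment: keep a timestamped copy of a
# properties file before you edit it, and print the copy's name.
backup_props() {
  local file="$1"
  if [[ ! -f "$file" ]]; then
    echo "error: $file not found" >&2
    return 1
  fi
  local backup
  backup="${file}.$(date +%Y%m%d%H%M%S).bak"
  cp -p "$file" "$backup" && echo "$backup"
}
```

For example, inside the pod, `zk_props read commander.properties` downloads the file and `zk_props write commander.properties` uploads it; locally, `backup_props commander.properties` prints the name of the timestamped copy it creates.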

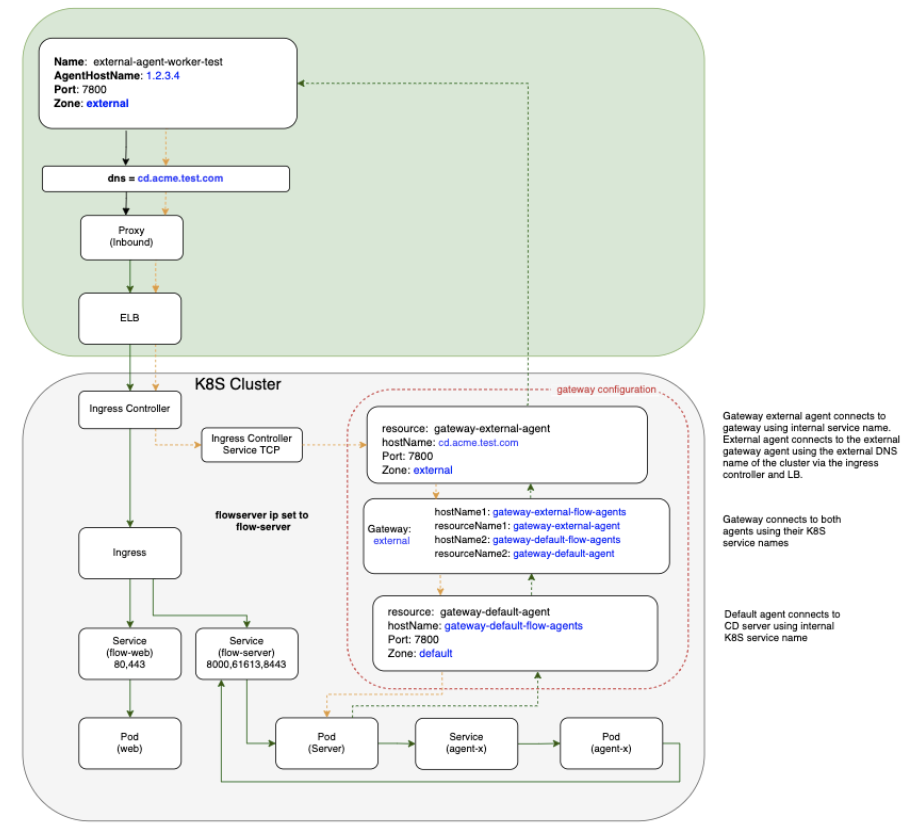

How to configure internal and external agents

A CloudBees CD/RO installation on Kubernetes uses internal services within the cluster to communicate between components, which keeps communication within the cluster efficient and secure. A common method for deploying CloudBees CD/RO on Kubernetes is to install the CloudBees CD/RO components inside the Kubernetes cluster and install worker agents, such as deployment targets, outside the cluster on VMs and traditional platforms.

In this mixed deployment, the CloudBees CD/RO components communicate with each other through internal services on Kubernetes, while communication with CD agents happens outside the cluster. These external agents can communicate with the internal components using a pair of gateway agents.

Gateway agent pairs can be installed using one of these methods:

- Using the CloudBees CD/RO Helm chart
- Using the CloudBees CD/RO agent Helm chart

Installing gateway agent pairs using the CloudBees CD/RO Helm chart

1. Enable both `internalGatewayAgent` and `externalGatewayAgent` so that the CloudBees CD/RO chart installs the agent sub-chart as gateway agents. Make sure you set `ingress.host` and `externalGatewayAgent.service.publicHostName` to the same value:

   ```yaml
   ingress:
     host: my-flow.example.com
   internalGatewayAgent:
     enabled: true
   externalGatewayAgent:
     enabled: true
     service:
       publicHostName: my-flow.example.com
   ```

2. Ensure your default values file has the `ingress-nginx` TCP config map settings uncommented to expose the gateway agent service on port 7800:

   ```yaml
   ingress-nginx:
     tcp:
       8200: "{{ .Release.Namespace }}/flow-repository:8200"
       8443: "{{ .Release.Namespace }}/flow-server:8443"
       7800: "{{ .Release.Namespace }}/gateway-external-agent-flow-agents:7800"
   ```

3. If you are not creating an ingress controller as part of the CloudBees CD/RO chart because you already have an existing ingress controller, make sure you have added a `tcp` config map to expose the ports and services above (for more details, refer to the Kubernetes documentation).
4. Install CloudBees CD/RO with your values file:

   ```bash
   helm repo add cloudbees https://public-charts.artifacts.cloudbees.com/repository/public/
   helm repo update
   helm install releaseName cloudbees/cloudbees-flow \
     -f valuesFile --namespace nameSpace --timeout 10000s
   ```

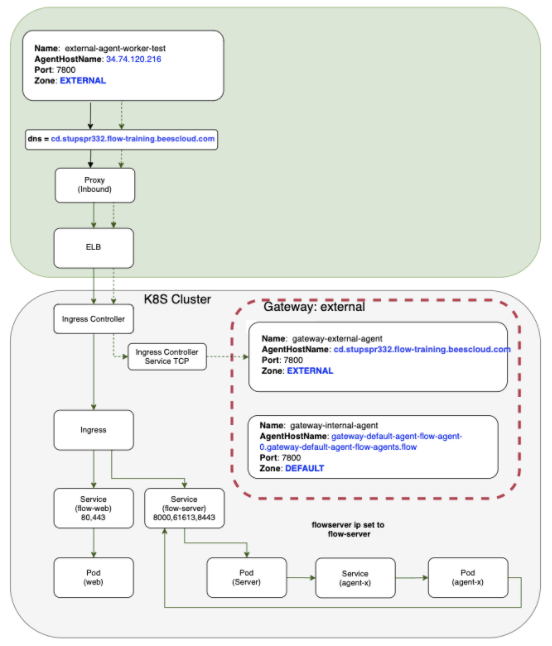

Installing gateway agents using the CloudBees CD/RO agent Helm chart

To create the gateway agent using the agent chart:

1. Create the server secret:

   ```bash
   # Flow server credentials to use in order to register
   # with agent as a resource on the Flow server.
   kubectl create secret generic flow-agent-secret \
     --from-literal=CBF_SERVER_USER='admin' \
     --from-literal=CBF_SERVER_PASSWORD=<flow-server-password> \
     -n <namespace>
   ```

2. Create a values file for the internal agent called `gateway-default-agent.yaml`:

   ```yaml
   resourceName: gateway-default-agent
   replicas: 1
   flowCredentials:
     existingSecret: flow-agent-secret
   service:
     publicHostName: gateway-default-agent-flow-agents
   ```

3. Install the gateway internal agent using `gateway-default-agent.yaml`:

   ```bash
   helm install gateway-default-agent cloudbees/cloudbees-flow-agent \
     -f gateway-default-agent.yaml -n <namespace>
   ```

4. Create a values file for the external agent called `gateway-external-agent.yaml` that adds `zoneName`, `publicHostName`, and the gateway configuration:

   ```yaml
   resourceName: gateway-external-agent
   zoneName: external
   service:
     # External DNS hostname that the external agents would use to communicate
     # with the external gateway agent
     publicHostName: my-flow.example.com
   # configure gateway using external gateway agent
   gateway:
     # configure gateway using this agent
     enabled: true
     # Name of the gateway to create
     name: external
     # Name of gateway agent to pair with as gateway resource 2
     pairedResourceName: gateway-default-agent
   flowCredentials:
     existingSecret: flow-agent-secret
   ```

5. Install the gateway external agent using `gateway-external-agent.yaml`:

   ```bash
   helm install gateway-external-agent cloudbees/cloudbees-flow-agent \
     -f gateway-external-agent.yaml -n <namespace>
   ```
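After either installation method, you can check from a machine outside the cluster whether the external gateway agent port is reachable. The following Bash sketch uses Bash's `/dev/tcp` redirection with the coreutils `timeout` command; the function name is hypothetical, and 7800 is the gateway agent port exposed above. A successful TCP connection only shows that the port is open, not that the agent behind it is healthy.

```bash
#!/usr/bin/env bash
# Hypothetical helper: report whether a TCP port accepts connections, using
# Bash's /dev/tcp redirection and the coreutils `timeout` command.
# An open port does not prove the agent behind it is healthy.
check_tcp_port() {
  local host="$1" port="$2" seconds="${3:-5}"
  if [[ -z "$host" || ! "$port" =~ ^[0-9]+$ || ! "$seconds" =~ ^[0-9]+$ ]]; then
    echo "usage: check_tcp_port <host> <port> [timeout-seconds]" >&2
    return 2
  fi
  # Open a connection on file descriptor 3 in a child Bash; it closes on exit.
  if timeout "$seconds" bash -c 'exec 3<>"/dev/tcp/$1/$2"' _ "$host" "$port" 2>/dev/null; then
    echo "open: ${host}:${port}"
    return 0
  fi
  echo "closed or unreachable: ${host}:${port}"
  return 1
}
```

For example, `check_tcp_port my-flow.example.com 7800` run from an external agent host should print `open: my-flow.example.com:7800` if the ingress exposes the gateway agent service.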

Autoscaling gateway agents

External gateway agents allow connectivity to be routed through gateways, either internally within your Kubernetes cluster or externally through an external endpoint. Typically, agents are included within the gateway configuration. Because each pod replica is registered individually as a resource and its gateway agent is created 1:1, each replica is a single point of failure.

This model is shown in the following example Kubernetes cluster using an external gateway agent:

Although this model generally provides internal and external connectivity, it doesn’t promote the goal of high availability. However, horizontally autoscaling your gateway agents allows your cluster to dynamically handle workloads or the loss of a gateway agent. CloudBees CD/RO provides this option for horizontal pod autoscaling (HPA) with HPA gateway agents.

| By default, the CloudBees CD/RO server does not include HPA gateway agents. You must manually configure gateway agent autoscaling. |

Configuring HPA gateway agents

Using HPA gateway agents allows you to distribute the cluster workload across one or more agent replicas, and keeps the gateway available if a replica fails. The following steps register your HPA gateway agents as services, using a service name instead of the resource names of individual pods.

The CloudBees CD/RO cloudbees-flow Helm chart is available in the public cloudbees-examples repository. CloudBees recommends saving its values in a local myvalues.yaml to retain them within your environment.

- In your CloudBees CD/RO cloudbees-flow Helm chart, add the following:

  ```yaml
  internalGatewayAgent:
    enabled: true
    autoscaling:
      enabled: true
      minReplicas: 1
      maxReplicas: 2
      targetCPUUtilizationPercentage: 80
      targetMemoryUtilizationPercentage: 80
  # cloudbees-flow-agent chart configurations used for creating the
  # external agent for the gateway
  externalGatewayAgent:
    enabled: true
    autoscaling:
      enabled: true
      minReplicas: 1
      maxReplicas: 2
      targetCPUUtilizationPercentage: 80
      targetMemoryUtilizationPercentage: 80
  ```

- For internalGatewayAgent, configure the following for your cluster:

  - minReplicas
  - maxReplicas
  - targetCPUUtilizationPercentage
  - targetMemoryUtilizationPercentage

  For HPA gateway agents to work, internalGatewayAgent.enabled and internalGatewayAgent.autoscaling.enabled must be set to true.

- For externalGatewayAgent, configure the following for your cluster:

  - minReplicas
  - maxReplicas
  - targetCPUUtilizationPercentage
  - targetMemoryUtilizationPercentage

  For HPA gateway agents to work, externalGatewayAgent.enabled and externalGatewayAgent.autoscaling.enabled must be set to true.
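When choosing minReplicas, maxReplicas, and the utilization targets, it can help to approximate what the Kubernetes HorizontalPodAutoscaler does with them. The following Python sketch applies the documented HPA rule, desiredReplicas = ceil(currentReplicas × currentUtilization / targetUtilization), to each metric. It takes the largest proposal and clamps it to the replica bounds. This is a simplified model and not part of CloudBees CD/RO. The function name is hypothetical, and the 0.1 tolerance mirrors the controller's default. The sketch ignores stabilization windows, pod readiness, and missing metrics.

```python
import math


def desired_replicas(current_replicas: int,
                     utilization: dict,
                     targets: dict,
                     min_replicas: int = 1,
                     max_replicas: int = 2,
                     tolerance: float = 0.1) -> int:
    """Approximate the HPA replica recommendation for utilization-based metrics.

    utilization and targets map a metric name ("cpu", "memory") to the average
    utilization percentage of the pods' resource requests.
    """
    proposals = []
    for metric, target in targets.items():
        ratio = utilization[metric] / target
        if abs(ratio - 1.0) <= tolerance:
            # Within tolerance: no change is proposed for this metric.
            proposals.append(current_replicas)
        else:
            proposals.append(math.ceil(current_replicas * ratio))
    # With several metrics, HPA uses the largest proposal, then applies the bounds.
    return max(min_replicas, min(max_replicas, max(proposals)))


# Hypothetical readings against the 80% targets from the values above.
targets = {"cpu": 80, "memory": 80}
print(desired_replicas(1, {"cpu": 95, "memory": 40}, targets))  # → 2
print(desired_replicas(2, {"cpu": 30, "memory": 30}, targets))  # → 1
```

With maxReplicas: 2, even a large spike cannot push a gateway agent past two replicas. Raise maxReplicas if you expect sustained load above the targets.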

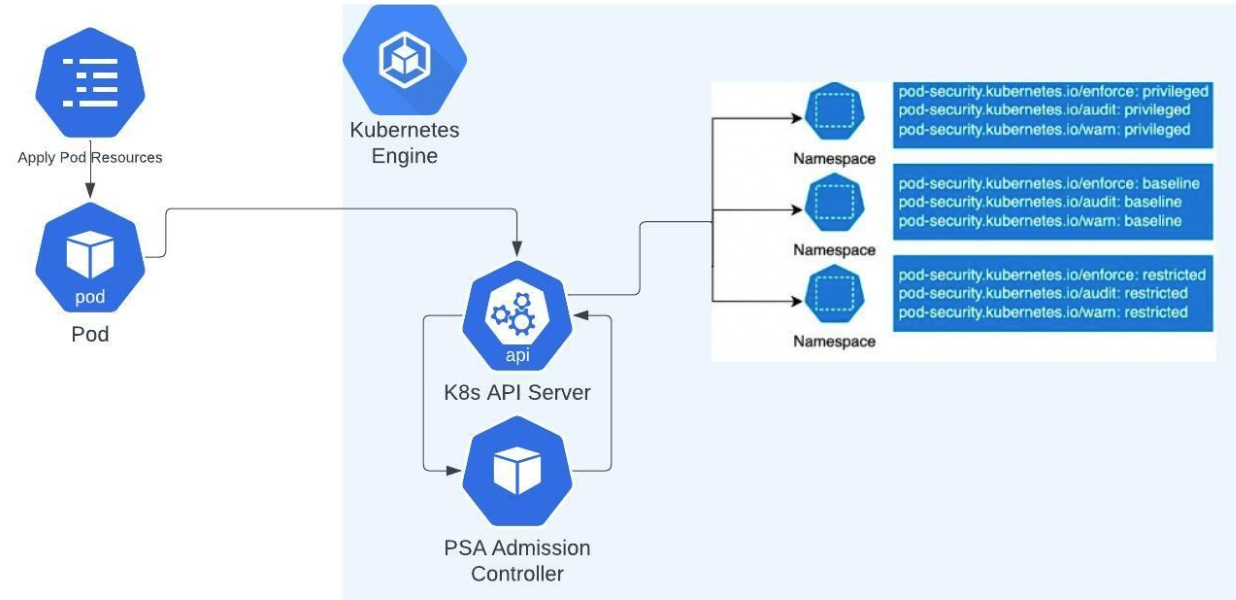

How to use Kubernetes Pod Security Standards

As of Kubernetes version 1.25, you can use Pod Security Standards (PSS) to enforce hardening policies in your cluster through the built-in Pod Security Admission controller, which is stable in that release. You apply consistent baselines by adding labels to your Kubernetes namespaces, as shown in the following cluster example:

| PSS replaces the deprecated Pod Security Policies (PodSecurityPolicy was removed in Kubernetes 1.25) for enforcing restrictions on pod behavior, and should be used in Kubernetes clusters running version 1.25 and later. |

Pod Security Standards

You can configure three standards, or levels, for pods using the Pod Security Admission controller:

- Privileged: An allow-by-default policy that provides the widest possible level of permissions. This policy allows known privilege escalations and is typically reserved for privileged, trusted users.
- Baseline: A minimally restrictive policy that prevents known privilege escalations. This policy allows the default (minimally specified) pod configuration and is typically used for non-critical applications.
- Restricted: A heavily restricted policy that follows current pod hardening best practices. This policy is typically used for security-critical applications and lower-trust users.

For more information, refer to the Kubernetes Pod Security Standards documentation.

These policies are applied to namespaces with labels, using three modes. The enforce mode rejects pods that violate the policy. The warn mode returns a user-facing warning. The audit mode records the violation in the audit log. Depending on your needs, you can label a namespace with multiple modes, each with its own policy level. For example, you can configure a namespace to enforce the baseline policy while only warning about violations of the restricted policy. This approach enforces minimal protections while identifying areas you can improve before you enforce the restricted standard.
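The interaction between modes can be modeled in a few lines. The following Python sketch is illustrative only, and its function names are hypothetical. It assumes you already know the most restrictive PSS level a pod satisfies; the real admission controller checks individual pod fields. It also assumes no cluster-wide defaults are configured. For a namespace labeled to enforce baseline and warn at restricted, it reports which modes each kind of pod would trigger.

```python
# Illustrative model of Pod Security Admission modes (not the real controller).
PREFIX = "pod-security.kubernetes.io/"
LEVELS = ["privileged", "baseline", "restricted"]  # least to most restrictive


def violates(pod_level: str, policy_level: str) -> bool:
    """A pod that satisfies a level also satisfies every less restrictive level."""
    return LEVELS.index(pod_level) < LEVELS.index(policy_level)


def triggered_modes(pod_level: str, namespace_labels: dict) -> set:
    """Return the modes (enforce, warn, audit) whose policy level the pod violates."""
    triggered = set()
    for mode in ("enforce", "warn", "audit"):
        policy_level = namespace_labels.get(PREFIX + mode)
        # An unlabeled mode imposes no restriction in this model.
        if policy_level is not None and violates(pod_level, policy_level):
            triggered.add(mode)
    return triggered


labels = {PREFIX + "enforce": "baseline", PREFIX + "warn": "restricted"}
for pod_level in LEVELS:
    print(pod_level, sorted(triggered_modes(pod_level, labels)))
```

In this model, a pod that meets only the baseline level is admitted but triggers the warn mode. That warning is the signal to harden the pod before you switch enforce to restricted.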

Applying pod security context in CloudBees CD/RO Helm charts

A security context defines privilege and access control settings for a pod or container at runtime. To use security contexts in CloudBees CD/RO, you must configure securityContext in the CloudBees CD/RO server and agent Helm charts.

By default, the CloudBees CD/RO server and agent Helm charts set securityContext.enabled=false, so you must enable this setting explicitly.

To enable this setting:

- Open your myvalues.yaml for cloudbees-flow or cloudbees-flow-agent.
- Change securityContext.enabled=false to securityContext.enabled=true.
- Configure additional project-specific settings for securityContext as needed. Refer to the Kubernetes Pod Security Standards documentation for more information.

The following configurations are available for CloudBees CD/RO v2023.12.0 and later Helm charts:

Pod security context configurations (v2023.12.0 and later):

```yaml
### --------------------------------------------
### Pods security context
### ---------------------------------------------
## Requires `securityContext.enabled=true` to apply `securityContext` settings for pod spec.
## Ref: https://kubernetes.io/docs/tasks/configure-pod-container/security-context/
securityContext:
  enabled: false
  fsGroup: 1000
  runAsUser: 1000
  ## Configure pod security context, which is applied to the pod spec.
  ## Ref: https://kubernetes.io/docs/reference/generated/kubernetes-api/v1.28/#podsecuritycontext-v1-core
  # fsGroup: 1000
  # fsGroupChangePolicy: OnRootMismatch
  # runAsGroup: 1000
  # runAsNonRoot: true
  # runAsUser: 1000
  # seLinuxOptions: {}
  # seccompProfile: {}
  # supplementalGroups:
  # sysctls:

## Requires `securityContext.enabled=true` to apply `containerSecurityContext` settings for containers.
containerSecurityContext: {}
  ## Configure container security context, which is applied to containers.
  ## Ref: https://kubernetes.io/docs/reference/generated/kubernetes-api/v1.28/#securitycontext-v1-core
  # allowPrivilegeEscalation: false
  # capabilities:
  #   drop: [ "ALL" ]
  # privileged: false
  # procMount: "Default"
  # readOnlyRootFilesystem: true
  # runAsGroup: 1000
  # runAsNonRoot: true
  # runAsUser: 1000
  # seLinuxOptions: {}
  # seccompProfile: {}
```

The following child Helm charts also support security context configurations for CloudBees CD/RO v2023.12.0 and later:

ZooKeeper

To configure a security context for ZooKeeper, navigate to the ZooKeeper section of your myvalues.yaml and add the following fields:

```yaml
zookeeper:
  securityContext:
    enabled: true
    fsGroup: 1000
  containerSecurityContext: {}
```

MariaDB

To configure a security context for MariaDB, navigate to the MariaDB section of your myvalues.yaml and add the following fields:

```yaml
mariadb:
  securityContext:
    enabled: true
    fsGroup: 1000
  containerSecurityContext: {}
```

Bound agents

To configure a security context for bound agents, navigate to the bound agents section of your myvalues.yaml and add the following fields:

```yaml
boundAgent:
  securityContext:
    enabled: true
    fsGroup: 1000
  containerSecurityContext: {}
```

CloudBees CD/RO agents

To configure a security context for CloudBees CD/RO agents, add the following fields to your agent myvalues.yaml:

```yaml
securityContext:
  enabled: true
  fsGroup: 1000
  runAsUser: 1000
containerSecurityContext: {}
```
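Before labeling a namespace with enforce=restricted, you may want to check how far your securityContext and containerSecurityContext values are from the Restricted profile. The following Python sketch checks a subset of the Restricted requirements listed in the Kubernetes Pod Security Standards documentation: privileged mode, privilege escalation, non-root execution, the seccomp profile, and capabilities. The helper is hypothetical and not part of the CloudBees CD/RO charts. It does not cover every control, such as volume types or host namespaces. Pass it the pod-level and container-level fields from your myvalues.yaml as dictionaries, without the chart-specific enabled flag.

```python
def effective(key: str, pod_ctx: dict, container_ctx: dict):
    """Container-level settings override pod-level settings for shared fields."""
    return container_ctx[key] if key in container_ctx else pod_ctx.get(key)


def restricted_gaps(pod_ctx: dict, container_ctx: dict) -> list:
    """List the checked Restricted-profile requirements that the contexts do not meet."""
    gaps = []
    if container_ctx.get("privileged", False):
        gaps.append("privileged must be false")
    if container_ctx.get("allowPrivilegeEscalation") is not False:
        gaps.append("allowPrivilegeEscalation must be false")
    if effective("runAsNonRoot", pod_ctx, container_ctx) is not True:
        gaps.append("runAsNonRoot must be true")
    if effective("runAsUser", pod_ctx, container_ctx) == 0:
        gaps.append("runAsUser must not be 0")
    seccomp = effective("seccompProfile", pod_ctx, container_ctx) or {}
    if seccomp.get("type") not in ("RuntimeDefault", "Localhost"):
        gaps.append("seccompProfile.type must be RuntimeDefault or Localhost")
    capabilities = container_ctx.get("capabilities") or {}
    if "ALL" not in capabilities.get("drop", []):
        gaps.append("capabilities.drop must include ALL")
    if set(capabilities.get("add", [])) - {"NET_BIND_SERVICE"}:
        gaps.append("capabilities.add may only contain NET_BIND_SERVICE")
    return gaps


# Pod-level fields from the chart values shown earlier, with containerSecurityContext: {}
for gap in restricted_gaps({"fsGroup": 1000, "runAsUser": 1000}, {}):
    print("-", gap)
```

Run against the uncommented chart values, the sketch reports four gaps. Uncommenting runAsNonRoot, allowPrivilegeEscalation, and capabilities.drop, and setting a seccompProfile type such as RuntimeDefault, clears them in this model.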

Applying Pod Security Admission to namespaces

To add or modify Kubernetes Pod Security Admission labels on a namespace, use:

```shell
kubectl label --overwrite ns <namespace-to-modify> pod-security.kubernetes.io/<mode-of-coverage>=<policy-level>
```

Where:

- <namespace-to-modify>: The namespace whose labels you want to add or modify.
- <mode-of-coverage>: The mode you want to apply: enforce, warn, or audit.
- <policy-level>: The PSS policy you want to apply: privileged, baseline, or restricted.

For example, to create a new namespace called mynamespace that enforces the baseline level and warns at the restricted level:

```shell
kubectl create namespace mynamespace
kubectl label --overwrite ns mynamespace pod-security.kubernetes.io/enforce=baseline
kubectl label --overwrite ns mynamespace pod-security.kubernetes.io/warn=restricted
```
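If you script namespace setup, validating the mode and level before calling kubectl catches typos such as resricted early. The following Python sketch builds, but does not run, the label commands from the mynamespace example. The function name is hypothetical. The command format is the one shown above.

```python
import shlex

MODES = ("enforce", "warn", "audit")
LEVELS = ("privileged", "baseline", "restricted")


def pss_label_command(namespace: str, mode: str, level: str) -> list:
    """Build (but do not run) a kubectl command that sets one PSS namespace label."""
    if mode not in MODES:
        raise ValueError(f"unknown mode {mode!r}; expected one of {MODES}")
    if level not in LEVELS:
        raise ValueError(f"unknown policy level {level!r}; expected one of {LEVELS}")
    return [
        "kubectl", "label", "--overwrite", "ns", namespace,
        f"pod-security.kubernetes.io/{mode}={level}",
    ]


# Print the two label commands for the mynamespace example.
for mode, level in (("enforce", "baseline"), ("warn", "restricted")):
    print(shlex.join(pss_label_command("mynamespace", mode, level)))
```

To apply a command, you could pass the returned list to subprocess.run(cmd, check=True) on a machine where kubectl is configured for your cluster.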

Applying different Pod Security Standards versions

By default, when you apply a PSS level to a mode with a pod-security.kubernetes.io/<mode-of-coverage> label, the latest version of the policy is used. However, you can also pin a mode to the PSS definition from a specific Kubernetes version with:

```shell
kubectl label --overwrite ns mynamespace pod-security.kubernetes.io/<mode-of-coverage>-version=<K8s-version>
```

Where:

- <mode-of-coverage>: The mode you want to apply: enforce, warn, or audit.
- <K8s-version>: The Kubernetes version of PSS to use, such as v1.25.

For example, to create a new namespace called mynamespace that enforces the baseline level as defined in Kubernetes version 1.25:

```shell
kubectl create namespace mynamespace
kubectl label --overwrite ns mynamespace pod-security.kubernetes.io/enforce=baseline
kubectl label --overwrite ns mynamespace pod-security.kubernetes.io/enforce-version=v1.25
```
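Per the Kubernetes Pod Security Admission documentation, the -version label takes either latest or a Kubernetes minor version such as v1.25. The following Python sketch builds the level label and its matching -version label for one mode. It rejects malformed versions, such as 1.25 without the leading v, using a simplified format check. The function name is hypothetical. Because kubectl label accepts several key=value pairs in one call, the sketch joins both labels into a single command.

```python
import re

MODES = ("enforce", "warn", "audit")
# Simplified format check: "latest" or a minor version such as "v1.25".
VERSION_PATTERN = re.compile(r"latest|v\d+\.\d+")


def pss_labels(mode: str, level: str, version: str = "latest") -> dict:
    """Return the level label and its -version label for one PSS mode."""
    if mode not in MODES:
        raise ValueError(f"unknown mode {mode!r}")
    if not VERSION_PATTERN.fullmatch(version):
        raise ValueError(f"invalid version {version!r}; use 'latest' or, e.g., 'v1.25'")
    key = f"pod-security.kubernetes.io/{mode}"
    return {key: level, f"{key}-version": version}


labels = pss_labels("enforce", "baseline", "v1.25")
args = ["kubectl", "label", "--overwrite", "ns", "mynamespace"]
args += [f"{key}={value}" for key, value in labels.items()]
print(" ".join(args))
```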

How to configure agent resource name templates

Generally, you set the name of the agent resource with the agent.resourceName value, but this works only when the agent deployment has a single replica. With multiple replicas, each replica tries to register itself using the same resource name, so only one replica from the entire deployment is registered as a resource.

The resource name defaults to the hostname, but other template values are available to fully qualify the resource:

- Ordinary Helm template values, for example {{ .Release.Name }}, {{ .Release.Namespace }}, and so on.
- In addition, two special template values are allowed:
  - {{ hostname }}: The actual resource hostname.
  - {{ ordinalIndex }}: The serial replica index in the StatefulSet.

For example:

```yaml
resourceName: "myResource - {{ .Release.Name }} - {{ ordinalIndex }}"
```
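To see why {{ ordinalIndex }} or {{ hostname }} matters, the following Python sketch renders a resourceName template for each replica. It is a hypothetical stand-in for the chart's own rendering and supports only the four template values listed above. The release name flow-agents and namespace cloudbees are assumed examples, and the sketch assumes pod hostnames follow the StatefulSet pattern <statefulset-name>-<ordinal>. Without a per-replica value, every replica renders the same name, which is the registration conflict described above.

```python
import re

PLACEHOLDER = re.compile(r"\{\{\s*(.+?)\s*\}\}")


def render_resource_name(template: str, release_name: str, release_namespace: str,
                         hostname: str, ordinal_index: int) -> str:
    """Substitute the supported template values into a resourceName template."""
    values = {
        ".Release.Name": release_name,
        ".Release.Namespace": release_namespace,
        "hostname": hostname,
        "ordinalIndex": str(ordinal_index),
    }

    def substitute(match):
        key = match.group(1)
        if key not in values:
            raise KeyError(f"unsupported template value: {key}")
        return values[key]

    return PLACEHOLDER.sub(substitute, template)


def names_for_replicas(template: str, replicas: int) -> list:
    """Render the template for replicas 0..replicas-1 of a hypothetical release."""
    return [
        render_resource_name(template, "flow-agents", "cloudbees", f"flow-agents-{i}", i)
        for i in range(replicas)
    ]


print(names_for_replicas("myResource - {{ .Release.Name }} - {{ ordinalIndex }}", 3))
print(names_for_replicas("myResource - {{ .Release.Name }}", 3))
```

The first template yields three distinct names. The second yields the same name three times, so only one replica could register.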

How to pre-provision volume snapshots as a PVC in StatefulSets

This how-to describes using a pre-provisioned volume snapshot as storage for a Kubernetes StatefulSet by exposing the snapshot as a PersistentVolume (PV) that is bound to a PersistentVolumeClaim (PVC). The instructions for these actions may differ depending on your cloud provider; however, the general steps are:

- Create a PVC manifest.
- Create a PV manifest that references your PVC and snapshot.
- Apply these manifests to your cluster.
- Test your cluster to ensure the PV and PVC are present with the desired values.

Although this how-to describes the steps for CloudBees Analytics (flow-analytics), you can adapt them to other CloudBees CD/RO components.

- Create a PVC manifest (pvc.yaml):

  ```yaml
  apiVersion: v1
  kind: PersistentVolumeClaim
  metadata:
    name: PVCNAME
  spec:
    storageClassName: STORAGE_CLASS
    accessModes:
      - ReadWriteOnce
    resources:
      requests:
        storage: STORAGE_SIZE
  ```

  For the metadata.name of a CloudBees Analytics PVC, CloudBees recommends:

  - analytics-data-flow-analytics-0

  This is based on the convention <volume-name>-<pod-name-with-index>, where:

  - <volume-name> is analytics-data, the default value of .Values.storage.volumes.analyticsStorage.name.
  - <pod-name-with-index> is flow-analytics-0, the default pod name for the CloudBees Analytics server with its 0 index.

- Create a PV manifest (pv.yaml) that references the PVCNAME from your PVC manifest and your snapshot. The following example is based on GCP’s gcePersistentDisk; use the format required by your provider to reference your snapshot.

  ```yaml
  apiVersion: v1
  kind: PersistentVolume
  metadata:
    name: PVNAME
  spec:
    storageClassName: STORAGE_CLASS
    capacity:
      storage: STORAGE_SIZE
    accessModes:
      - ReadWriteOnce
    claimRef:
      namespace: NAMESPACE
      name: PVCNAME
    # Use the directive from your provider to reference your snapshot
    gcePersistentDisk:
      pdName: CLOUD_DISK_NAME
      fsType: ext4
  ```

- Update your cluster with the manifest files:

  ```shell
  # Apply the PVC (pvc.yaml) and PV (pv.yaml) to your cluster:
  kubectl apply -f pvc.yaml -f pv.yaml
  ```

- Assign the following variables (shell assignments must not have spaces around =):

  ```shell
  pvcName="<PVCNAME-from-pvc.yaml>"
  pvName="<PVNAME-from-pv.yaml>"
  ```

- Check if the PV is available in your cluster and has the desired values:

  ```shell
  kubectl get pv "$pvName"
  ```

- Check if the PVC is available in your cluster and has the desired values:

  ```shell
  kubectl get pvc "$pvcName"
  ```
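Two details in these steps are easy to get wrong. First, the PVC name must match the name the StatefulSet controller looks for. Second, the PV's claimRef, storage class, and access modes must line up with the PVC. Kubernetes names PVCs created from a StatefulSet's volumeClaimTemplates as <template-name>-<pod-name>, and StatefulSet pods are named <statefulset-name>-<ordinal>. That is why analytics-data-flow-analytics-0 is recommended. The following Python sketch checks both points on manifests loaded as dictionaries, for example with a YAML parser. The function names are hypothetical, and the StatefulSet name flow-analytics is inferred from the default pod name. The check is deliberately partial; for example, it does not compare capacities.

```python
def statefulset_pvc_name(volume_name: str, statefulset_name: str, ordinal: int) -> str:
    """PVC name the StatefulSet controller expects: <volume-name>-<statefulset-name>-<ordinal>."""
    return f"{volume_name}-{statefulset_name}-{ordinal}"


def pv_pvc_mismatches(pv: dict, pvc: dict) -> list:
    """Return readable mismatches between a PV manifest and the PVC it should bind to."""
    problems = []
    pv_spec, pvc_spec = pv["spec"], pvc["spec"]
    claim_ref = pv_spec.get("claimRef", {})
    if claim_ref.get("name") != pvc["metadata"]["name"]:
        problems.append("PV claimRef.name does not match the PVC name")
    if pv_spec.get("storageClassName") != pvc_spec.get("storageClassName"):
        problems.append("storageClassName differs between PV and PVC")
    if not set(pvc_spec.get("accessModes", [])) <= set(pv_spec.get("accessModes", [])):
        problems.append("PV does not offer every access mode the PVC requests")
    return problems


pvc_name = statefulset_pvc_name("analytics-data", "flow-analytics", 0)
pvc = {
    "metadata": {"name": pvc_name},
    "spec": {"storageClassName": "STORAGE_CLASS", "accessModes": ["ReadWriteOnce"],
             "resources": {"requests": {"storage": "STORAGE_SIZE"}}},
}
pv = {
    "metadata": {"name": "PVNAME"},
    "spec": {"storageClassName": "STORAGE_CLASS", "accessModes": ["ReadWriteOnce"],
             "claimRef": {"namespace": "NAMESPACE", "name": pvc_name}},
}
print(pvc_name, pv_pvc_mismatches(pv, pvc))
```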

How to configure SMTP proxy servers for CloudBees CD/RO on Kubernetes

For CloudBees CD/RO installations on Kubernetes, you can configure an SMTP proxy server by passing its configuration as server.ecconfigure values in the cloudbees-flow Helm chart. These configurations are added to the CloudBees CD/RO wrapper.conf and applied during installation.