More and more enterprises in regulated industries, such as financial services and healthcare, have strict auditing guidelines around data retention. For example, some agencies need to maintain records for seven years for compliance purposes. As CloudBees CD/RO is used, runtime data accumulates in its databases, which can erode performance and create data storage challenges. CloudBees CD/RO data retention provides a way for the enterprise to manage its data archival and purge needs for runtime objects.

Data retention in CloudBees CD/RO includes support for these concepts:

-

Support for data archiving: The process of copying data to storage external to the CloudBees CD/RO server. Available via either UI or API.

-

Support for data purging: The process of deleting data from the CloudBees CD/RO server. Available via either UI or API.

-

Data retention policies: Data archive and purge criteria, based on object type. Configure via either UI or API.

-

Archive connectors: Specifications of the target archival systems. Configure via API, only.

Key benefits of data retention

CloudBees CD/RO data retention provides the following key benefits to your organization:

-

Performance and cost benefits: Archiving infrequently accessed data to secondary storage optimizes application performance. Systematically removing or purging data that is no longer needed from the system helps improve performance and saves disk space.

-

Regulatory compliance: Enterprises in regulated industries, such as financial services, are required to retain data for certain lengths of time for regulatory compliance.

-

Internal corporate policy compliance: Organizations may need to retain historical data for audit purposes or to comply with corporate data retention policies.

-

Business intelligence and analytics: Organizations want to use archived information in potentially new and unanticipated ways, for example, in retrospective analytics and machine learning.

Planning your data retention strategy

-

Decide which objects to include in your retention strategy. Supported objects include:

-

Releases

-

Pipeline runs

-

Deployments

-

Jobs

-

CloudBees CI builds

-

-

Decide on data retention server settings. For more information, refer to Setting up data retention.

Keeping in mind the amount and frequency of data you wish to process, configure CloudBees CD/RO server settings to handle the rate.

-

Decide the archive criteria for each object type. For more information, refer to Manage data retention policies.

-

List of projects to which the object can belong.

-

List of completed statuses for the object based on the object type. Active objects cannot be archived.

-

Look-back time frame of completed status.

-

Action: archive only, purge only, purge after archive.

-

-

Decide on the archive storage system. For more information, refer to Managing archive connectors.

The choice of archive storage systems may be driven by the enterprise’s data retention requirements. For example, regulatory compliance might require a WORM-compliant storage that prevents altering data, while data analytics might require a different kind of storage that allows for easy and flexible data retrieval. Based on your archival requirements, you can create an archive connector into which the CloudBees CD/RO data archiving process feeds data.

Archive storage system types include:

-

Cloud-based data archiving solutions: AWS S3, AWS Glacier, Azure Archive Storage, and so on.

-

WORM-compliant archive storage: NetApp.

-

Analytics and reporting system: Elasticsearch, Splunk, and so on.

-

Traditional disk-based storage.

-

RDBMS and NoSQL databases.

-

Setting up data retention

To set up data retention for your CloudBees CD/RO server, you must perform the following:

-

Create your data retention rules. For more information, refer to Manage data retention policies.

-

For archiving: An archive connector needs to be established. For more information, refer to Managing archive connectors.

The following CloudBees CD/RO server settings relate to data retention. To access the server settings from CloudBees CD/RO:

-

From the CloudBees navigation, select CloudBees CD/RO.

-

From the main menu, go to . The Edit Data Retention page displays.

Related settings include:

| Setting Name | Description |
|---|---|
| Enable Data Retention Management | When enabled, the data retention management service is run periodically to archive or purge data based on the defined data retention policy. Property name: Type: Boolean |
| Data Retention Management service frequency in minutes | Controls how often, in minutes, the data retention management service is scheduled to run. Default: Property name: Type: Number of minutes |
| Data Retention Management batch size | Number of objects to process as a batch for a given data retention policy in one iteration. Default: Property name: Type: Number |
| Maximum iterations in a Data Retention Management cycle | Maximum number of iterations in a scheduled data archive and purge cycle. Default: Property name: Type: Number |
| Number of minutes after which archived data may be purged | Number of minutes after which archived data may be purged if the data retention rule is set up to purge after archiving. Default: Property name: Type: Number of minutes |

The Cleanup Associated Workspaces feature adds to the time the purge process takes to complete.

The Cleanup Associated Workspaces feature should not be used if you are running CloudBees CD/RO versions 10.3.5 or earlier, as the feature can cause performance issues on your instance.

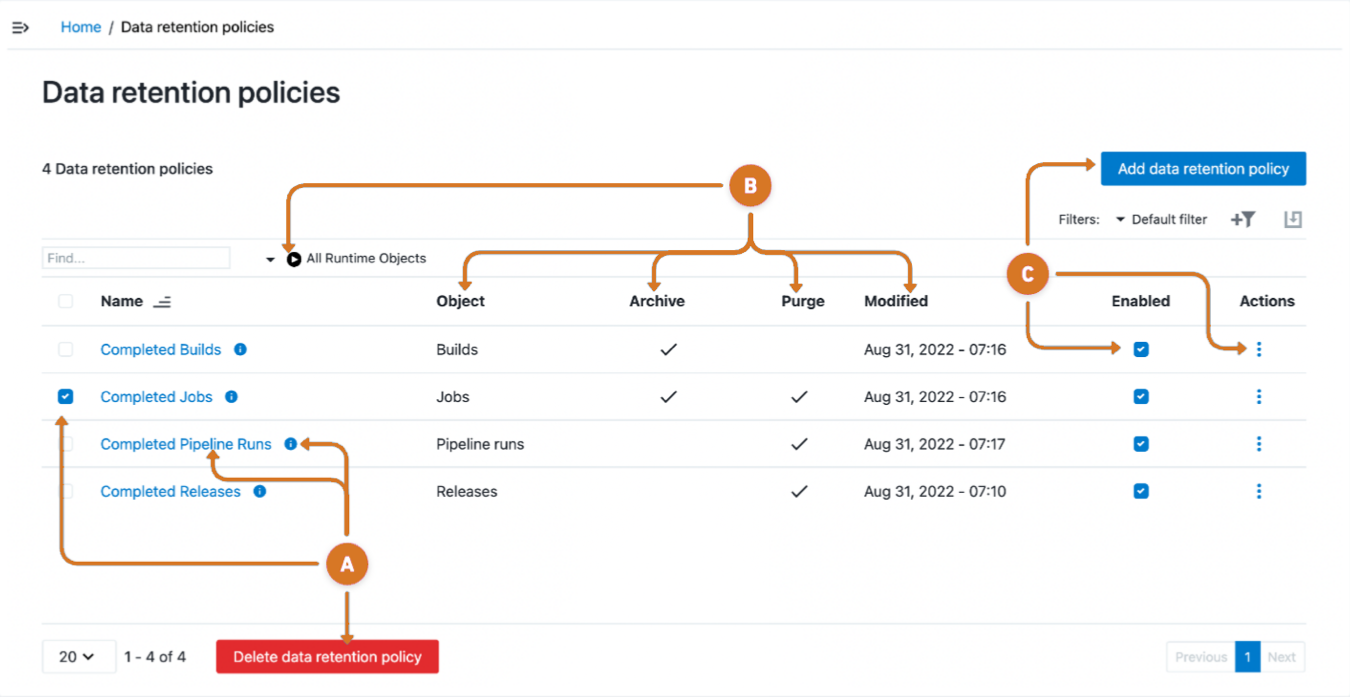
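The frequency, batch size, and maximum iterations settings together bound how much data a single policy can process. The following is a minimal sketch of that arithmetic, assuming each cycle handles at most batch size times maximum iterations objects per policy. The function name and the example values are hypothetical and are not product defaults.

```shell
#!/bin/sh
# Rough upper bound on how many objects one policy can process per day.
# Assumption: one cycle processes at most BATCH_SIZE * MAX_ITERATIONS objects.

# max_objects_per_day FREQUENCY_MINUTES BATCH_SIZE MAX_ITERATIONS
max_objects_per_day() {
  frequency_minutes=$1
  batch_size=$2
  max_iterations=$3
  cycles_per_day=$(( 1440 / frequency_minutes ))   # 1440 minutes in a day
  echo $(( cycles_per_day * batch_size * max_iterations ))
}

# Example (hypothetical values): run every 60 minutes, 100 objects per batch,
# 10 iterations per cycle.
max_objects_per_day 60 100 10   # prints 24000
```

If your system completes more objects per day than this bound, the backlog of objects waiting to be archived or purged keeps growing, which suggests increasing one of the three settings.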

Manage data retention policies

From the CloudBees CD/RO UI, browse to https://<cloudbees-cd-server>/ and select from the main menu.

Use the information detailed below to view and manage data retention policies.

-

Manage data retention policies by:

-

Remove retention policies.

-

Select policy checkbox

-

Select Delete data retention policy.

-

-

Edit a data retention policy.

-

Select the policy name link.

-

Select policy information.

-

-

-

Review the following retention policy information:

-

Filter retention policies using runtime objects by selecting Run.

-

Review the retention policy Object, Archive, Purge or Modified column data.

-

-

Configure a data retention policy by:

-

Creating a new data retention policy by selecting Add a data retention policy

-

Activating the policy by selecting Enabled.

-

Modify a retention policy by selecting the policy three-dots menu and a configuration option.

View modification options.

-

Edit: Use to modify a retention policy.

-

DSL Export: Use to download a policy in DSL format.

-

Preview: Use to view a list of objects to be archived or purged.

-

Delete: Use to remove the retention policy.

-

-

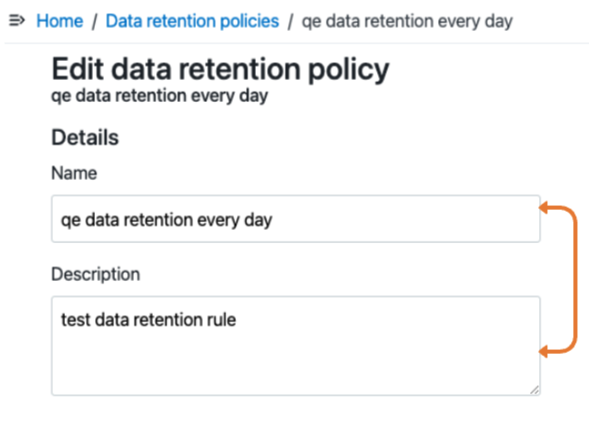

Edit a data retention policy

Access policy modification features from the Data retention policies page by:

-

Selecting the data retention policy name link or policy information.

-

Selecting Edit from the three-dots menu.

Data retention policy - Details

Use the information detailed below to edit data retention policy details and definitions.

Modify policy details.

-

Type a name for the data retention policy.

-

Type a description for the data retention policy.

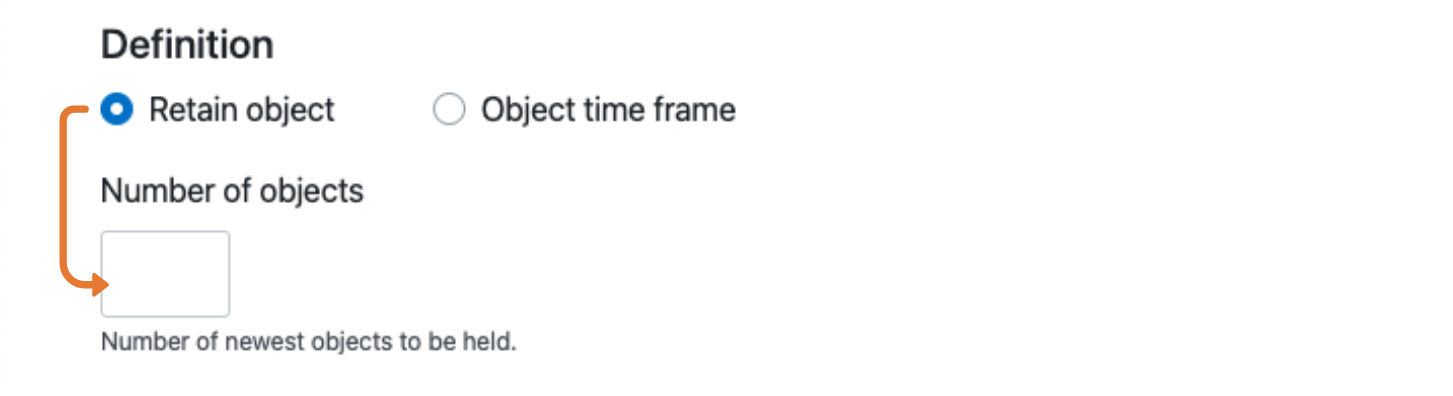

Data retention policy - Definition

Define how many objects to retain.

Identify the number of objects to retain.

-

Select Retain object to identify the number of objects to retain.

-

Enter the number of the newest, most recent objects to hold into the Number of objects field. For example, if the policy is to hold the 100 most recent objects, enter 100. The result is that the latest 100 objects, chronologically, are always stored, while other data beyond this count is subject to the Action defined below.

Figure 4. Edit data retention policy - Definition - Object time frame

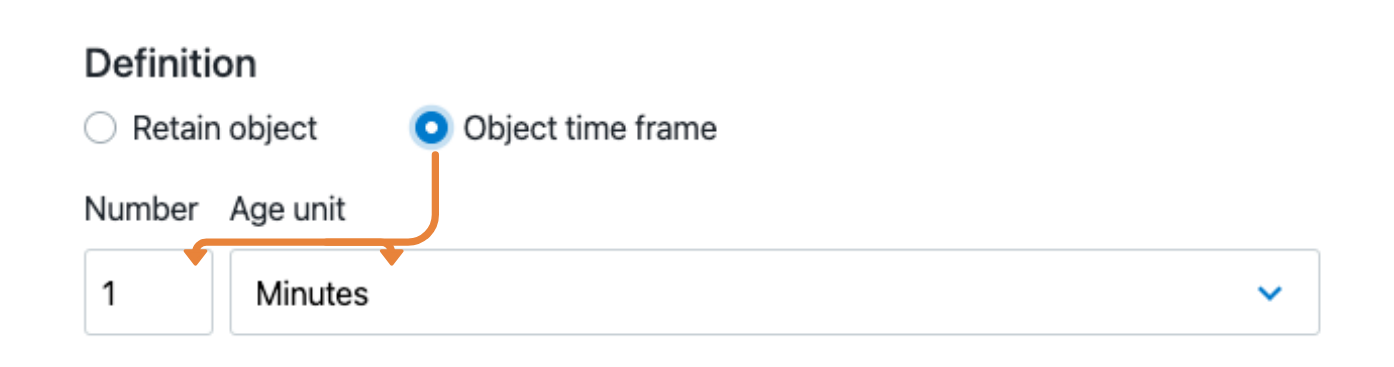

Define the timeframe for the policy.

-

Select Object time frame.

The age and ageUnit fields together define a time threshold from the current date, determining which data to preserve or act upon based on its age. When the data retention process is initiated, it uses the current date and time as its reference point ("now"). With the age and ageUnit fields, you define a length of time before "now" instead of specifying a specific date.

-

Enter the time-based number that defines the timeframe for this policy into the Number field.

-

Select the time unit (Minutes, Hours, Days, Weeks, Months, or Years) from the Age unit field to complete the time threshold for data retention.

Figure 5. Edit data retention policy - Define remaining definition fields
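To make the relationship between "now", age, and ageUnit concrete, the following sketch computes the cutoff time as an epoch timestamp. It is only an illustration of the arithmetic, not the server's implementation; the function name cutoff_epoch is hypothetical, and the calendar-dependent Months and Years units are deliberately left out.

```shell
#!/bin/sh
# cutoff_epoch NOW_EPOCH AGE AGE_UNIT
# Prints the epoch second that lies AGE units before NOW_EPOCH.
# Objects completed before this moment are older than the threshold.
cutoff_epoch() {
  now=$1
  age=$2
  case "$3" in
    minutes) unit_seconds=60 ;;
    hours)   unit_seconds=3600 ;;
    days)    unit_seconds=86400 ;;
    weeks)   unit_seconds=604800 ;;
    *) echo "unsupported unit: $3" >&2; return 1 ;;
  esac
  echo $(( now - age * unit_seconds ))
}

# Example: age=3 and ageUnit=days, evaluated at the current time.
cutoff_epoch "$(date +%s)" 3 days
```

Because the threshold is relative, the same policy selects a different set of objects each time it runs.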

Select the attributes for the remaining definition fields.

-

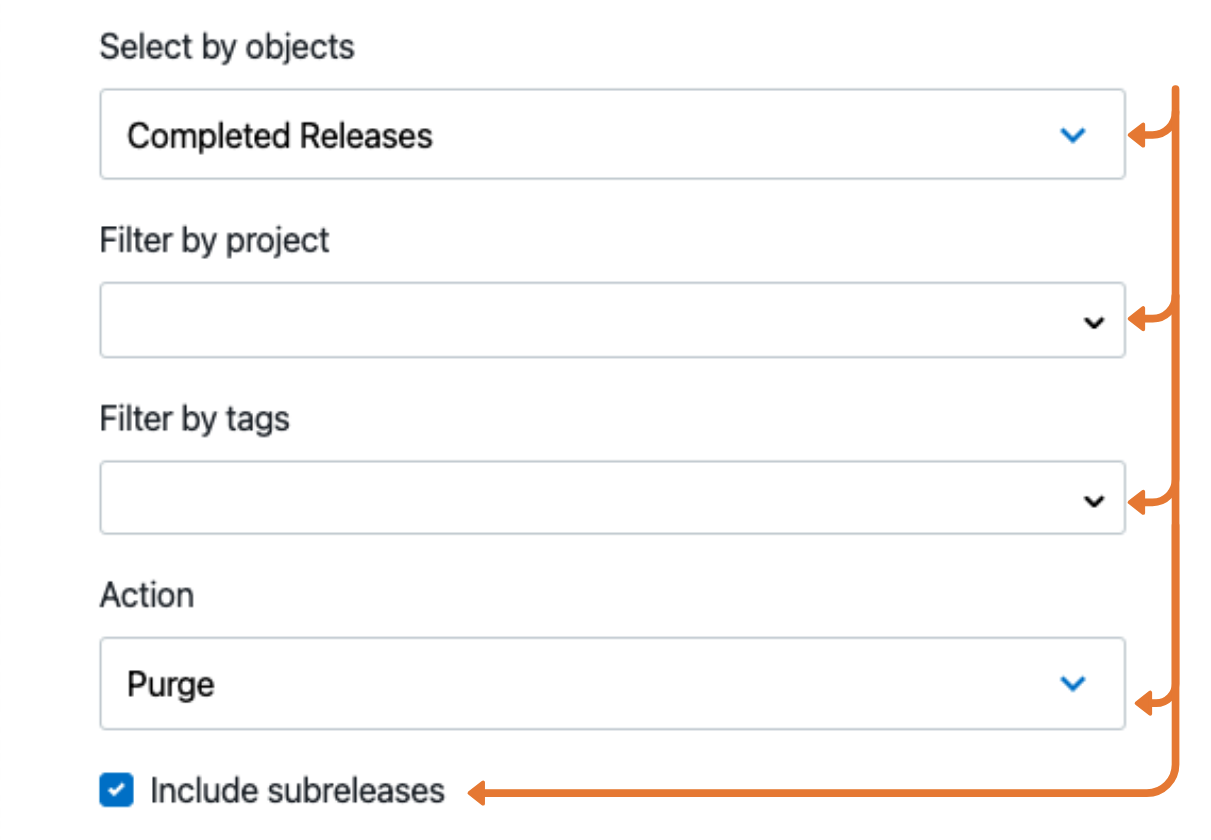

Choose an object by type and status:

-

Completed Releases: Include sub-releases.

-

Completed Pipeline runs: Successful, Error, Warning, Aborted.

-

Completed Deployments: Successful, Error, Warning, Aborted, Remove deployments in database, Remove associated workspaces.

-

Completed Jobs: Successful, Error, Warning, Aborted, Remove jobs in database, Remove associated workspaces.

-

Completed Builds: Successful, Failed, Unstable, Aborted, Not built.

-

-

Filter by project.

-

Filter by tags.

-

Select the action to take when an object is no longer within the retention policy: Purge, Archive, Purge after archive.

When using Purge after archive, you may need to run the policy twice. Purge after archive only purges objects once the duration configured in the Number of minutes after which archived data may be purged server setting has elapsed (refer to Setting up data retention).

The first run archives the objects, but if they have not met the duration configured in Number of minutes after which archived data may be purged, they are not purged immediately. To purge these archived objects, run the schedule again after the objects have been archived for the duration configured.

If you want to purge objects immediately after archiving, configure Number of minutes after which archived data may be purged to 0.

-

Indicate whether to include subreleases.
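The two-run behavior of Purge after archive described above can be sketched as a simple time comparison. This is an illustration under the assumption that eligibility depends only on how long an object has been archived; the function name purge_eligible and the timestamps are hypothetical.

```shell
#!/bin/sh
# purge_eligible ARCHIVED_EPOCH NOW_EPOCH DELAY_MINUTES
# Succeeds (exit status 0) when the object has been archived for at least
# DELAY_MINUTES, the "Number of minutes after which archived data may be
# purged" setting.
purge_eligible() {
  archived_at=$1
  now=$2
  delay_minutes=$3
  [ $(( now - archived_at )) -ge $(( delay_minutes * 60 )) ]
}

# Run 1: the object is archived at t=0 and checked in the same run (30-minute delay).
purge_eligible 0 0 30 && echo "run 1: purge" || echo "run 1: keep (archived only)"
# Run 2: one hour later, the delay has elapsed.
purge_eligible 0 3600 30 && echo "run 2: purge" || echo "run 2: keep"
# With the delay set to 0, the object is eligible immediately after archiving.
purge_eligible 0 0 0 && echo "delay 0: purge"
```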

Data retention policy - Schedule and More criteria

Select the link below to use the schedules feature to modify all the schedules linked to the data retention policy.

Use the information detailed below to edit the data retention policy schedule and retention object criteria.

| The save, cancel, and preview features are located at the bottom of the screen. |

-

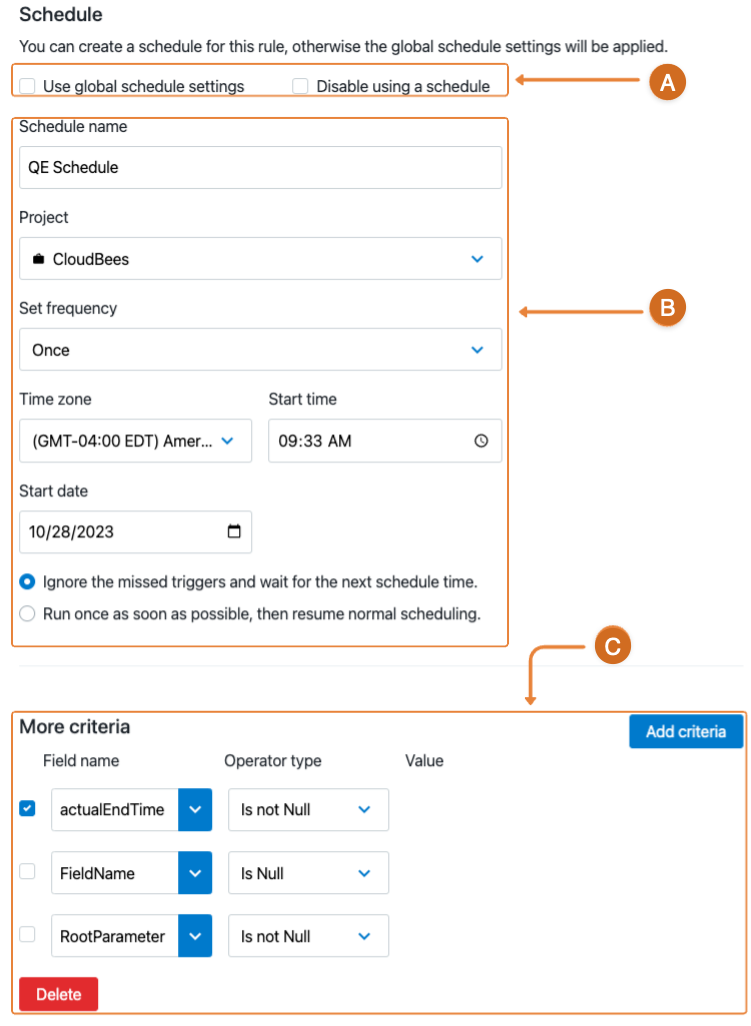

Choose one of the retention policy schedule options:

-

Select the Use global schedule checkbox to use the default schedule.

-

Select the Disable using a schedule checkbox to turn off scheduling.

-

-

Define schedule attributes.

-

Enter a unique schedule identifier into the Schedule name field.

-

Select a project to link the schedule from the Project menu options.

-

Choose the policy run interval by selecting the Set frequency conditional options:

-

Once

-

Every Day

-

Days of the Week

-

Days of the Month

-

-

Complete the Time zone, Start time and Start date fields.

-

Apply the scheduling policy that defines how a schedule resumes in case the scheduled time is interrupted.

-

Select Ignore the missed triggers and wait for the next schedule time to run the job at the next scheduled time.

-

Select Run once as soon as possible, then resume normal scheduling to run the job at the soonest time that occurs within an active region. For example, at server startup a scheduled job will not trigger immediately on startup.

-

-

-

Configure the field name or root parameter object criteria to include additional field names or root parameter objects.

-

Select Add criteria.

-

Add the Field name criteria by either:

-

Entering the name of the field or root parameter.

-

Selecting the name from the menu.

-

Select an Operator type option.

-

Enter applicable Value field text.

-

Modify existing Field name, Operator type, or Value field entries.

-

-

Remove criteria objects:

-

Select the Field name checkbox.

-

Select Delete.

-

-

Via API

You can create and manage data retention rules using the API commands through the ectool command-line interface or through a DSL script. For complete details about the API, refer to CloudBees CD/RO APIs.

Managing archive connectors

To support different archival systems, the data retention framework provides an extension mechanism for registering archive connectors, each configured for a particular storage system.

CloudBees CD/RO includes two predefined sample archive connectors located in <installationDir>/src/samples/data_archive/archiveConnectors.dsl, which are disabled by default. Once a connector is enabled, use it as a starting point for developing your custom connector. The two sample connectors are:

-

File Archive Connector: Configures a directory to use as the archive target.

-

CloudBees CD/RO DevOps Insight Server Connector: Configures archiving to a report object.

Only one archive connector can be enabled in the system at a time. Any data retention policies within the system that include an archive action will exclusively utilize the currently enabled connector.

Via UI

There is no UI support for managing archive connectors. However, this page explains the command-line instructions and provides examples for using the predefined archive connectors with the API.

Via API

Create and manage archive connectors using the API commands through the ectool command-line interface or a DSL script.

CloudBees CD/RO provides DSL for two archive connectors out of the box. Use one of these as a starting point to customize based on your requirements.

-

When you are ready to implement your connector, save the DSL script to a file (MyArchiveConnector.dsl is used below) and run the following on the command line:

    ectool evalDsl --dslFile MyArchiveConnector.dsl

-

To create your own archive connector, and add it to the system:

    ectool createArchiveConnector <archiveConnectorName>

-

To view your available archive connector:

    ectool getArchiveConnector <archiveConnectorName>

File archive connector

This connector writes data to an absolute archive directory in your file system. Use the connector out of the box, or customize its logic, for example, to store data in subdirectories by month or year.

If you customize the logic, update the example DSL and apply it to the CloudBees CD/RO server by using the following command, where fileConnector.dsl is the name of your customized DSL script:

ectool evalDsl --dslFile fileConnector.dsl
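As an illustration of the "subdirectories by month or year" customization, the sketch below computes the file path such a layout could produce. The connector logic itself is a Groovy script stored in the connector definition; this shell function only mirrors the naming scheme for planning purposes, and both its name and the year/month layout are hypothetical.

```shell
#!/bin/sh
# archive_path ARCHIVE_DIR OBJECT_TYPE ENTITY_UUID DATA_FORMAT YEAR_MONTH
# YEAR_MONTH is given as YYYY-MM. Prints the target path in the form
# <dir>/<type>/<year>/<month>/<type>-<uuid>.<format in lower case>
archive_path() {
  year=${5%-*}     # text before the dash, for example 2023
  month=${5#*-}    # text after the dash, for example 08
  extension=$(printf '%s' "$4" | tr '[:upper:]' '[:lower:]')
  printf '%s/%s/%s/%s/%s-%s.%s\n' "$1" "$2" "$year" "$month" "$2" "$3" "$extension"
}

archive_path /tmp/archive job 82dea183-4581-11ee-b015-0800275bae6d XML 2023-08
# prints /tmp/archive/job/2023/08/job-82dea183-4581-11ee-b015-0800275bae6d.xml
```

Partitioning by date keeps individual directories small and makes it easier to apply file system level retention to whole months or years.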

File Archive Connector use case example

In this File Archive Connector example, the jobs are archived into the /tmp/archive folder in the file system, specified using the archiveDirectory parameter. The following criteria are used for the configuration:

-

Archive completed successful jobs from the 'Test project 1' project.

-

Select jobs older than three days.

-

Archive the jobs into the /tmp/archive folder in the file system.

-

Format all jobs in XML.

-

Do not use a custom schedule for this data retention policy.

Because the predefined archive connectors are disabled by default, you must first configure the File Archive Connector with basic functionality, then enable it, as follows:

-

Set the job to be in XML data format.

-

Specify /tmp/archive as the target folder.

-

Enable the archive connector.

Continue with the steps below to configure the File Archive Connector for this example.

-

Run the command to specify the requirements:

    ectool modifyArchiveConnector "File Archive Connector" \
      --archiveDataFormat XML \
      --enabled 1 \
      --actualParameter archiveDirectory="/tmp/archive"

-

Review the response; it should be similar to:

    <response requestId="1" nodeId="192.168.56.4">
      <archiveConnector>
        <archiveConnectorId>098b6165-41d6-11ee-b425-0800275bae6d</archiveConnectorId>
        <archiveConnectorName>File Archive Connector</archiveConnectorName>
        <archiveDataFormat>XML</archiveDataFormat>
        <archiveScript>
    def dir = new File(args.parameters.archiveDirectory, args.archiveObjectType)
    dir.mkdirs()
    File file = new File(dir, "${args.archiveObjectType}-${args.entityUUID}.${args.archiveDataFormat.toLowerCase()}")
    // Connectors can choose to handle duplicates if they need to.
    // This connector implementation will not process a record if the
    // corresponding file already exists.
    if (file.exists()) {
      return false
    } else {
      file << args.serializedEntity
      return true
    }</archiveScript>
        <createTime>2023-08-23T16:56:43.325Z</createTime>
        <enabled>1</enabled>
        <lastModifiedBy>admin</lastModifiedBy>
        <modifyTime>2023-08-28T08:37:16.973Z</modifyTime>
        <owner>admin</owner>
        <propertySheetId>098b8877-41d6-11ee-b425-0800275bae6d</propertySheetId>
        <parameterDetails>
          <parameterDetail>
            <parameterName>archiveDirectory</parameterName>
            <parameterValue>/tmp/archive</parameterValue>
          </parameterDetail>
        </parameterDetails>
      </archiveConnector>
    </response>

-

Set up the data retention policy. The system may have several different data retention policies with different settings/filters.

Based on the requirements, the data retention policy should specify:

-

Archive action.

-

3 days age.

-

Job object type.

-

Filters such as project name 'Test project 1' and job status 'successful'.

-

Data retention policy enabled.

-

Applicable default settings for the global schedule used by all data retention policies.

-

-

Run the ectool command to create the data retention policy using the requirements described above:

    ectool createDataRetentionPolicy "Data retention policy 1" \
      --action archiveOnly \
      --age 3 \
      --ageUnit days \
      --description "Archive all successfully completed jobs in the 'Test project 1' project older than 3 days into the /tmp/archive folder in the file system in XML format with help of predefined 'File Archive Connector' archive connector. Use global/general schedule for this data retention policy." \
      --enabled 1 \
      --objectType job \
      --projectNames "Test project 1" \
      --statuses success

-

Review the response; it should be similar to:

    <response requestId="1" nodeId="192.168.56.4">
      <dataRetentionPolicy>
        <dataRetentionPolicyId>7bb8c0bd-4580-11ee-b2fb-0800275bae6d</dataRetentionPolicyId>
        <dataRetentionPolicyName>Data retention policy 1</dataRetentionPolicyName>
        <action>archiveOnly</action>
        <age>3</age>
        <ageUnit>days</ageUnit>
        <cleanupDatabase>0</cleanupDatabase>
        <createTime>2023-08-28T08:54:22.881Z</createTime>
        <description>Archive all successfully completed jobs in the 'Test project 1' project older than 3 days into the /tmp/archive folder in the file system in XML format with help of predefined 'File Archive Connector' archive connector. Use global/general schedule for this data retention policy.</description>
        <enabled>1</enabled>
        <lastModifiedBy>admin</lastModifiedBy>
        <modifyTime>2023-08-28T08:54:22.881Z</modifyTime>
        <objectType>job</objectType>
        <owner>admin</owner>
        <propertySheetId>7bb8c0bf-4580-11ee-b2fb-0800275bae6d</propertySheetId>
        <projectNames>
          <projectName>Test project 1</projectName>
        </projectNames>
        <statuses>
          <status>success</status>
        </statuses>
      </dataRetentionPolicy>
    </response>

-

Wait for the data retention service to run, then verify that all jobs are archived as expected. The contents of the archive directory should be similar to:

    tree /tmp/archive/
    /tmp/archive/
    └── job
        ├── job-82dea183-4581-11ee-b015-0800275bae6d.xml
        ├── job-83c9c464-4581-11ee-b2fb-0800275bae6d.xml
        ├── job-851704f2-4581-11ee-b2fb-0800275bae6d.xml
        ├── job-85b281f8-4581-11ee-8141-0800275bae6d.xml
        ├── job-86303d30-4581-11ee-b2fb-0800275bae6d.xml
        ├── job-88634a26-4581-11ee-8141-0800275bae6d.xml
        ├── job-89554b54-4581-11ee-8141-0800275bae6d.xml
        ├── job-8ab1ce11-4581-11ee-b015-0800275bae6d.xml
        ├── job-9637f082-4581-11ee-8141-0800275bae6d.xml
        ├── job-973e8b0f-4581-11ee-b015-0800275bae6d.xml
        ├── job-97e6b18e-4581-11ee-b2fb-0800275bae6d.xml
        ├── job-98aa9ddc-4581-11ee-b2fb-0800275bae6d.xml
        ├── job-99550eba-4581-11ee-b2fb-0800275bae6d.xml
        ├── job-9a21fbfd-4581-11ee-b015-0800275bae6d.xml
        ├── job-9af99740-4581-11ee-9231-0800275bae6d.xml
        └── job-9c042930-4581-11ee-8a83-0800275bae6d.xml

    1 directory, 16 files

In the /tmp/archive target folder above, a subfolder job is created for the archived object type, containing the archived jobs in the XML format.
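If the tree utility is not installed, or you want a number to compare against the job count in CloudBees CD/RO, a small helper based on find can count the archived files. This is a sketch that assumes the file naming used by the predefined File Archive Connector (object type, dash, UUID, lower-case format extension); the function name count_archived is hypothetical.

```shell
#!/bin/sh
# count_archived ARCHIVE_DIR OBJECT_TYPE EXTENSION
# Prints the number of archive files for one object type,
# or 0 when the subfolder does not exist yet.
count_archived() {
  find "$1/$2" -type f -name "$2-*.$3" 2>/dev/null | wc -l | tr -d ' '
}

# Count the XML job archives written by the example policy.
count_archived /tmp/archive job xml
```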

The CloudBees CD/RO DevOps Insight Server Connector

This connector configures archiving to the CloudBees CD/RO DevOps Insight Server (DOIS), also called the Analytics server.

If you customize this connector’s logic, you must update the example DSL and apply it to the CloudBees CD/RO server using the following command, where doisConnector.dsl is the name of your customized DSL script:

ectool evalDsl --dslFile doisConnector.dsl

DevOps Insight Server Connector use case example

In this DevOps Insight Server Connector example, the following criteria are used for the configuration:

-

Archive jobs from the 'Test project 2' project.

-

Select jobs older than 15 minutes.

-

Archive the jobs into the Analytics server (DOIS).

-

The jobs must be in JSON format, as shown in the predefined DevOps Insight Server Connector archive connector.

-

Use a custom schedule for this data retention policy.

Configure and enable the DevOps Insight Server Connector.

-

Create the DOIS component.

The connector stores all data in the DOIS component via explicitly-created ElasticSearch indexes.

Run the command to create the special ElasticSearch indexes:

    ectool evalDsl """
    reportObjectType 'archived-release', displayName: 'Archived Release'
    reportObjectType 'archived-job', displayName: 'Archived Job'
    reportObjectType 'archived-deployment', displayName: 'Archived Deployment'
    reportObjectType 'archived-pipelinerun', displayName: 'Archived Pipeline Run'
    """

-

Review the response; it should be similar to:

    <response requestId="1" nodeId="192.168.56.4">
      <reportObjectType>
        <reportObjectTypeId>5eca97bf-458b-11ee-a626-0800275bae6d</reportObjectTypeId>
        <reportObjectTypeName>archived-pipelinerun</reportObjectTypeName>
        <createTime>2023-08-28T10:12:18.064Z</createTime>
        <defaultUri>ef-archived-pipelinerun-*/_search?pretty</defaultUri>
        <devOpsInsightDataSourceCount>0</devOpsInsightDataSourceCount>
        <displayName>Archived Pipeline Run</displayName>
        <lastModifiedBy>admin</lastModifiedBy>
        <modifyTime>2023-08-28T10:12:18.064Z</modifyTime>
        <owner>admin</owner>
        <propertySheetId>5ecabed1-458b-11ee-a626-0800275bae6d</propertySheetId>
        <reportObjectAssociationCount>0</reportObjectAssociationCount>
        <reportObjectAttributeCount>0</reportObjectAttributeCount>
      </reportObjectType>
    </response>

-

Configure and enable the DevOps Insight Server Connector.

Because the predefined archive connectors are disabled by default, you must first configure the DevOps Insight Server Connector with basic functionality, then enable it, as described in the following steps.

Only one archive connector may be enabled in the system at a given time; all data retention policies with an archive action in the system will use it.

-

Run the ectool command to specify JSON data format and enable the archive connector:

    ectool modifyArchiveConnector "DevOps Insight Server Connector" \
      --archiveDataFormat JSON \
      --enabled 1

-

Check the response; it should be similar to:

    <response requestId="1" nodeId="192.168.56.4">
      <archiveConnector>
        <archiveConnectorId>099832ae-41d6-11ee-b425-0800275bae6d</archiveConnectorId>
        <archiveConnectorName>DevOps Insight Server Connector</archiveConnectorName>
        <archiveDataFormat>JSON</archiveDataFormat>
        <archiveScript>
    def reportObjectName = "archived-${args.archiveObjectType.toLowerCase()}"
    def payload = args.serializedEntity
    // If de-duplication should be done, then add documentId to the payload
    // args.entityUUID -> documentId. This connector implementation does not
    // do de-duplication. Documents in DOIS may be resolved upon retrieval
    // based on archival date or other custom logic.
    sendReportingData reportObjectTypeName: reportObjectName, payload: payload
    return true
        </archiveScript>
        <createTime>2023-08-23T16:56:43.325Z</createTime>
        <enabled>1</enabled>
        <lastModifiedBy>admin</lastModifiedBy>
        <modifyTime>2023-08-28T10:15:43.172Z</modifyTime>
        <owner>admin</owner>
        <propertySheetId>099832b0-41d6-11ee-b425-0800275bae6d</propertySheetId>
      </archiveConnector>
    </response>

-

Set up the data retention policy.

Be aware that the system may have a number of different data retention policies with different configurations present.

Based on the requirements in the data retention policy, specify the following:

-

Archive action

-

15-minute age

-

Job object type

-

Filter by project name 'Test project 2' (no status filter, so jobs in every completed status are archived)

-

Enable data retention policy

-

-

Run the ectool command to specify the requirements:

    ectool createDataRetentionPolicy "Data retention policy 2" \
      --action archiveOnly \
      --age 15 \
      --ageUnit minutes \
      --description "Archive all jobs in the 'Test project 2' project older than 15 minutes into the Analytics server (DOIS) in JSON format with help of predefined 'DevOps Insight Server Connector' archive connector. Use a custom schedule for this data retention policy." \
      --enabled 1 \
      --objectType job \
      --projectNames "Test project 2"

-

Review the response; it should be similar to:

    <response requestId="1" nodeId="192.168.56.4">
      <dataRetentionPolicy>
        <dataRetentionPolicyId>de33f934-458c-11ee-b1f0-0800275bae6d</dataRetentionPolicyId>
        <dataRetentionPolicyName>Data retention policy 2</dataRetentionPolicyName>
        <action>archiveOnly</action>
        <age>15</age>
        <ageUnit>minutes</ageUnit>
        <cleanupDatabase>0</cleanupDatabase>
        <createTime>2023-08-28T10:23:02.012Z</createTime>
        <description>Archive all jobs in the 'Test project 2' project older than 15 minutes into the Analytics server (DOIS) in JSON format with help of predefined 'DevOps Insight Server Connector' archive connector. Use a custom schedule for this data retention policy.</description>
        <enabled>1</enabled>
        <lastModifiedBy>admin</lastModifiedBy>
        <modifyTime>2023-08-28T10:23:02.012Z</modifyTime>
        <objectType>job</objectType>
        <owner>admin</owner>
        <propertySheetId>de33f936-458c-11ee-b1f0-0800275bae6d</propertySheetId>
        <projectNames>
          <projectName>Test project 2</projectName>
        </projectNames>
        <statuses>
          <status>success</status>
          <status>aborted</status>
          <status>warning</status>
          <status>error</status>
        </statuses>
      </dataRetentionPolicy>
    </response>

-

Set a custom schedule; this is necessary because of the expected high frequency (try to archive existing jobs at least once per 15 minutes).

Run the ectool command to create a custom schedule for this particular data retention policy in the Default project:

    ectool createSchedule Default 'Data retention policy 2 schedule' \
      --dataRetentionPolicyName 'Data retention policy 2' \
      --interval 15 \
      --intervalUnits minutes

-

Review the response; it should be similar to:

    <response requestId="1" nodeId="192.168.56.4">
      <schedule>
        <scheduleId>3f527d62-458d-11ee-8c8f-0800275bae6d</scheduleId>
        <scheduleName>Data retention policy 2 schedule</scheduleName>
        <createTime>2023-08-28T10:25:44.947Z</createTime>
        <dataRetentionPolicyName>Data retention policy 2</dataRetentionPolicyName>
        <interval>15</interval>
        <intervalUnits>minutes</intervalUnits>
        <lastModifiedBy>admin</lastModifiedBy>
        <misfirePolicy>ignore</misfirePolicy>
        <modifyTime>2023-08-28T10:25:44.947Z</modifyTime>
        <owner>admin</owner>
        <priority>normal</priority>
        <projectName>Default</projectName>
        <propertySheetId>3f52a474-458d-11ee-8c8f-0800275bae6d</propertySheetId>
        <scheduleDisabled>0</scheduleDisabled>
        <subproject>Default</subproject>
        <timeZone>Europe/Berlin</timeZone>
        <tracked>1</tracked>
        <triggerEnabled>0</triggerEnabled>
      </schedule>
    </response>

-

Check to ensure that jobs are archived as expected.

The command below checks to see if jobs are being archived as expected. It only works if you have installed CloudBees Analytics without security. If CloudBees Analytics is secure on your system, check the server logs, or log into the CloudBees Analytics user interface as reportuser, and go to the following URL to view the data: https://localhost:9201/ef-archived-job*/_search.

Also, the curl command below specifies the server host's name (ubuntu1); replace this value in the command with the name of your server host.

-

Run the command to check that the jobs are being archived:

IPv6 addresses are only supported for Kubernetes platforms. If using an IPv6 address, enclose the address in square brackets. Example: [<IPv6-ADDRESS>].

    curl http://ubuntu1:9201/_cat/indices?v

-

Review the response; it should be similar to:

    health status index                uuid                   pri rep docs.count docs.deleted store.size pri.store.size
    yellow open   ef-archived-job-2023 ilgvht5NR1-WTivVNhChUA   2   1          5            0     42.2kb         42.2kb
    yellow open   ef-job-2023          0bIvsUeqSMWkR04pC2Zqdg   2   1          5            0     50.9kb         50.9kb

As you can see in the OpenSearch output, at least two indexes are created: ef-job-2023 for regular jobs and ef-archived-job-2023 for archived jobs.
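When many indexes exist, it can help to filter the listing down to the archive indexes and their document counts. The following is a minimal sketch that assumes the column order of the response shown above (index name in column 3, docs.count in column 7); the function name list_archive_indexes is hypothetical.

```shell
#!/bin/sh
# list_archive_indexes: reads `_cat/indices?v` output on stdin and prints
# "<index> <docs.count>" for every index whose name starts with ef-archived-.
list_archive_indexes() {
  awk 'NR > 1 && $3 ~ /^ef-archived-/ { print $3, $7 }'
}

# Sample input copied from the response shown above.
list_archive_indexes <<'EOF'
health status index                uuid                   pri rep docs.count docs.deleted store.size pri.store.size
yellow open   ef-archived-job-2023 ilgvht5NR1-WTivVNhChUA   2   1          5            0     42.2kb         42.2kb
yellow open   ef-job-2023          0bIvsUeqSMWkR04pC2Zqdg   2   1          5            0     50.9kb         50.9kb
EOF
# prints: ef-archived-job-2023 5
```

Against a live, unsecured CloudBees Analytics server, the output of the curl command above can be piped into the same function.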

If no indexes are displayed, there is a general communication issue between CloudBees CD/RO and CloudBees Analytics.

To troubleshoot:

-

Check your CloudBees CD/RO and CloudBees Analytics logs for any warnings or errors.

-

Check your CloudBees CD/RO license.

-

Try sending report data manually with the help of the API:

    ectool sendReportingData --help
    Usage: sendReportingData <reportObjectTypeName> <payload> [--validate <0|1|true|false>]

For complete details about the API, refer to CloudBees CD/RO APIs.