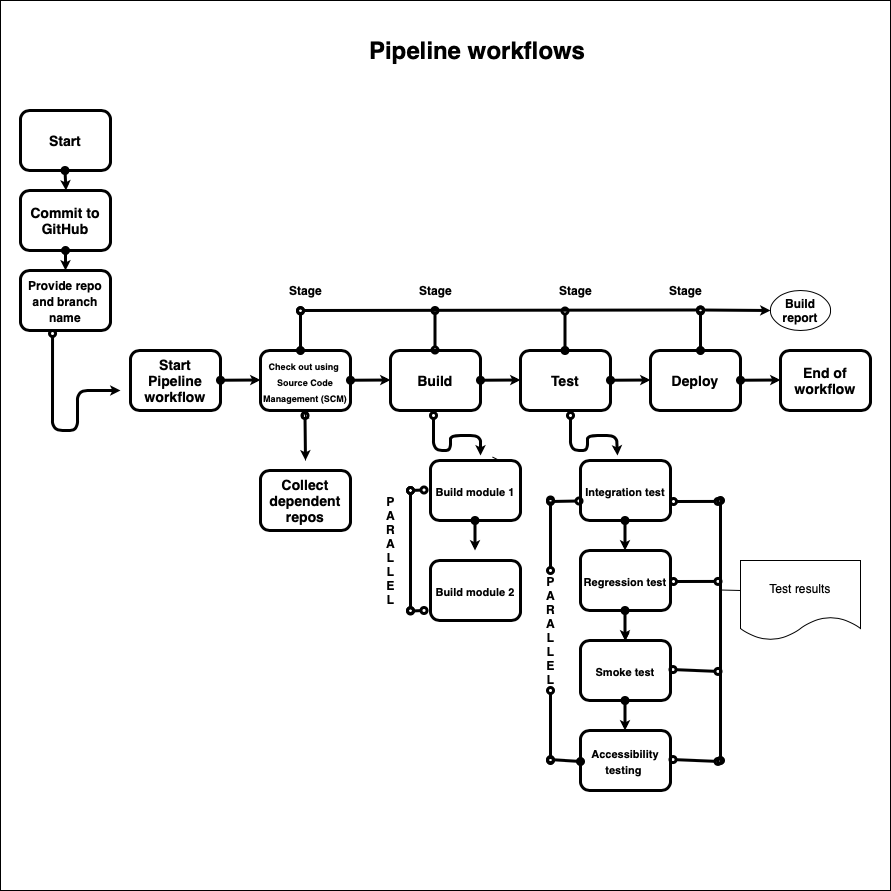

Pipelines are sets of automated processes that compile, build, and deploy code into a production environment. With Pipelines, you can:

- Manage automated builds, tests, and deployments as one workflow.
- Deliver quality products frequently and consistently, moving automatically from testing through staging to production.
- Automatically promote build artifacts or prevent them from being deployed. If errors are discovered at any point during the process, the Pipeline stops and alerts are sent to the appropriate team for their attention.

To ensure that software quality is not compromised for speed, appropriate checks and balances are built into Pipeline processes. Pipelines can be configured to trigger on commits to the code, which enables frequent releases and consistent behavior.
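The behavior described above (stages run in order, the Pipeline stops at the first error, and an alert goes to the responsible team) can be illustrated with a minimal Python sketch. This is not the API of any particular Pipelines product: `Stage`, `PipelineResult`, `run_pipeline`, `on_commit`, and the `alert` callback are all hypothetical names chosen for illustration, and a real system would add logging, retries, and artifact handling.

```python
from dataclasses import dataclass, field
from typing import Callable, List, Optional


@dataclass
class Stage:
    """One step of the workflow, e.g. build, test, or deploy (hypothetical type)."""
    name: str
    run: Callable[[], bool]  # returns True on success, False on failure


@dataclass
class PipelineResult:
    succeeded: bool
    completed: List[str] = field(default_factory=list)
    failed_stage: Optional[str] = None


def run_pipeline(stages: List[Stage], alert: Callable[[str], None]) -> PipelineResult:
    """Run stages in order; stop at the first failure and send an alert."""
    completed: List[str] = []
    for stage in stages:
        try:
            ok = stage.run()
        except Exception:
            # An unhandled error in a stage counts as a failure.
            ok = False
        if not ok:
            alert(f"Pipeline stopped: stage '{stage.name}' failed")
            # Later stages (such as deploy) are never reached.
            return PipelineResult(False, completed, stage.name)
        completed.append(stage.name)
    return PipelineResult(True, completed)


def on_commit(commit_id: str, stages: List[Stage],
              alert: Callable[[str], None]) -> PipelineResult:
    """Hypothetical commit hook: every commit triggers the same workflow."""
    return run_pipeline(stages, alert)
```

In this sketch, a failing test stage means the deploy stage is skipped, which is how a build artifact is prevented from reaching production. Running the same ordered list of stages on every commit is one way to get the consistent behavior described above.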