CloudBees Cache Step Plugin provides writeCache and readCache Pipeline steps to use separate storage as caches for workspaces. This is useful for builds running on cloud agents that start with empty caches of build tools, or when builds involve temporary files that take much longer to generate than they take to download.

CloudBees workspace caching has two implementations:

- CloudBees S3 Cache Plugin: This plugin provides a cache implementation for these steps based on AWS S3 (S3).
- CloudBees Google Cloud Storage Cache Plugin: This plugin provides a caching implementation based on Google Cloud Storage.

Enable workspace caching

Workspace caching is disabled by default. As an administrator, you can enable this setting on the System configuration page.

To enable workspace caching:

- Use the icon in the upper-right corner to navigate to the Manage Jenkins page.
- Select System, navigate to Workspace Caching, and then select either S3 Cache or GCS Cache from the Cache Manager list.

S3 configuration

The S3 cache uses the configuration of the Artifact Manager on S3 plugin. Refer to Cloud-ready Artifact Manager for AWS for more information.

S3 configuration permissions

For the CloudBees S3 Cache plugin specifically, the minimum required permissions are s3:PutObject, s3:GetObject, s3:DeleteObject, and s3:ListBucket. For more information on configuring S3, refer to the Artifact Manager on S3 plugin.

The following examples are minimal IAM policies providing the required permissions, with and without a Base Prefix configured for the S3 bucket:

Without base prefix

    {
      "Version": "2012-10-17",
      "Statement": [
        {
          "Effect": "Allow",
          "Action": "s3:ListBucket",
          "Resource": "arn:aws:s3:::BUCKET_NAME_HERE"
        },
        {
          "Effect": "Allow",
          "Action": "s3:GetBucketLocation",
          "Resource": "arn:aws:s3:::BUCKET_NAME_HERE"
        },
        {
          "Effect": "Allow",
          "Action": [
            "s3:PutObject",
            "s3:GetObject",
            "s3:DeleteObject"
          ],
          "Resource": "arn:aws:s3:::BUCKET_NAME_HERE/*"
        }
      ]
    }

With base prefix

    {
      "Version": "2012-10-17",
      "Statement": [
        {
          "Effect": "Allow",
          "Action": "s3:ListBucket",
          "Resource": "arn:aws:s3:::BUCKET_NAME_HERE",
          "Condition": {
            "StringLike": {
              "s3:prefix": "BASE_PREFIX_HERE/*"
            }
          }
        },
        {
          "Effect": "Allow",
          "Action": "s3:GetBucketLocation",
          "Resource": "arn:aws:s3:::BUCKET_NAME_HERE"
        },
        {
          "Effect": "Allow",
          "Action": [
            "s3:PutObject",
            "s3:GetObject",
            "s3:DeleteObject"
          ],
          "Resource": "arn:aws:s3:::BUCKET_NAME_HERE/BASE_PREFIX_HERE/*"
        }
      ]
    }
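If you manage several buckets or prefixes, it can help to generate these policies rather than edit them by hand. The following Python sketch rebuilds the two policies from a bucket name and an optional base prefix. The function name build_cache_policy is illustrative and not part of any CloudBees or AWS tooling.

```python
import json
from typing import Optional


def build_cache_policy(bucket: str, base_prefix: Optional[str] = None) -> dict:
    """Return the minimal policy from this section as a dictionary.

    Hypothetical helper: it only mirrors the two example policies and does
    not validate bucket names or prefixes.
    """
    bucket_arn = f"arn:aws:s3:::{bucket}"
    list_statement = {
        "Effect": "Allow",
        "Action": "s3:ListBucket",
        "Resource": bucket_arn,
    }
    if base_prefix:
        prefix = base_prefix.strip("/")
        # Restrict listing to keys under the base prefix.
        list_statement["Condition"] = {"StringLike": {"s3:prefix": f"{prefix}/*"}}
        object_arn = f"{bucket_arn}/{prefix}/*"
    else:
        object_arn = f"{bucket_arn}/*"
    return {
        "Version": "2012-10-17",
        "Statement": [
            list_statement,
            {
                "Effect": "Allow",
                "Action": "s3:GetBucketLocation",
                "Resource": bucket_arn,
            },
            {
                "Effect": "Allow",
                "Action": ["s3:PutObject", "s3:GetObject", "s3:DeleteObject"],
                "Resource": object_arn,
            },
        ],
    }


if __name__ == "__main__":
    print(json.dumps(build_cache_policy("BUCKET_NAME_HERE", "BASE_PREFIX_HERE"), indent=2))
```

Calling build_cache_policy("BUCKET_NAME_HERE") without a prefix produces the first policy; passing a prefix adds the s3:prefix condition and narrows the object resource.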

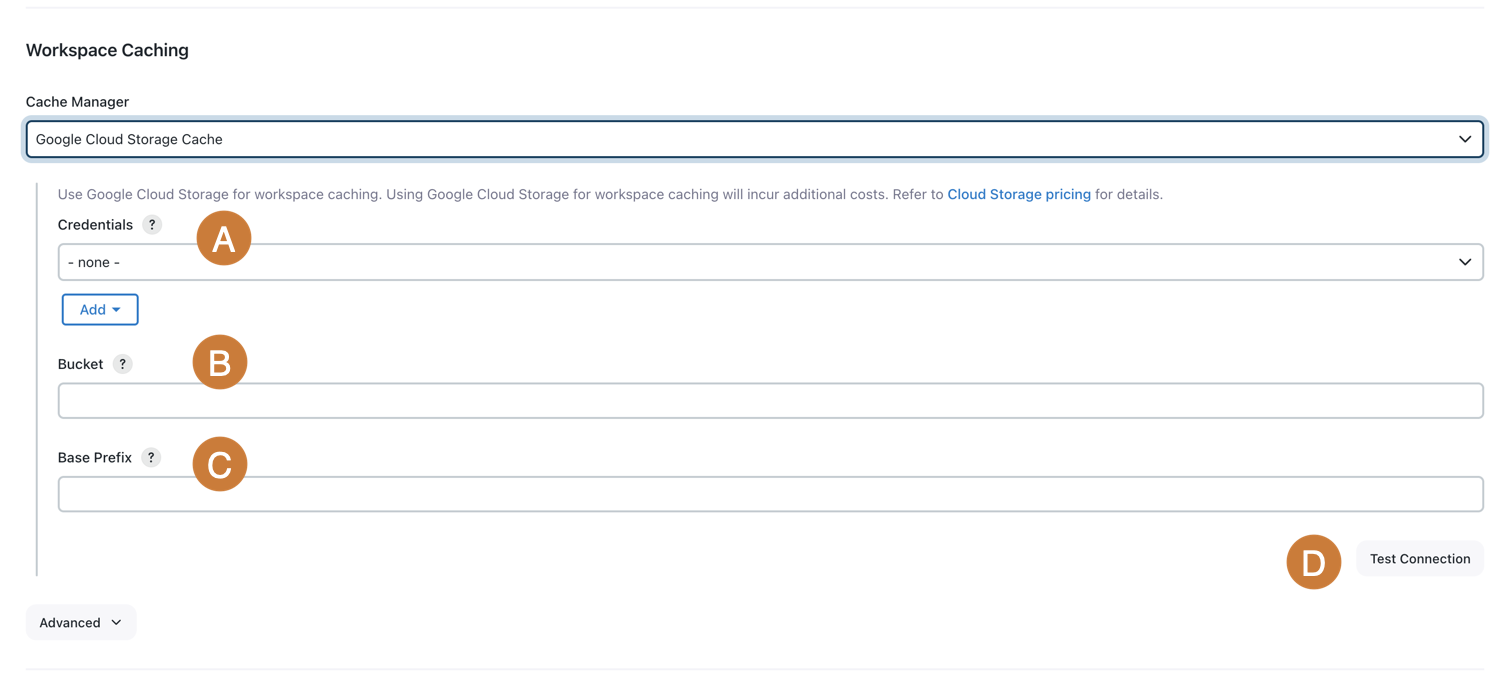

GCS configuration

CloudBees Workspace Caching supports Google Cloud Storage. This implementation allows the cache storage and the CloudBees CI agents to run in the same cloud provider environment and to benefit from good network speed.

Configure the following settings for the Google Cloud Storage Cache:

- Credentials: Select a valid Google Service Account from private key or Google Service Account from metadata to access the Google Cloud Storage bucket. Google credentials can be configured using the Google OAuth Credentials plugin.
- Bucket: Enter the name of the Storage Bucket. The bucket should exist and the specified credentials should have access to it.
- Base Prefix: Enter the string to be used as the prefix for every file path. It must end with a / if it is a folder.
- Select Test Connection to validate your connection.

GCS configuration permissions

The minimum required permissions assigned to a service account specified as credentials in Google Cloud Storage configuration are:

- storage.buckets.get
- storage.objects.create
- storage.objects.get
- storage.multipartUploads.create
- storage.objects.delete
- storage.objects.list
- iam.serviceAccounts.signBlob

The iam.serviceAccounts.signBlob permission is required for the Google Service Account from metadata setting to sign URLs. This permission is assigned to the VM’s service account at the IAM level, and Cloud API scopes should have the Cloud Platform scope enabled.

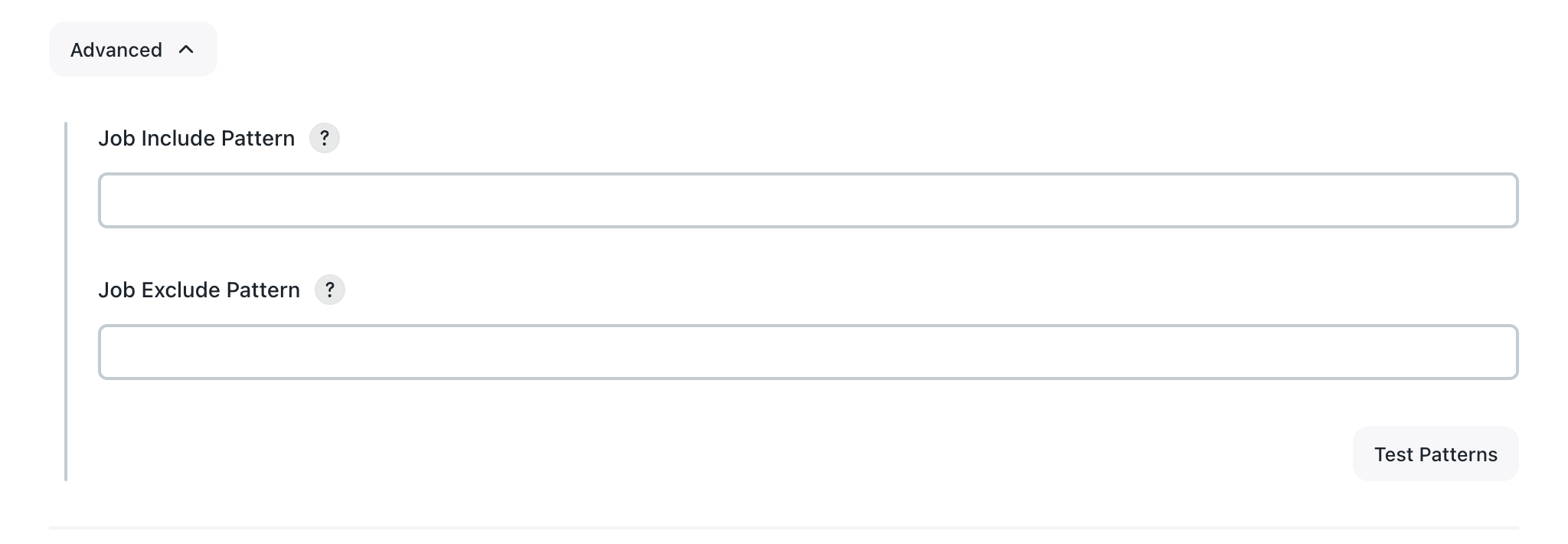

Advanced options configuration

Under Advanced Options you can also configure which jobs are able to use the cache with the Job Include Pattern and Job Exclude Pattern fields.

- The Job Include Pattern allows you to configure specific jobs that are able to use the configured cache using a comma-separated Ant-style pattern. For example:

  - ** matches all jobs.
  - my-project/** matches all jobs in the folder my-project.
  - *e* matches all jobs whose name contains the letter e that are not in another folder.

  The semantics differ slightly from those used for Ant file paths (for example, the patterns used when selecting which files to archive using the archiveArtifacts Pipeline step). For job selection, */** does not include jobs that are not inside a folder. However, for Ant file paths, */** does select files that are not inside a subdirectory. This field is empty by default, which means that all jobs are included.

- The Job Exclude Pattern allows you to exclude an included job. The syntax is the same as the Job Include Pattern. However, an empty value means that nothing is excluded that would otherwise be included (for example, if both fields are empty, the workspace caching feature is enabled for all jobs in the CloudBees CI instance).
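To reason about which jobs a pair of patterns selects, the described behavior can be approximated in a few lines of Python. This is a rough sketch under stated assumptions, not the plugin's matcher: it treats ** as "any characters, including /" and * as "any characters except /", ignores other Ant wildcards such as ?, and the function names are illustrative.

```python
import re


def ant_pattern_to_regex(pattern: str) -> re.Pattern:
    """Translate a simplified Ant-style pattern into a compiled regex."""
    parts = []
    i = 0
    while i < len(pattern):
        if pattern.startswith("**", i):
            parts.append(".*")      # '**' may span folder separators
            i += 2
        elif pattern[i] == "*":
            parts.append("[^/]*")   # '*' stays within one path segment
            i += 1
        else:
            parts.append(re.escape(pattern[i]))
            i += 1
    return re.compile("".join(parts))


def matches_any(full_name: str, patterns: str) -> bool:
    """Check a job's full name against a comma-separated pattern list."""
    return any(
        ant_pattern_to_regex(p.strip()).fullmatch(full_name)
        for p in patterns.split(",")
        if p.strip()
    )


def job_may_use_cache(full_name: str, include: str = "", exclude: str = "") -> bool:
    """Empty include means all jobs; empty exclude means nothing is excluded."""
    included = not include.strip() or matches_any(full_name, include)
    excluded = bool(exclude.strip()) and matches_any(full_name, exclude)
    return included and not excluded
```

For instance, job_may_use_cache("my-project/build", include="my-project/**") is true under these assumptions, while job_may_use_cache("top-level-job", include="*/**") is false because the job is not inside a folder.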

Use pipeline steps

Pipelines generally include matching pairs of readCache (restoring a cache created or updated in a previous build, if it exists) and writeCache (creating or updating a cache) steps, but the same cache can be restored multiple times (for example, on different agents).

While readCache typically appears before writeCache in Pipeline definitions, conceptually, writeCache is the first step to actually execute an action (usually in an earlier build).


writeCache step

The writeCache step takes the following parameters:

- name: A mandatory parameter describing the cache, for example maven-repo. The name is used to identify the cache in builds of the same job.
- includes: A mandatory, comma-separated list of Ant-style expressions that defines the set of files to include in the cache. This works like the artifacts parameter to the archiveArtifacts Pipeline step.
- excludes: An optional, comma-separated list of Ant-style expressions that defines the set of files to exclude from the cache, if they would otherwise be included.

For example:

    writeCache name: 'maven-repo', includes: '.m2/repository/**', excludes: '**SNAPSHOT**'

This traverses the .m2/repository directory (relative to the current working directory), including all files except those whose relative paths contain SNAPSHOT. Symbolic links are stored as-is.

The includes parameter does not support absolute path expressions. To include files outside the current working directory in the cache, use the dir { } step. For example:

    // wrong:
    writeCache name: 'cache', includes: '/tmp/jenkins/**'

    // correct:
    dir('/tmp/jenkins') {
        writeCache name: 'cache', includes: '**'
    }

If you need to include temporary (TMP) directories in the writeCache step, set the com.cloudbees.cache.compatibility.S3CompatibleCacheWriter.INCLUDE_TMP_DIRS system property on the controller if you are using a built-in agent, or set the system property on the agent itself. For more information, refer to Managing agents.

readCache step

The readCache step takes a single parameter:

- name: A mandatory parameter describing the cache, for example maven-repo. The name is used to identify the cache in builds of the same job.

Example:

    readCache name: 'maven-repo'

This step retrieves the specified cache from storage and restores all files it contains into the current working directory. Existing files that are also part of the cache are overwritten. Existing files that are not part of the cache are left unmodified.

If you use writeCache with a different working directory, run readCache from the same (or an equivalent) working directory. Otherwise, the files it creates will not be restored to the correct directory.


Cache contexts

Caches are scoped to the Pipeline that created them. If multiple Pipelines write to the cache with the same name, they are stored in different locations and do not interfere with each other. There is one exception to this rule: builds for pull requests in a multibranch Pipeline will be able to read (but not write) the cache of the job that built the target branch.

Multibranch Pipelines

When multibranch Pipelines build pull requests, if there is no cache for the current job, readCache can use caches created by the branch job that corresponds to the pull request’s target branch (often a branch named main or develop). In this case, the build logs of pull requests will look like the following:

    [Pipeline] readCache
    Checking cache context for My Demo Multibranch Pipeline » PR-1 for cache 'mvn-cache'
    Cache 'mvn-cache' does not exist in cache context for My Demo Multibranch Pipeline » PR-1
    Checking cache context for My Demo Multibranch Pipeline » main for cache 'mvn-cache'
    Found cache, downloading 277,23 MB ...
    Downloaded cache 'mvn-cache' from cache context for My Demo Multibranch Pipeline » main with size 277,23 MB in 11 seconds (25,20 MB/s)
    Extracting cache 'mvn-cache' ...
    Finished extracting cache 'mvn-cache'
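The lookup order visible in this log can be summarized as "own context first, then the target branch's context". The following Python sketch models only that order. The function name, the job names, and the set of (job, cache name) pairs standing in for the cache storage are assumptions made for illustration.

```python
from typing import Optional, Set, Tuple


def resolve_cache_context(
    existing: Set[Tuple[str, str]],
    job: str,
    cache_name: str,
    target_branch_job: Optional[str] = None,
) -> Optional[str]:
    """Return the job whose cache would be read, or None if there is none.

    existing holds (job, cache_name) pairs for which a cache is stored.
    target_branch_job is only set for pull request builds.
    """
    if (job, cache_name) in existing:
        return job
    if target_branch_job is not None and (target_branch_job, cache_name) in existing:
        # Pull request builds may read, but never write, this cache.
        return target_branch_job
    return None


stored = {("demo/main", "mvn-cache")}
print(resolve_cache_context(stored, "demo/PR-1", "mvn-cache", "demo/main"))
```

In this example only the main branch job has a cache, so the pull request build falls back to it, matching the log output.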

Basic Pipeline examples

Java project with basic cache usage

This Pipeline is used to build a Jenkins plugin with Maven. It requires an appropriate JDK to be installed and on the PATH, and for the tool maven-3 to be defined in the global tool configuration. To make the build time reduction more easily visible, this Pipeline uses the Timestamper plugin to annotate the build log with timestamps.

    timestamps {
        node('agent') {
            checkout scmGit(branches: [[name: '*/main']], userRemoteConfigs: [[url: 'https://github.com/jenkinsci/matrix-auth-plugin/']])
            readCache name: 'mvn-cache'
            def mvn = tool name: 'maven-3', type: 'maven'
            sh "${mvn}/bin/mvn clean verify -Dmaven.repo.local=./maven-repo -DskipTests"
            writeCache name: 'mvn-cache', includes: 'maven-repo/**'
        }
    }

JavaScript project with more complex includes pattern

This Pipeline is used to build the Jenkins plugins site plugins.jenkins.io. It requires yarn to be installed and on the PATH.

    timestamps {
        node('agent') {
            git 'https://github.com/jenkins-infra/plugin-site'
            readCache name: 'yarn'
            sh 'yarn config set globalFolder $PWD/yarn-global'
            sh 'yarn config set cacheFolder $PWD/yarn-cache'
            sh 'yarn install'
            sh 'yarn build'
            writeCache name: 'yarn', includes: 'yarn-cache/**,yarn-global/**,**/node_modules/**'
        }
    }

Multiple caches

This is a similar Pipeline building a Maven project, but it also caches JavaScript dependencies. The JavaScript-related directories being cached are interspersed with the source code and also include symbolic links.

    timestamps {
        node {
            checkout scmGit(branches: [[name: '*/master']], userRemoteConfigs: [[url: 'https://github.com/jenkinsci/blueocean-plugin/']])
            readCache name: 'node'
            readCache name: 'maven'
            def mvn = tool name: 'maven-3', type: 'maven'
            sh "${mvn}/bin/mvn clean install -Dmaven.repo.local=./maven-repo"
            writeCache name: 'node', includes: '**/node_modules/**,**/node/**'
            writeCache name: 'maven', includes: 'maven-repo/**'
        }
    }

Multibranch Pipeline

Multibranch Pipelines can be set up the same way as Pipelines building only a single branch. Each branch and pull request job writes to its own caches and does not interfere with the caches of other jobs. Additionally, pull request jobs can read caches that were created by the job corresponding to their target branch.

In addition to speeding up the first build for any given pull request, the Pipeline can be configured to have quicker pull request builds in general by not writing caches at the end of their build. As shown in the sample Pipeline below, builds of pull requests can use the cache of the job corresponding to their target branch, skipping the creation of their own cache.

    timestamps {
        node {
            checkout scm
            readCache name: 'mvn-cache'
            def mvn = tool name: 'maven-3', type: 'maven'
            sh "${mvn}/bin/mvn clean verify -Dmaven.repo.local=./maven-repo -DskipTests"
            if (!env.CHANGE_ID) {
                writeCache name: 'mvn-cache', includes: 'maven-repo/**'
            }
        }
    }

Be sure to adapt the condition for when to invoke writeCache.

Cache integrity

Before extracting a cache, the CloudBees S3 Cache plugin verifies that the content downloaded by readCache is exactly what was previously uploaded. This protects against problems during upload and download as well as against changes to S3 bucket contents.

CloudBees Cache Step plugin maintains a set of known cache content (SHA-256 checksums) for each cache. While the writeCache step uploads a cache, the SHA-256 checksums are computed and associated with a randomly generated UUID identifying a specific version of the cache. When downloading the cached content with readCache, the UUID is retrieved from S3, and the actual checksum of the cached content is computed. This checksum is compared to the previously stored checksum for the same content version. If a mismatch occurs, the cache is not extracted, and the build proceeds as if no cached content had been found.
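The record-then-verify flow described above can be illustrated with a small in-memory model. This is a sketch of the idea only, assuming checksums are kept in a dictionary keyed by version UUID; the class and method names are hypothetical and do not reflect the plugin's internals or its on-disk format.

```python
import hashlib
import uuid
from typing import Dict, Optional


class ChecksumStore:
    """In-memory stand-in for the checksums kept on the controller."""

    def __init__(self) -> None:
        self._checksums: Dict[str, str] = {}

    def record_upload(self, content: bytes) -> str:
        """Store the SHA-256 of uploaded content under a new random UUID."""
        version = str(uuid.uuid4())
        self._checksums[version] = hashlib.sha256(content).hexdigest()
        return version

    def verify_download(self, version: Optional[str], content: bytes) -> bool:
        """Return True only if the content matches the recorded checksum."""
        expected = self._checksums.get(version) if version else None
        if expected is None:
            return False  # unknown or missing version: treat as no cache
        return hashlib.sha256(content).hexdigest() == expected


store = ChecksumStore()
version = store.record_upload(b"cache archive bytes")
print(store.verify_download(version, b"cache archive bytes"))
print(store.verify_download(version, b"modified bytes"))
```

A failed verification here corresponds to the case where the cache is not extracted and the build continues as if no cache had been found.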

Manage S3 buckets

Cache storage

CloudBees S3 Cache plugin creates a directory-like hierarchical structure in S3 to store workspace caches.

A key (that is, the full path to a file) consists of the following components (in order):

- The globally configured, optional prefix for files stored in S3. It can be something like cloudbees/ci-data/ and always ends with a trailing / (slash) character.
- The string cache- followed by the instance ID and a trailing / (slash) character. This ensures that no two CloudBees CI controllers write to the same location in S3, even if they’re configured to use the same bucket. For example: cache-182197b2ef7ea861cc77c5d3eb28cdab/.
- The URL-safe base64-encoded unique ID of the job that created the cache, followed by a trailing / (slash) character. CloudBees Workspace Cache plugin uses the Unique ID plugin to assign and store unique IDs. For example: T0RBMU1EWmtPV0V0WkRjMllpMDBZalV4TFdGak9U/
- The URL-safe base64-encoded name of the cache. If the cache name is maven-cache, then the base64-encoded cache name is: bWF2ZW4tY2FjaGU.

In this example, the full object key for the cache would be:

    cloudbees/ci-data/cache-182197b2ef7ea861cc77c5d3eb28cdab/T0RBMU1EWmtPV0V0WkRjMllpMDBZalV4TFdGak9U/bWF2ZW4tY2FjaGU

The base64 encoding used here is a URL-safe variant that uses the characters - and _ instead of the more common + and / characters. Additionally, there is no padding (= characters) at the end of the string.
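When inspecting a bucket, it can be handy to reproduce the encoding of the last two key components. The following Python sketch assembles a key from its parts using URL-safe base64 without padding. The function names and the job ID value are made up for illustration; the cache name maven-cache is used because it encodes to the bWF2ZW4tY2FjaGU segment seen in the example key.

```python
import base64


def urlsafe_b64_nopad(text: str) -> str:
    """URL-safe base64 ('-' and '_' instead of '+' and '/') without '=' padding."""
    encoded = base64.urlsafe_b64encode(text.encode("utf-8"))
    return encoded.rstrip(b"=").decode("ascii")


def cache_object_key(prefix: str, instance_id: str, job_unique_id: str, cache_name: str) -> str:
    """Join the four key components in the order described in this section."""
    return (
        f"{prefix}"
        f"cache-{instance_id}/"
        f"{urlsafe_b64_nopad(job_unique_id)}/"
        f"{urlsafe_b64_nopad(cache_name)}"
    )


# 'example-job-id' is a placeholder, not a real Unique ID plugin value.
print(cache_object_key(
    "cloudbees/ci-data/",
    "182197b2ef7ea861cc77c5d3eb28cdab",
    "example-job-id",
    "maven-cache",
))
```

To go the other way, restore the padding before decoding, for example with base64.urlsafe_b64decode(segment + "=" * (-len(segment) % 4)).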

Periodic cache cleanup

Periodically, a background task cleans up unused caches in S3 and obsolete cache version checksums stored on the CloudBees CI controller file system.

Unused caches (S3 objects)

Unused caches are those for which any of the following apply:

- The S3 object key cannot be decoded to a job ID and cache name. Refer to Cache storage for more information on the S3 object key structure.
- The job ID does not match any job that exists on the controller (typically because it has been deleted).
- The job ID matches a job that is not currently allowed to use workspace cache steps through the global configuration.
- The S3 object has no attached metadata for the cache version UUID, so the cache would always be rejected. Refer to Cache integrity for more information.
- The S3 object has attached metadata for the cache version UUID, but no corresponding checksum is recorded on the controller, so the cache would always be rejected. Refer to Cache integrity for more information.

These S3 objects are periodically deleted by the CloudBees CI controller.
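The criteria above can be read as a series of checks applied to each S3 object. The following Python sketch expresses them that way. It is a simplified model under assumptions: the CacheObject type, the function name, and the idea of passing in sets of known job IDs and version UUIDs are all hypothetical, and the order of checks in the actual cleanup task may differ.

```python
from dataclasses import dataclass
from typing import Optional, Set


@dataclass
class CacheObject:
    job_id: Optional[str]        # None if the key could not be decoded
    cache_name: Optional[str]    # None if the key could not be decoded
    version_uuid: Optional[str]  # cache version UUID metadata, if present


def unused_reason(
    obj: CacheObject,
    existing_job_ids: Set[str],
    allowed_job_ids: Set[str],
    known_version_uuids: Set[str],
) -> Optional[str]:
    """Return why an object counts as unused, or None if it is a valid cache."""
    if obj.job_id is None or obj.cache_name is None:
        return "key cannot be decoded"
    if obj.job_id not in existing_job_ids:
        return "job does not exist"
    if obj.job_id not in allowed_job_ids:
        return "job is not allowed to use workspace caching"
    if obj.version_uuid is None:
        return "no cache version UUID metadata"
    if obj.version_uuid not in known_version_uuids:
        return "no checksum recorded for this cache version"
    return None


obj = CacheObject(job_id="job-1", cache_name="maven-repo", version_uuid=None)
print(unused_reason(obj, {"job-1"}, {"job-1"}, set()))
```

An object for which the function returns a reason would be a candidate for deletion in this model.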

| Never store your own data in the same S3 bucket with the same prefix that CloudBees CI uses for workspace caches. Any objects stored in S3 with the same prefix that are not recognized as valid workspace caches are deleted. |

This task only deletes objects from S3 if S3 Cache is the currently configured Cache Implementation on the System configuration page.


Obsolete cache integrity checksums

Once the set of valid caches has been identified (refer to Unused caches (S3 objects)) and their UUIDs recorded, cache checksums for all other UUIDs are deleted from the CloudBees CI controller file system if they pre-date the start of the current periodic cache cleanup operation.

Cache metadata

To make management of the bucket easier, caches include additional user-defined metadata that can be accessed by looking at the object properties in the AWS console.

The following user-defined metadata is stored with each cache to make it easier to trace the source of S3 objects:

- x-amz-meta-job-name is the full name of the job that created the cache.
- x-amz-meta-updated-by-build is the build number of the build that last updated the cache.
- x-amz-meta-jenkins-url is the URL to the CloudBees CI controller that created this cache.

| There is no corresponding metadata for the cache name, as the S3 object key already contains the base64-encoded cache name. |

Additionally, x-amz-meta-cache-version-uuid is the UUID of the current version of the cache. This is used to associate checksums stored on the controller with the content of the cache downloaded from S3. Refer to Cache integrity for more information.

All of these values use the MIME encoded-word format as required by S3. Values only containing US-ASCII characters will be stored and displayed as is, while values containing non-US-ASCII characters will be encoded using the Q encoding described in RFC 2047. For example, the string dépendances-maven will be shown as =?UTF-8?Q?d=C3=A9pendances-maven?= in the AWS console.


To decode MIME encoded-words:
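One option is Python's standard email.header module, which understands the RFC 2047 encoded-word syntax. The following is a minimal sketch; the helper name decode_encoded_word is illustrative.

```python
from email.header import decode_header, make_header


def decode_encoded_word(value: str) -> str:
    """Decode an RFC 2047 encoded-word; plain ASCII values pass through unchanged."""
    return str(make_header(decode_header(value)))


print(decode_encoded_word("=?UTF-8?Q?d=C3=A9pendances-maven?="))
```

Applied to the example value from this section, the helper returns the original string dépendances-maven.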


Cost considerations

Use of Amazon S3 and GCS incurs additional costs. Refer to the following for more information:

Access patterns

- writeCache creates a multipart upload on the controller. The agent uploads individual parts in parallel to pre-signed URLs it obtains from the controller, using as many PUT requests as there are chunks of up to 20 MB each to upload. The controller then completes the upload, or aborts it if an error occurred. For example, uploading a 90 MB cache requires two POST requests and five PUT requests.
- readCache creates a pre-signed URL and the agent downloads it using a single GET request.
- A periodic background task cleans up obsolete caches in S3, as well as cache version checksums stored locally on the CloudBees CI controller file system. This task runs every 24 hours and lists all caches/objects in S3 (one GET request per 1000 caches/S3 objects) and then requests the metadata of those that pass an initial validity check (one HEAD request each). For example, if there are 800 jobs with two caches each on the CloudBees CI controller, this task will send 1602 requests to S3 once per day to obtain the metadata for all 1600 caches. Any caches that are identified as no longer needed are individually deleted. For more information, refer to Periodic cache cleanup.
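The request counts in these examples follow from simple arithmetic, which the following Python sketch reproduces for cost estimates. It assumes, as the examples do, a part size of 20 MB, a listing page size of 1000 objects, and that every listed object passes the initial validity check. Delete requests are not counted, and the function names are illustrative.

```python
import math

PART_SIZE_MB = 20      # maximum size of one uploaded part
LIST_PAGE_SIZE = 1000  # objects returned per listing request


def write_cache_requests(cache_size_mb: float) -> dict:
    """Estimate S3 requests for one writeCache upload of the given size."""
    return {
        "POST": 2,  # one to start and one to complete the multipart upload
        "PUT": math.ceil(cache_size_mb / PART_SIZE_MB),
    }


def cleanup_requests(cache_count: int) -> int:
    """Estimate list plus metadata requests for one cleanup run."""
    list_requests = math.ceil(cache_count / LIST_PAGE_SIZE)
    head_requests = cache_count  # assumes all objects pass the validity check
    return list_requests + head_requests


print(write_cache_requests(90))   # {'POST': 2, 'PUT': 5}
print(cleanup_requests(800 * 2))  # 1602
```

Multiplying these counts by your build frequency and your provider's per-request pricing gives a rough upper bound on request costs.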

Unfinished multipart uploads

Some multipart uploads might not be properly completed or aborted (for example, if the CloudBees CI controller process crashes). Multipart uploads that are neither completed nor aborted continue to incur storage costs. The AWS blog post Discovering and Deleting Incomplete Multipart Uploads to Lower Amazon S3 Costs explains how to prevent this.