Setup wizard

When a controller running in High Availability (HA) mode starts for the first time, one of the controller replicas acquires a lock in the shared JENKINS_HOME. This replica is the only one available, and it holds the lock until a user completes the Setup wizard.

After the Setup wizard is completed, the remaining replicas continue the startup process. One by one, and automatically, each remaining replica acquires the lock, starts, and releases the lock, until all replicas are available.

However, if the controller is created using a CasC bundle, the Setup wizard is not displayed and all the replicas automatically follow the same process described above without any human confirmation. One by one, they acquire the lock, start, and release the lock until all of them are up and running.
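The lock handoff described above can be modeled in a few lines. This is a simplified sketch, not CloudBees CI code. A `threading.Lock` stands in for the lock in the shared JENKINS_HOME. The hypothetical `wait_for_wizard` callable blocks until a user finishes the Setup wizard. With a CasC bundle there is no wizard, so a no-op can be passed.

```python
import threading
import time
from typing import Callable, List, Tuple

LockEvent = Tuple[str, str]  # ("acquire" | "release", replica name)


def boot_replica(name: str, lock: threading.Lock, events: List[LockEvent]) -> None:
    """Acquire the shared lock, run startup, then release it for the next replica."""
    with lock:
        events.append(("acquire", name))
        time.sleep(0.01)  # stand-in for the replica's startup work
        events.append(("release", name))


def boot_cluster(replicas: List[str], wait_for_wizard: Callable[[], None]) -> List[LockEvent]:
    """Model of the first start of an HA controller (illustrative only)."""
    lock = threading.Lock()          # stands in for the lock in the shared JENKINS_HOME
    events: List[LockEvent] = []     # appended only while holding `lock`, so it stays ordered
    first, *others = replicas

    # The first replica starts and keeps the lock until the Setup wizard ends.
    with lock:
        events.append(("acquire", first))
        wait_for_wizard()
        events.append(("release", first))

    # The remaining replicas then start one by one, each taking the lock in turn.
    threads = [threading.Thread(target=boot_replica, args=(n, lock, events)) for n in others]
    for t in threads:
        t.start()
    for t in threads:
        t.join()
    return events


if __name__ == "__main__":
    # CasC-style start: no wizard, so the "wait" returns immediately.
    for action, name in boot_cluster(["replica-0", "replica-1", "replica-2"], lambda: None):
        print(action, name)
```

The remaining replicas start in no fixed order. However, every "acquire" is immediately followed by the matching "release", so no two replicas are ever starting at the same time.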

Workload distribution in HA

HA distributes Pipeline builds among the replicas. If a replica fails, another replica adopts its running builds, which continue to run.

Starting with version 2.426.1.2, CloudBees CI provides explicit load balancing for controllers running in HA mode.

Explicit load balancing redirects new builds to the controller replica with the least load.
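As a rough sketch, least-load routing comes down to picking the replica with the smallest load score. The `Replica` type and its numeric `load` field are hypothetical. This sketch does not model how CloudBees CI actually computes the load.

```python
from dataclasses import dataclass
from typing import Sequence


@dataclass
class Replica:
    name: str
    load: float  # hypothetical load score; CloudBees CI's actual metric is not modeled here


def least_loaded(replicas: Sequence[Replica]) -> Replica:
    """Return the replica with the lowest load score; ties go to the first one listed."""
    if not replicas:
        raise ValueError("no replica available")
    return min(replicas, key=lambda r: r.load)


replicas = [Replica("replica-0", 0.7), Replica("replica-1", 0.2), Replica("replica-2", 0.5)]
print(least_loaded(replicas).name)  # replica-1
```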


CloudBees CI calculates the load using a simple metric that considers the following factors:


CloudBees CI provides explicit load balancing in most cases. The table below summarizes supported and unsupported cases:

| Job type | Scheduling strategy |
|---|---|
| Interactive trigger (Build Now) | Replica with the least workload |
| Scheduled build (Cron job) | Replica with the least workload |
| Branch indexing (Multibranch and Organization folder jobs) | Replica with the least workload |
| Webhooks (including multibranch events) | Replica with the least workload |
| REST API triggers | Replica with the least workload |
| | Replica with the least workload |
| | Always the same replica as the upstream build. To get an HA-compatible |
| | Replica with the least workload |
| Any other trigger type | Same replica that processed the trigger |
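The scheduling strategies in the table above can be expressed as a small dispatch function. This is an illustrative sketch, not CloudBees CI code. The load scores are hypothetical. Two table rows do not name their job type, and this sketch omits both. A third unnamed row is pinned to the upstream build's replica, and here it gets the hypothetical label `UPSTREAM`.

```python
from enum import Enum, auto
from typing import Dict, Optional


class Trigger(Enum):
    BUILD_NOW = auto()        # interactive trigger (Build Now)
    CRON = auto()             # scheduled build
    BRANCH_INDEXING = auto()  # multibranch and organization folder indexing
    WEBHOOK = auto()          # webhooks, including multibranch events
    REST_API = auto()         # REST API triggers
    UPSTREAM = auto()         # hypothetical label: build tied to an upstream build
    OTHER = auto()            # any other trigger type


# Triggers routed to the replica with the least workload.
LEAST_LOADED = {Trigger.BUILD_NOW, Trigger.CRON, Trigger.BRANCH_INDEXING,
                Trigger.WEBHOOK, Trigger.REST_API}


def pick_replica(trigger: Trigger, loads: Dict[str, float], trigger_replica: str,
                 upstream_replica: Optional[str] = None) -> str:
    """Choose the replica for a new build.

    loads: hypothetical load score per replica name.
    trigger_replica: the replica that processed the trigger.
    upstream_replica: the replica running the upstream build, if any.
    """
    if trigger in LEAST_LOADED:
        return min(loads, key=lambda name: loads[name])
    if trigger is Trigger.UPSTREAM:
        if upstream_replica is None:
            raise ValueError("an upstream-triggered build needs the upstream replica")
        return upstream_replica
    return trigger_replica  # any other trigger type stays where it was processed
```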

Plugin installation and HA

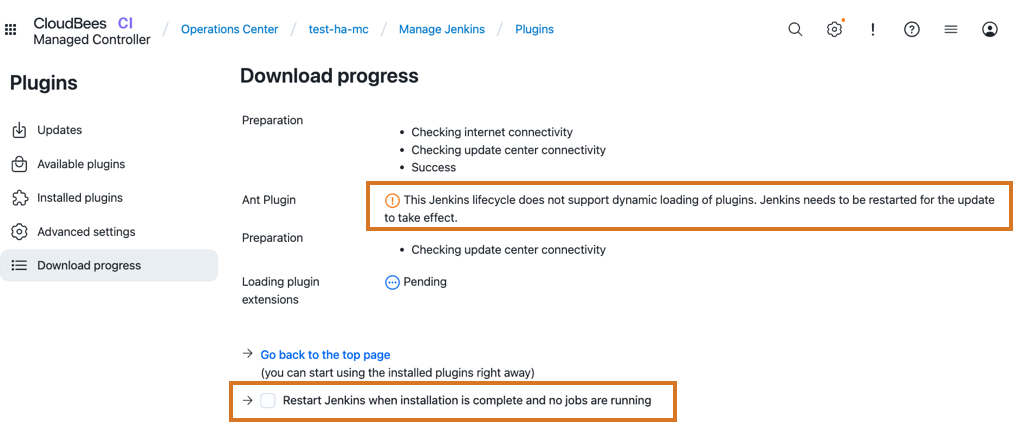

Plugins can be managed and installed from the screen. When using HA with multiple replicas, dynamic loading of plugins (plugin installation without restarting CloudBees CI) is not supported. Therefore, you must restart each replica of the controller to install or upgrade plugins.

In CloudBees CI on modern cloud platforms, when a managed controller runs in HA mode and you select Restart Jenkins when installation is complete and no jobs are running, a rolling restart is performed. When it completes, the new plugin versions are available in all replicas.

In CloudBees CI on traditional platforms, when a controller runs in HA mode with multiple replicas, you must restart all controller replicas, either manually or using your own automation.
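One possible approach for your own automation mirrors the rolling restart performed on modern platforms. You restart the replicas one at a time and wait for each to report ready before moving on. The sketch below assumes you supply two hypothetical hooks. `restart` restarts one replica, for example through your service manager. `is_ready` probes whether that replica is back. Neither is a CloudBees CI API.

```python
import time
from typing import Callable, Iterable, List


def rolling_restart(
    replicas: Iterable[str],
    restart: Callable[[str], None],
    is_ready: Callable[[str], bool],
    timeout_s: float = 600.0,
    poll_s: float = 5.0,
) -> List[str]:
    """Restart replicas one at a time, waiting for each to be ready before the next.

    `restart` and `is_ready` are hypothetical hooks provided by your automation.
    """
    restarted: List[str] = []
    for name in replicas:
        restart(name)
        deadline = time.monotonic() + timeout_s
        # Do not touch the next replica until this one is back.
        while not is_ready(name):
            if time.monotonic() > deadline:
                raise TimeoutError(f"replica {name} did not become ready in {timeout_s}s")
            time.sleep(poll_s)
        restarted.append(name)
    return restarted
```

Restarting replicas sequentially means the other replicas keep serving while one is down. The timeout stops the rollout instead of leaving several replicas down at once.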

When the controller is running in HA mode with only one replica, plugin installation behaves the same as in a non-HA controller.

HA and REST API endpoints

When running a controller in HA mode, requests to pull-based API endpoints may return information about only the controller replica that responds to the request, instead of aggregated information about all the controller replicas in the HA cluster.

Examples of these endpoints are:

-

The `/metrics` endpoint provided by the Metrics plugin.

-

The `/monitoring` endpoint provided by the Monitoring plugin.

For example, when using those plugins, an HTTP API query for JVM heap usage returns a value that corresponds only to the replica that processed the request and provides no insight into the other replicas. However, other information, such as the number of projects, is accurate because it is automatically synchronized among all the controller replicas.

In general, responses are accurate and include information aggregated from all the replicas for:

-

Global settings.

-

List of jobs, folders, etc., and their configuration.

-

List of permanent or static agents and their configuration.

-

Set of completed builds for a given job.

However, with limited exceptions, endpoints return information only about the replica responding to the request for:

-

JVM information (current heap usage, CPU, etc.).

-

Queue items.

-

List of running builds.

-

List of ephemeral agents connected to the replica.

-

Status of static agents connected to the replica.

CloudBees CI overrides the following Jenkins core endpoints to provide aggregated information about running builds, agents, and queued items:

-

The endpoint `/job/xxx/api/json?tree=builds[number,building,result]` returns aggregated information about running builds in all the controller replicas. The `number` field is mandatory when using `builds` in the `tree` parameter, because it is used to sort the builds after aggregating them from all the replicas. CloudBees CI returns a `400 Bad Request` error if the `number` field is omitted.

-

The endpoint `/computer/api/json?tree=computer[displayName,offline]` returns aggregated information about agents connected to all the controller replicas. The `displayName` field is mandatory when using `computer` in the `tree` parameter, because it uniquely identifies an agent in a Jenkins controller (the same applies to an HA cluster). CloudBees CI returns a `400 Bad Request` error if the `displayName` field is omitted.

-

The endpoint `/queue/api/json?tree=items[id,task,inQueueSince,params,stuck,url,why,buildableStartMilliseconds,pending,blocked,buildable,actions]` returns aggregated information about the queued items in all the controller replicas.
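A client can check the mandatory `tree` fields before sending a request, instead of relying on the `400 Bad Request` response. The sketch below builds such queries with Python's `urllib.parse.urlencode`. It also includes an illustrative model of the aggregation step that explains why `number` is required. The model's duplicate handling and its newest-first ordering (as in Jenkins core responses) are assumptions, not a description of CloudBees CI internals.

```python
from typing import Dict, List
from urllib.parse import urlencode

# Field each aggregated collection requires in `tree` (otherwise: 400 Bad Request).
REQUIRED_FIELD = {"builds": "number", "computer": "displayName"}


def tree_query(path: str, collection: str, fields: List[str]) -> str:
    """Build a `tree` query for an aggregated endpoint, checking required fields first."""
    required = REQUIRED_FIELD.get(collection)
    if required is not None and required not in fields:
        raise ValueError(f"tree={collection}[...] must include '{required}'")
    tree = f"{collection}[{','.join(fields)}]"
    return f"{path}?{urlencode({'tree': tree})}"  # brackets and commas are percent-encoded


def merge_builds(per_replica: List[List[Dict]]) -> List[Dict]:
    """Illustrative aggregation: merge per-replica build lists, then sort by number.

    A build number reported by several replicas is kept once (assumption);
    newest-first ordering is assumed, as in Jenkins core responses.
    """
    by_number = {build["number"]: build for builds in per_replica for build in builds}
    return sorted(by_number.values(), key=lambda b: b["number"], reverse=True)


print(tree_query("/computer/api/json", "computer", ["displayName", "offline"]))
```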


API endpoints to retrieve information about a single object (build, agent, or queue item) remain valid. HA controllers route such requests to the replica running the build, connected to the agent, or holding the queue item. These requests are handled by the replica as if it were a non-HA controller. The following are examples of such endpoints:

Using the aggregated endpoint results in the following:

CloudBees recommends using specific endpoints to retrieve information about a single object (build, agent, or queue item). Avoid using the aggregated endpoints unless you need aggregated information about all the objects.
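Single-object requests use the standard Jenkins core URL layout. The helpers below assume that layout:

- `/job/NAME/NUMBER/api/json` for a build, with nested `/job/` segments for jobs inside folders
- `/computer/NAME/api/json` for an agent
- `/queue/item/ID/api/json` for a queue item

Check the paths against your own controller before relying on them.

```python
from urllib.parse import quote


def job_path(full_name: str) -> str:
    """Map a job's full name, e.g. "team/app", to its URL path: /job/team/job/app."""
    return "".join(f"/job/{quote(part, safe='')}" for part in full_name.split("/"))


def build_api(full_name: str, number: int) -> str:
    """API path for a single build."""
    return f"{job_path(full_name)}/{number}/api/json"


def agent_api(agent_name: str) -> str:
    """API path for a single agent; names with spaces are percent-encoded."""
    return f"/computer/{quote(agent_name, safe='')}/api/json"


def queue_item_api(item_id: int) -> str:
    """API path for a single queue item."""
    return f"/queue/item/{item_id}/api/json"


print(build_api("team/app", 42))  # /job/team/job/app/42/api/json
```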

You can also configure third-party monitoring solutions, such as Prometheus with the CloudBees Prometheus Metrics plugin, to provide aggregated information from all the controller replicas.


When using pull-based endpoints, whether responses provide aggregated or single-replica information depends on how the plugins and endpoints that provide the information are implemented. CloudBees recommends testing those pull-based endpoints beforehand to verify which data they return. The scenario is different for push-based monitoring plugins, where data is sent directly from your CloudBees CI instance to the monitoring application. In that case, depending on your requirements, you can consolidate the data from the various replicas by sending it to the same container, or keep it separate.
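When several replicas push to the same destination, tagging each sample with its replica keeps the data usable both per replica and as a cluster-wide total. The `(replica_id, metric_name, value)` sample shape and the metric name below are hypothetical. They illustrate the idea and are not the format of any particular monitoring plugin.

```python
from collections import defaultdict
from typing import Dict, Iterable, Tuple

# (replica_id, metric_name, value): a hypothetical shape for pushed samples.
Sample = Tuple[str, str, float]


def consolidate(samples: Iterable[Sample]) -> Dict[str, Dict[str, float]]:
    """Group samples sent to one destination by metric, keeping each replica's value."""
    by_metric: Dict[str, Dict[str, float]] = defaultdict(dict)
    for replica, metric, value in samples:
        by_metric[metric][replica] = value  # a later sample from the same replica wins
    return dict(by_metric)


def cluster_total(by_metric: Dict[str, Dict[str, float]], metric: str) -> float:
    """Sum a metric across replicas, e.g. total JVM heap used in the HA cluster."""
    return sum(by_metric.get(metric, {}).values())
```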

Non-aggregated information in HA controllers, build navigation gestures, and builds in progress

HA controllers provide information about the HA cluster in places like the screen. They also provide aggregated information from all the controller replicas through the aggregated API endpoints and in other locations, such as the Build Queue, Build Executor Status, and Builds widgets.

However, some elements in the CloudBees CI UI might not provide aggregated information about the HA cluster, such as elements in the Jenkins dashboard that provide information about running builds. For example, the Status of the last build and Weather report (aggregated status of recent builds) list view columns might not be accurate.

If an element in the UI does not provide accurate information in an HA controller, it is likely because the information reflects only the status of the replica currently serving the request and not the aggregated information of the entire cluster.

CloudBees Pipeline Explorer ensures that navigation gestures like Next Build and Previous Build work seamlessly across builds running on replicas other than the one holding the user's session.

Without CloudBees Pipeline Explorer, those gestures skip builds running on other replicas. After the builds finish, you can navigate through them as usual, whether or not you use CloudBees Pipeline Explorer.

HA-related known plugin issues

HA and the Jenkins Docker plugin

By default, the Docker plugin deletes containers that were provisioned on demand (using a label reference or the dockerNode step) and are not connected to the current instance.

These containers are considered orphans.

In an HA controller with multiple replicas, replicas other than the one that launched the container might consider the container orphaned and remove it. Orphan container checks occur every 5 minutes. Therefore, builds that take longer than 5 minutes and use agents (containers) provided by the Docker plugin always fail.

To prevent this issue, disable this behavior by setting the following system property:

-Dcom.nirima.jenkins.plugins.docker.DockerContainerWatchdog.enabled=false
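The following simplified model is not the Docker plugin's code. It shows why a replica's orphan check removes a container that another replica is still using, and what disabling the watchdog changes. The container IDs and the per-replica `connections` mapping are hypothetical inputs.

```python
from typing import Dict, List, Set


def watchdog_removals(replica: str, containers: List[str],
                      connections: Dict[str, Set[str]], enabled: bool = True) -> List[str]:
    """Simplified model of one orphan check run on `replica`.

    containers: IDs of on-demand containers that currently exist.
    connections: container IDs connected to each replica.
    enabled: False models setting
        -Dcom.nirima.jenkins.plugins.docker.DockerContainerWatchdog.enabled=false
    """
    if not enabled:
        return []
    local = connections.get(replica, set())
    # Anything not connected to *this* replica looks orphaned, even if another
    # replica launched it and a build is still running on it.
    return [c for c in containers if c not in local]


conns = {"replica-a": {"agent-1"}, "replica-b": set()}
print(watchdog_removals("replica-b", ["agent-1"], conns))  # ['agent-1']
```

Because each replica runs the check every 5 minutes, a build that runs longer than that is exposed to at least one check from a replica that does not see its container.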

HA and the Jenkins Amazon EC2 plugin

The Amazon EC2 plugin allows Jenkins to provision EC2 agents on demand.

When using this plugin in a controller running in HA mode, the following options must be selected in the Cloud configuration:

-

No delay provisioning

-

Clean up Orphan Nodes

-

Terminate idle agents during shutdown

-

Avoid Using Orphaned Nodes

Additionally, the Idle termination time setting must be configured according to the following guidelines:

-

If Maximum Total Uses is set to a value greater than 1, specify a shorter Idle termination time (for example, ~10 minutes).

-

If you use Minimum number of spare instances, specify a longer Idle termination time (for example, ≥ 60 minutes).

-

If Maximum Total Uses and Minimum number of spare instances are both set to 0, set the Idle termination time to 1 minute (the minimum supported value).

Other settings for these options are not HA-compatible and may cause unexpected behavior in HA controllers.

HA and the Jenkins Swarm plugin

The Swarm plugin allows Jenkins nodes to join a controller, forming an ad-hoc cluster.

This plugin is not compatible with High Availability (HA) and should not be used in controllers running in HA mode.

HA and the Kubernetes plugin

The Kubernetes plugin allows Jenkins to provision agents on demand in a Kubernetes cluster.

The following Pod template settings are not HA-compatible and should not be used in controllers running in HA mode:

-

Time in minutes to retain agent when idle: In an HA controller, Kubernetes agents should be deleted immediately after completing their assigned task. Retaining agents after they become idle may result in orphaned agents if a controller replica exits for any reason.

-

Concurrency Limit: Limits the maximum number of agents the Kubernetes cloud can provision at once. In HA mode, this setting is not synchronized across controller replicas, which may allow agent provisioning to exceed the specified limit.

Instead, you can limit the number of agents that can be provisioned by using Kubernetes capabilities. Refer to the official Kubernetes documentation for more information.
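The risk with an unsynchronized Concurrency Limit can be shown with a small simulation. Each simulated replica checks the limit against its own local count only, which is the situation the text describes. This is an illustrative model, not the Kubernetes plugin's implementation.

```python
from typing import Dict, List


def provision(requests: List[str], limit: int) -> Dict[str, int]:
    """Simulate replicas that each enforce `limit` against their own agent count only.

    requests: for each provisioning request, the replica that received it.
    Returns the number of agents each replica provisioned.
    """
    local_counts: Dict[str, int] = {}
    for replica in requests:
        count = local_counts.get(replica, 0)
        if count < limit:  # the check never sees agents provisioned by other replicas
            local_counts[replica] = count + 1
    return local_counts


counts = provision(["r0", "r1", "r2"] * 5, limit=4)
print(sum(counts.values()))  # 12 agents, although the configured limit is 4
```

In this model, the total can reach the limit multiplied by the number of replicas. That is why a limit enforced by Kubernetes itself is the safer option.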