CloudBees recommends switching your controllers to High Availability (active/active). HA (active/active) offers better performance, because workloads are shared among the replicas in a controller, and replicas can fail over with zero downtime.

High Availability (active/passive) is not supported on Windows environments.

To troubleshoot your HA (active/passive) installation, refer to High Availability installation troubleshooting.

CloudBees CI on traditional platforms can run in a high availability (active/passive) setup, where two or more JVMs form a so-called "HA singleton" cluster to ensure business continuity for Jenkins. This improves the availability of the service against unexpected problems in the JVM, the hardware it runs on, and so on. When a Jenkins JVM becomes unavailable (for example, when it stops responding or dies), other nodes in the cluster automatically take over the role of the controller, restoring service with minimal interruption.

It is also important to understand what this feature does not do in its current form. It is not a symmetric cluster in which participating nodes share workloads. At any given point, only one of the nodes performs the controller role (hence "HA singleton"). Because of this, when a failover takes place, users experience a brief downtime, comparable to someone restarting a Jenkins controller in a non-HA setup. Builds that were in progress are lost, too.

This guide walks through a high-level overview, details the implementation and configuration steps, and finishes with troubleshooting tips.

The described configuration assumes you are using operations center. You can also configure HA with CloudBees CI on traditional platforms standalone controllers. Configuration differences are noted when applicable.

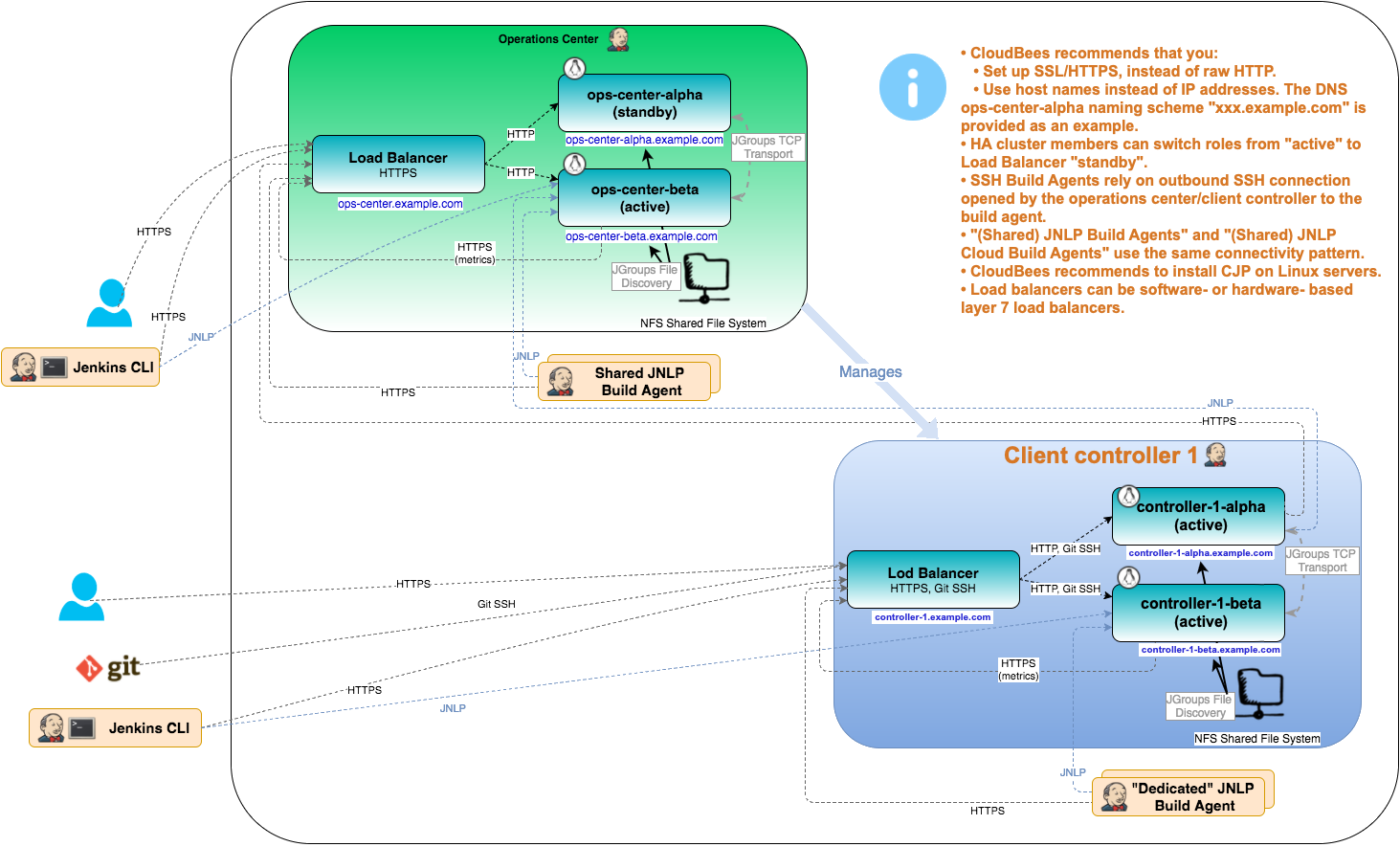

The following diagram outlines the principal design details with routing for each communication protocol.

Alpha and Beta

To create the "HA singleton", you need two copies of operations center: Alpha and Beta (active and standby, respectively). In HA mode, the two Jenkins instances cooperatively elect the "primary" JVM, and depending on the outcome of this election, members of the cluster start or stop the client controller in the same JVM. From the viewpoint of the code inside operations center, this is as if it were started or stopped programmatically. Operations center relies on JGroups for the underlying group membership service.

HA failover

A failover effectively consists of (1) shutting down the current CloudBees CI JVM, followed by (2) starting it up in another location. Step 1 does not always happen, for example when the current controller crashes. Because both JVMs work with the same $JENKINS_HOME, this failover process has the following characteristics:

-

Jenkins global settings, configuration of jobs/users, fingerprints, record of completed builds (including archived artifacts, test reports, etc.), will all survive a failover.

-

User sessions are lost. If your CloudBees CI installation requires users to sign in, they’ll be asked to sign in again.

During the startup phase of the failover, CloudBees CI cannot serve inbound requests or run builds. Because the new JVM has to read $JENKINS_HOME during startup before it can serve requests, a failover typically takes a few minutes, not a few seconds.

For HA client controllers, builds that were in progress normally do not survive a failover, although their records do. Builds based on Jenkins Pipeline jobs, however, continue to execute. No attempt is made to re-execute interrupted builds, though the Restart Aborted Builds plugin lists the aborted builds.

Load balancer

In general, all CloudBees CI traffic should be routed through the load balancer, except the JNLP transport. The JNLP transport is used by client controllers to connect to operations center and by Build Agents configured to use the "Java Web Start" launcher.

Operations center accepts incoming JNLP connections from multiple components: client controllers and agents (usually shared agents). On a failover (when a new operations center instance takes the primary role in the cluster), all those JNLP connections are automatically restored.

An alternative to proxying the TCP sockets is to use the system property hudson.TcpSlaveAgentListener.hostName to ensure that each Jenkins instance presents the correct X-Jenkins-CLI-Host header in its HTTP responses.

Either method has implications as to the mutual visibility of the agent nodes and the Jenkins nodes.

The value of the hudson.TcpSlaveAgentListener.hostName system property is available to clients as an HTTP header (X-Jenkins-CLI-Host), which is included in all HTTP responses. JNLP clients use this header to determine which hostname to use for the JNLP protocol. After a failover, clients keep attempting to connect to the JNLP port until a connection is successfully established. If the system property is not provided, the host name of the CloudBees CI root URL is used. The hudson.TcpSlaveAgentListener.hostName property must be set correctly on all instances for load balancing to work in an HA configuration.
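To check which hostname an instance advertises, you can inspect its response headers. The following is a minimal sketch, assuming curl is available on the client; the header name is the one described above, the function name is hypothetical, and the URL in the usage comment is a placeholder for one of your instances. It reads raw HTTP response headers on standard input and prints the X-Jenkins-CLI-Host value, matching the header name case-insensitively.

```shell
#!/usr/bin/env bash
# Print the X-Jenkins-CLI-Host value from raw HTTP response headers on stdin.
# Header names are case-insensitive, so compare them in lower case, and strip
# the carriage return that ends every HTTP header line.
cli_host_from_headers() {
  tr -d '\r' | awk -F': *' 'tolower($1) == "x-jenkins-cli-host" { print $2; exit }'
}

# Hypothetical usage against one instance (placeholder URL):
#   curl -sI http://alpha.example.com:8888/ | cli_host_from_headers
```

Run it against each instance directly (bypassing the load balancer) to confirm that every node advertises its own resolvable hostname.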

There are many software and hardware load balancer solutions. CloudBees recommends the open source solution HAProxy, which doubles as a reverse proxy. For a truly highly available setup, HAProxy itself needs to be made highly available. Support for HAProxy is available from HAProxy Technologies Inc.

In general, the load balancers in an HA setup are configured to:

-

Route HTTP and HTTPS traffic

-

Route SSHD TCP traffic

-

Check the heartbeat at /ha/health-check

-

(Optional if using HTTPS) Set location of SSL key for HTTPS

This guide will detail the setup and configuration for HAProxy. For guidance on configuring other load balancers, please see:

HTTPS and SSL

In addition to acting as a load balancer and reverse proxy, HAProxy acts as the SSL termination point for operations center. This allows internal traffic to remain in the default HTTP configuration while providing a secured endpoint for users.

If you do not have access to your environment's SSL key files, reach out to your operations team.

If the SSL certificate used by HAProxy is not trusted by default by the operations center JVM, it must be added to the JVM keystores of both operations centers and all client controllers.

If the SSL certificate is used to secure the connection to Elasticsearch, it must be added to the JVM keystores of both operations centers.

Note: When making client controllers highly available, if the SSL certificate used by HAProxy is not trusted by default by the operations center JVM, it is recommended that it be added to the keystores of both operations centers.
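Adding the HAProxy certificate to a JVM truststore is typically done with the JDK's keytool. This is a sketch under stated assumptions: the function name, the certificate file name, the default alias haproxy, and the truststore path and password (matching the path/to/jenkins-truststore.jks and changeit placeholders used later in this guide) are all hypothetical. The function skips the import when the alias already exists, so it can be run repeatedly on every operations center and client controller.

```shell
#!/usr/bin/env bash
# Import a certificate into a Java truststore using the JDK's keytool.
# Usage: import_ha_cert CERT_FILE TRUSTSTORE PASSWORD [ALIAS]
# The default alias "haproxy" is a hypothetical choice.
import_ha_cert() {
  local cert="$1" store="$2" pass="$3" alias="${4:-haproxy}"
  # keytool -importcert fails if the alias is already taken, so check first.
  if keytool -list -keystore "$store" -storepass "$pass" -alias "$alias" >/dev/null 2>&1; then
    echo "alias '$alias' already present in $store"
    return 0
  fi
  # -noprompt skips the interactive "Trust this certificate?" question.
  # If the truststore file does not exist yet, keytool creates it.
  keytool -importcert -noprompt \
    -alias "$alias" -file "$cert" \
    -keystore "$store" -storepass "$pass"
}

# Example (hypothetical paths):
#   import_ha_cert haproxy.crt /path/to/jenkins-truststore.jks changeit
```

Each JVM must then be pointed at this truststore (or the certificate must be added to the JVM's default cacerts) for the import to take effect.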

For further information, please refer to How to install a new SSL certificate

Shared storage

Each member node of the operations center HA cluster needs to see a single coherent shared file system that can be read and written simultaneously. That is to say, for nodes Alpha and Beta in the cluster, if Alpha creates a file in $JENKINS_HOME, Beta needs to be able to see it within a reasonable amount of time. A "reasonable amount of time" here means the time window during which you are willing to lose data in case of a failure. This is commonly accomplished with an NFS shared file system.

To set up the NFS Server please see: NFS Guide

Note: For a truly highly available Jenkins, the NFS storage itself needs to be made highly available. There are many resources on the web describing how to do this; see the Linux HA-NFS Wiki as a starting point.

CloudBees Jenkins HA monitor tool

The CloudBees Jenkins HA monitor tool is optional; it is not required to set up an HA cluster. It is a small background application that runs setup and teardown scripts when the primary Jenkins JVM moves between nodes, for example when the primary JVM becomes unresponsive. Such scripts can only be reliably triggered from outside Jenkins, and they normally require root privileges to run. The tool can be downloaded from the jenkins-ha-monitor section of the download site.

Its three defining options are:

-

The -home option specifies the location of $JENKINS_HOME. The monitor tool picks up network configuration and other important parameters from there, so it needs to know this location.

-

The -host-promotion option specifies the location of the promotion script, which is executed when the primary Jenkins JVM moves from another system to this system as a result of an election. In native packages, this file is placed at /etc/jenkins-ha-monitor/promotion.sh.

-

The -host-demotion option specifies the demotion script, which is the opposite of the promotion script and is executed when the primary Jenkins JVM moves from this system to another system. In native packages, this file is placed at /etc/jenkins-ha-monitor/demotion.sh.

Promotion and demotion scripts need to be idempotent: the monitor tool may run the promotion script on an already promoted node, and the demotion script on an already demoted node. This can happen, for example, when a power outage hits a standby node and the node comes back up. The monitor tool runs the demotion script again on that node, since it cannot be certain about the state of the node before the power outage.
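As an illustration of the idempotency requirement, the following sketch assumes a hypothetical deployment in which promotion means claiming a floating IP address and demotion means releasing it. The address 192.0.2.10/24, the interface eth0, and the function names are placeholders; the monitor tool does not prescribe these actions. Each action checks the current state before changing it, so running it twice is harmless.

```shell
#!/usr/bin/env bash
# Hypothetical promotion/demotion actions for jenkins-ha-monitor:
# claim or release a floating IP. VIP and DEV are placeholders.
VIP="${VIP:-192.0.2.10/24}"
DEV="${DEV:-eth0}"

has_vip() {
  # "ip -o addr show dev DEV" prints one line per address, e.g. "... inet 192.0.2.10/24 ..."
  ip -o addr show dev "$DEV" | grep -qwF "inet ${VIP}"
}

promote() {
  # Adding an address that is already assigned fails, so only add it if missing.
  has_vip || ip addr add "$VIP" dev "$DEV"
}

demote() {
  # Only delete the address if this node still holds it.
  if has_vip; then
    ip addr del "$VIP" dev "$DEV"
  fi
}
```

A promotion.sh would source these functions and call promote, and a demotion.sh would call demote; both need root privileges to change addresses.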

Run the tool with the -help option to see the complete list of available options. The configuration file read when the service starts is located at /etc/sysconfig/jenkins-ha-monitor.
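For a manual run outside the packaged service (for example, while testing promotion and demotion scripts), the three options can be passed on the command line. A minimal sketch, assuming the jar is in the current directory and the scripts are at the native-package default locations; the function name run_ha_monitor is hypothetical. The tool normally runs as root, since the scripts usually need root privileges.

```shell
#!/usr/bin/env bash
# Start the HA monitor in the foreground with its three defining options.
# Usage: run_ha_monitor [JAR] [JENKINS_HOME]
run_ha_monitor() {
  local jar="${1:-jenkins-ha-monitor.jar}" home="${2:-/var/lib/jenkins}"
  java -jar "$jar" \
    -home "$home" \
    -host-promotion /etc/jenkins-ha-monitor/promotion.sh \
    -host-demotion /etc/jenkins-ha-monitor/demotion.sh
}
```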

Setup procedure

The HA setup in operations center provides the means for multiple JVMs to coordinate and ensure that the Jenkins controller is running somewhere, but it relies on the availability of the storage that houses $JENKINS_HOME and of the HTTP reverse proxy mechanism that hides a failover from users who are accessing Jenkins. Apart from NFS storage and the reverse proxy mechanism, operations center can run in a wide range of environments. The following sections describe the parameters required from these environments and discuss more examples of the deployment mode.

The setup procedure follows this basic format:

-

Before you begin

-

Configure Shared Storage

-

Configure operations center and client controllers

-

Install operations center HA monitor tool

-

Configure HAProxy

After completing these steps, follow the Testing and Troubleshooting section.

Configure shared storage on Alpha and Beta

All the member nodes of an HA cluster need to see a single coherent file system that can be read and written to simultaneously. Creating a shared storage mount is outside the scope of this guide. Please see the Shared Storage section, above.

Mount the shared storage on Alpha and Beta:

$ mount -t nfs -o rw,hard,intr sierra:/jenkins /var/lib/jenkins

/var/lib/jenkins is chosen to match what the CloudBees Jenkins Enterprise packages use as $JENKINS_HOME. If you choose a different directory, update /etc/default/jenkins (on Debian) or /etc/sysconfig/jenkins (on Red Hat and SUSE) so that $JENKINS_HOME points to the correct directory.

Before continuing, test that the mount point is accessible and that permissions are correct on both machines: run touch /<mount_point>/test.txt on Alpha, and then try editing the file on Beta.
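The same check can be scripted so it leaves no stray file behind. A minimal sketch: the function names and the .ha-storage-check marker file are hypothetical. Run write_marker on Alpha, then pass the token it prints to check_marker on Beta, using the shared mount point as the directory on both.

```shell
#!/usr/bin/env bash
# Step 1 (on Alpha): write a unique token to the shared directory and print it.
write_marker() {
  local dir="$1" token
  token="ha-check-$(uname -n)-$$-$(date +%s)"
  echo "$token" > "$dir/.ha-storage-check" && echo "$token"
}

# Step 2 (on Beta): succeed only if the same token is visible and the file
# can be modified from this node; the marker is removed afterwards.
check_marker() {
  local dir="$1" expected="$2" file
  file="$dir/.ha-storage-check"
  # Fails if the file is missing, unreadable, or holds a different token.
  [ "$(head -n 1 "$file" 2>/dev/null)" = "$expected" ] || return 1
  # Prove this node can also write to the shared file, then clean up.
  echo "seen by $(uname -n)" >> "$file" && rm -f "$file"
}
```

For example, write_marker /var/lib/jenkins on Alpha prints a token, and check_marker /var/lib/jenkins followed by that token on Beta returns success only if Beta sees the same file and can modify it.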

Install and configure operations center and client controllers

Choose the appropriate Debian/Red Hat/openSUSE package format depending on the type of your distribution. Install the operations center or client controller service on Alpha and Beta.

Upon installation, both instances of CloudBees CI start running. Stop them while you work on the HA setup configuration, using sudo systemctl stop cloudbees-core-oc (for operations center) or sudo systemctl stop cloudbees-core-cm (for a client controller). If you do not know how to set up a Java argument on CloudBees CI, refer to this KB article.

When operations center or a client controller is used in an HA configuration, the $JENKINS_HOME Jenkins argument on both the Alpha and Beta instances must point to the shared NFS mount.

In the setup wizard, be sure to install the CloudBees High Availability Management plugin (if you are using configuration as code, the plugin is named cloudbees-ha).

The basic HA functionality will work without the plugin, but some features such as the management GUI and /ha/health-check endpoint require it.

As an important performance optimization, do not extract the WAR file to the $JENKINS_HOME/war directory on the shared file system. In a rolling upgrade scenario, upgrading the secondary instance and then booting it (in standby) can corrupt this directory. Some configurations extract the WAR there by default, but WAR extraction can easily be redirected to a local cache (ideally an SSD, for better Jenkins core I/O) on the container or VM's local file system with the JENKINS_ARGS options --webroot=$LOCAL_FILESYSTEM/war --pluginroot=$LOCAL_FILESYSTEM/plugins. For example, on Debian installations, where $NAME refers to the name of the Jenkins instance: --webroot=/var/cache/$NAME/war --pluginroot=/var/cache/$NAME/plugins.
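As a sketch only, assuming a Debian-style defaults file (such as /etc/default/$NAME) that the service sources as shell and whose JENKINS_ARGS are passed to Jenkins, the redirection could look like the following. Where your installation keeps these options may differ, so check the KB article on Java arguments mentioned above.

```shell
# Hypothetical excerpt from /etc/default/$NAME (Debian-style defaults file).
# /var/cache/$NAME must be on the node's local disk, not on the shared NFS mount.
JENKINS_ARGS="$JENKINS_ARGS --webroot=/var/cache/$NAME/war --pluginroot=/var/cache/$NAME/plugins"
```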

$JENKINS_HOME is read intensively during startup. If bandwidth to your shared storage is limited, you will see the most impact on startup performance. High latency causes a similar issue, but this can be mitigated somewhat by raising the startup concurrency with the system property -Djenkins.InitReactorRunner.concurrency=8.

To ensure that TCP inbound agents can connect in an HA configuration, each instance must set the system property -Dhudson.TcpSlaveAgentListener.hostName= to a hostname (preferred, and required when using HTTPS) or IP address (unsupported for HTTPS configurations) of that instance that can be resolved and connected to by all client controllers and build agents that use the JNLP connection protocol. This requires more exposure of the operations center servers, as all instances must be addressable by all client controllers.

Because we are configuring HTTPS traffic, update JAVA_ARGS to add -Djavax.net.ssl.trustStore=path/to/jenkins-truststore.jks -Djavax.net.ssl.trustStorePassword=changeit. To debug SSL issues, add -Djavax.net.debug=all. For more information, see Oracle's Debugging SSL/TLS Connections and How to install a new SSL certificate.
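Taken together, the Java arguments from the preceding paragraphs could look like the following on Alpha. This is a sketch, assuming a sourced configuration file that passes JAVA_ARGS to the JVM; alpha.example.com and the truststore path are placeholders, and Beta would set its own hostname.

```shell
# Hypothetical JAVA_ARGS excerpt for Alpha; Beta sets its own hostName value.
# Hostname advertised to JNLP clients (must resolve from all controllers and agents).
JAVA_ARGS="$JAVA_ARGS -Dhudson.TcpSlaveAgentListener.hostName=alpha.example.com"
# Higher startup concurrency to offset shared-storage latency.
JAVA_ARGS="$JAVA_ARGS -Djenkins.InitReactorRunner.concurrency=8"
# Truststore containing the HAProxy certificate.
JAVA_ARGS="$JAVA_ARGS -Djavax.net.ssl.trustStore=/path/to/jenkins-truststore.jks"
JAVA_ARGS="$JAVA_ARGS -Djavax.net.ssl.trustStorePassword=changeit"
# Uncomment only while debugging TLS handshakes (very verbose):
# JAVA_ARGS="$JAVA_ARGS -Djavax.net.debug=all"
```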

When using the Red Hat package (RPM), it is highly recommended to set the option JENKINS_INSTALL_SKIP_CHOWN to true in /etc/sysconfig/jenkins after the installation.

This option prevents future upgrades from running chown on your JENKINS_HOME folder, which may take a long time (especially in an HA setup, where JENKINS_HOME is on a remote NFS file system and is updated by the upgrade of both nodes).


To start the instances, use sudo systemctl start cloudbees-core-oc (for the operations center) or sudo systemctl start cloudbees-core-cm (for the controller).

Install the HA monitor tool

If any actions are required on the server to complete the HA failover, you can set up a monitoring service. To do this, log on to Alpha and install the jenkins-ha-monitor package. This monitoring program runs as root and watches CloudBees CI; when the role transition occurs, it executes the supplied promotion script or demotion script.

JGroups communication folder requirements

The HA cluster uses JGroups for communication between nodes, which creates a shared folder for coordination. This folder requires specific permission settings:

Troubleshooting permission issues

If you encounter startup errors or communication problems:

This reset process allows JGroups to recreate the communication folder with the correct permissions.

The monitor tool is packaged into a single jar file that can be executed as java -jar jenkins-ha-monitor.jar. For easier installation, it is also available as jenkins-ha-monitor RPM and DEB packages from the jenkins-ha-monitor section of the download site.

Install it as you would any RPM file, for example:

sudo rpm -i jenkins-ha-monitor-<version>.noarch.rpm

To uninstall it, run:

sudo rpm -e jenkins-ha-monitor

By default, the CloudBees Jenkins HA monitor tool logs to /var/log/jenkins/jenkins-ha-monitor.log.

Configure HAProxy

Let’s expand on this setup by introducing an external load balancer and reverse proxy that receives traffic from users, determines the primary JVM based on the heartbeat, and then directs the traffic to the active primary JVM. CloudBees recommends configuring HTTPS, and this guide is tailored for HTTPS. HAProxy can be installed on most Linux systems from native packages, such as apt-get install haproxy or yum install haproxy; however, CloudBees recommends installing HAProxy version 1.6 or newer. For operations center HA, the configuration file (normally /etc/haproxy/haproxy.cfg) should look like the following:

global
    log 127.0.0.1 local0
    log 127.0.0.1 local1 notice
    maxconn 4096
    user haproxy
    group haproxy

    # Default SSL material locations
    ca-base /etc/ssl/certs
    crt-base /etc/ssl/private

    # Default ciphers to use on SSL-enabled listening sockets.
    # For more information, see ciphers(1SSL). This list is from:
    # https://hynek.me/articles/hardening-your-web-servers-ssl-ciphers/
    ssl-default-bind-ciphers ECDH+AESGCM:DH+AESGCM:ECDH+AES256:DH+AES256:ECDH+AES128:DH+AES:ECDH+3DES:DH+3DES:RSA+AESGCM:RSA+AES:RSA+3DES:!aNULL:!MD5:!DSS
    ssl-default-bind-options no-sslv3
    tune.ssl.default-dh-param 2048

defaults
    log global
    option http-server-close
    option log-health-checks
    option dontlognull
    timeout http-request 10s
    timeout queue 1m
    timeout connect 5000
    timeout client 50000
    timeout server 50000
    timeout http-keep-alive 10s
    timeout check 500
    default-server inter 5s downinter 500 rise 1 fall 1

# redirect HTTP to HTTPS
listen http-in
    bind *:80
    mode http
    redirect scheme https code 301 if !{ ssl_fc }

listen https-in
    # change the location of the pem file
    bind *:443 ssl crt /etc/ssl/certs/your.pem
    mode http
    option httplog
    option httpchk HEAD /ha/health-check
    option forwardfor
    option http-server-close
    # replaces the "reqadd X-Forwarded-Proto:\ https" directive, which HAProxy 2.1+ no longer accepts
    http-request set-header X-Forwarded-Proto https
    # alpha and beta should be replaced with hostname (or ip) and port
    # 8888 is the default for CJOC, 8080 is the default for client controllers
    server alpha alpha:8888 check
    server beta beta:8888 check

listen ssh
    bind 0.0.0.0:2022
    mode tcp
    option tcplog
    option httpchk HEAD /ha/health-check
    # alpha and beta should be replaced with hostname (or ip) and port
    # 8888 is the default for CJOC, 8080 is the default for client controllers
    server alpha alpha:2022 check port 8888
    server beta beta:2022 check port 8888

The global section contains stock settings, and the defaults section has been configured with typical timeout settings. You must configure the listen blocks for your particular environment. To determine the port configuration of an existing installation, refer to the How to add Java arguments to Jenkins guide.

The "Default SSL material locations" block and the bind *:443 ssl crt /etc/ssl/certs/your.pem line define the paths to the necessary SSL key files.

Together, the http-in and https-in sections determine the bulk of the configuration necessary for operations center routing. The http-in block redirects plain HTTP to HTTPS, and the https-in block tells HAProxy to forward traffic to two servers, alpha and beta, and to periodically check their health by sending a HEAD request to /ha/health-check. Unlike active nodes, standby nodes do not respond positively to this health check, and that is how HAProxy determines traffic routing.
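To see the same routing decision HAProxy makes, you can probe each node's health check yourself. A minimal sketch, assuming curl is installed and the nodes are reachable on port 8888 (the operations center default from the configuration above); the function names are hypothetical. Following HAProxy's httpchk rules, a 2xx or 3xx status counts as healthy.

```shell
#!/usr/bin/env bash
# Print only the HTTP status of a HEAD request to NODE_URL/ha/health-check;
# curl prints 000 when the node is unreachable.
health_status() {
  curl -s -I -o /dev/null -m 5 -w '%{http_code}' "$1/ha/health-check"
}

# Print the first node URL that passes the health check; return 1 if none does.
find_active() {
  local url code
  for url in "$@"; do
    code=$(health_status "$url") || true
    # Like HAProxy's httpchk, treat 2xx and 3xx responses as "up".
    case "$code" in
      2??|3??) echo "$url"; return 0 ;;
    esac
  done
  return 1
}
```

For example, find_active http://alpha:8888 http://beta:8888 prints the URL of the node that currently holds the primary role, or returns a non-zero status if neither node passes the check.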

The ssh section is configured to forward TCP traffic. For this service, HAProxy uses the same health check, sent to the application port (check port 8888). This ensures that all services fail over together when the health check fails.