Cluster operations is a facility for performing maintenance operations on items in operations center, such as client controllers and update centers. The operations available depend on the item type: for example, you can back up or restart client controllers, and install or upgrade plugins in update centers.

You run these operations either through a dedicated job type or through preset operations available at various locations in the CloudBees CI UI.

Cluster Operations jobs

You create a Cluster Operations job the same way as any other job in CloudBees CI: select New Item in the view where you want to create it, enter a name, and select Cluster Operations as the item type.

Concepts

A Cluster Operations job can contain one or more operations, which run sequentially when the project runs.

An operation has:

-

A target type that it operates on, for example, client controllers or update centers.

-

A set of target items, obtained from a selected source and narrowed down by any configured filters. The operation processes the target items in parallel; you can configure the maximum number of items processed in parallel in the Advanced Operation Options section.

-

A list of steps to perform in sequence on each target item.

The available sources, filters, and steps depend on the target type that the operation supports.
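To make the relationship between sources, filters, steps, and parallelism concrete, here is a minimal Java sketch of the concepts above. It is a hypothetical model, not the CloudBees CI API: the `ClusterOpModel` class, the `Operation` record, and its `maxParallel` field are illustrative names, and plain strings stand in for client controllers. It assumes Java 16 or later for records.

```java
import java.util.ArrayList;
import java.util.Collections;
import java.util.List;
import java.util.concurrent.ExecutionException;
import java.util.concurrent.ExecutorService;
import java.util.concurrent.Executors;
import java.util.concurrent.Future;
import java.util.function.Consumer;
import java.util.function.Predicate;
import java.util.function.Supplier;

// Hypothetical model of a Cluster Operations job; these names are NOT a CloudBees CI API.
final class ClusterOpModel {

    // One operation: a source of candidate items, filters that narrow them down,
    // steps applied in order to each target, and a cap on parallel items.
    record Operation<T>(Supplier<List<T>> source,
                        List<Predicate<T>> filters,
                        List<Consumer<T>> steps,
                        int maxParallel) {

        // Target items = everything from the source that passes every filter.
        List<T> targets() {
            List<T> result = new ArrayList<>();
            for (T item : source.get()) {
                if (filters.stream().allMatch(f -> f.test(item))) {
                    result.add(item);
                }
            }
            return result;
        }

        // Items run in parallel (at most maxParallel at a time);
        // the steps for a single item run sequentially.
        void run() {
            ExecutorService pool = Executors.newFixedThreadPool(maxParallel);
            try {
                List<Future<?>> futures = new ArrayList<>();
                for (T item : targets()) {
                    futures.add(pool.submit(() -> steps.forEach(step -> step.accept(item))));
                }
                for (Future<?> future : futures) {
                    future.get(); // wait, and surface any step failure
                }
            } catch (InterruptedException e) {
                Thread.currentThread().interrupt();
                throw new IllegalStateException(e);
            } catch (ExecutionException e) {
                throw new IllegalStateException("a step failed", e.getCause());
            } finally {
                pool.shutdown();
            }
        }
    }

    // A job runs its operations one after another.
    static void runJob(List<Operation<?>> operations) {
        for (Operation<?> operation : operations) {
            operation.run();
        }
    }

    public static void main(String[] args) {
        List<String> log = Collections.synchronizedList(new ArrayList<>());
        Operation<String> quietDown = new Operation<String>(
                () -> List.of("controller-a", "controller-b-offline", "controller-c"),
                List.of(name -> !name.endsWith("-offline")), // stand-in for an "is online" filter
                List.of(name -> log.add("print " + name), name -> log.add("quiet down " + name)),
                2);
        runJob(List.of(quietDown));
        System.out.println(log);
    }
}
```

Running `main` applies both steps to `controller-a` and `controller-c` only. The interleaving of lines across the two controllers can vary between runs because they are processed in parallel, but each controller's steps always run in order.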

Tutorial

To create a cluster operation:

| To run the operation successfully, the user requires the |

-

On the root level or within a folder of operations center, select New Item.

-

Specify a name for the cluster operation, for example, "Quiet down all controllers".

-

Select Cluster Operations as the item type.

You will then be directed to the configuration screen for the newly created job.

-

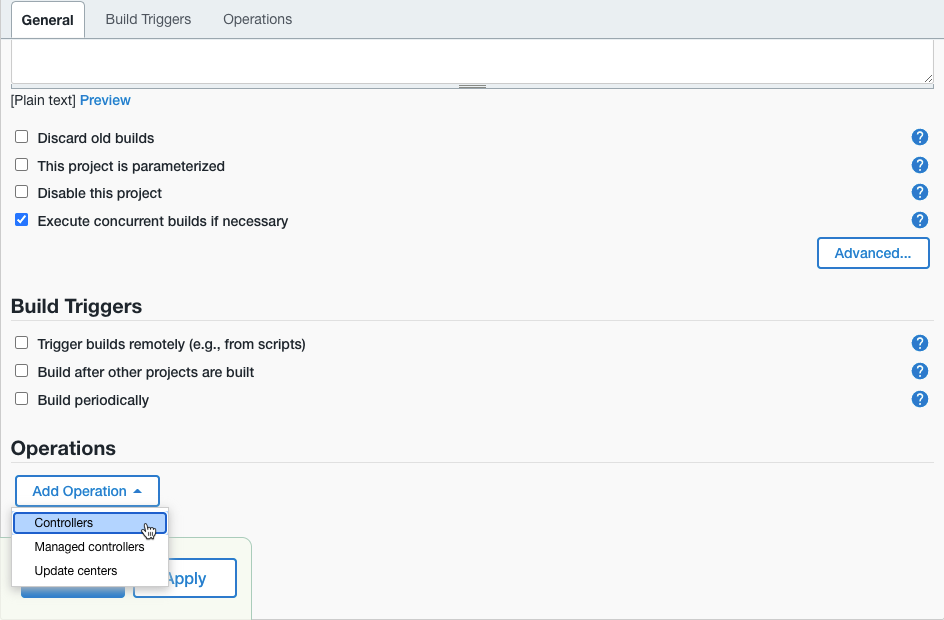

Select Add Operation > Controllers to add a new operation with the client controller target type.

Figure 1. Create a new cluster operation

-

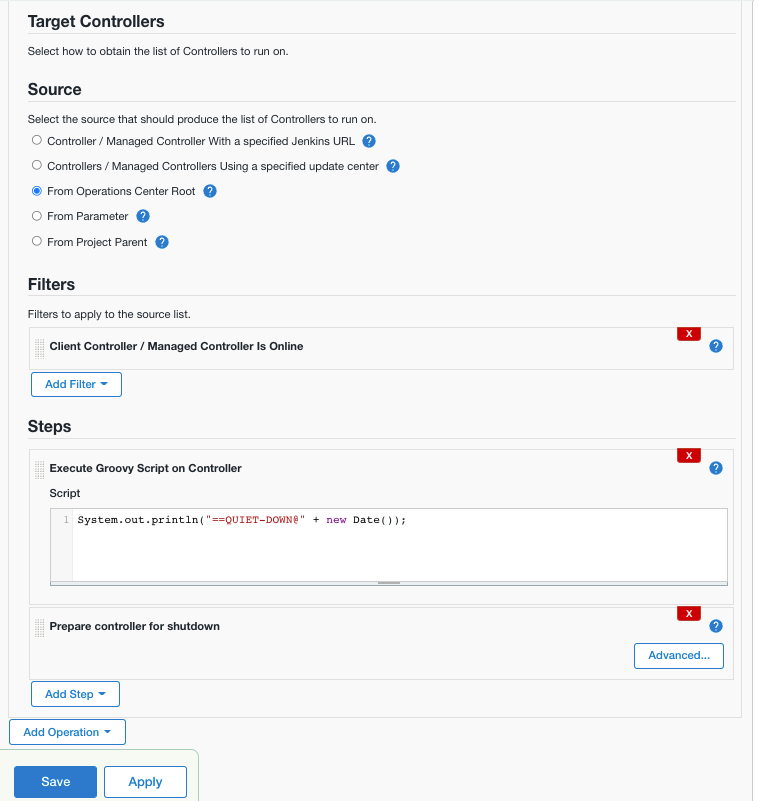

Under Source, select From Operations Center Root.

-

Select .

This selects all client controllers in operations center that are online when the operation runs.

You have now specified which items the operation runs on. Next, specify what to run on them by adding two steps.

-

Select and enter the following code:

System.out.println("==QUIET-DOWN@" + new Date());

This prints the text and the current date and time to the log on the CloudBees CI controller, which can be useful for auditing later.

-

Select .

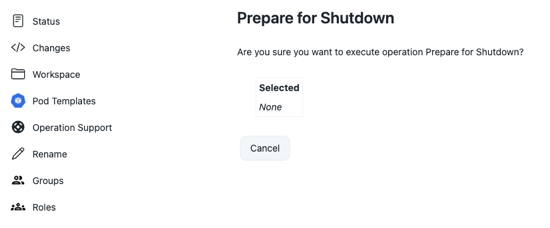

This step has a similar effect to selecting Prepare for Shutdown on the Manage Jenkins page of each controller.

Your configuration should look something like the following when you’re done:

Figure 2. Create a new cluster operation

-

Select Save.

When started, this cluster operation runs on the client controllers in parallel, and the standard notice "Jenkins is going to shut down" is displayed on each client controller.

Control how to fail

Sometimes you may want to change how a failure affects the rest of the operation flow. On the configuration screen for the cluster operation job, each Operation section has an Advanced button. Selecting it reveals advanced options such as the maximum number of controllers to run in parallel, as well as Failure Mode and Fail As.

-

Fail As: Sets the CloudBees CI build result that the run receives when it fails. Options include Failure, Abort, Unstable, and so on.

-

Failure Mode: This option controls what happens to the rest of the run if an operation step on an item fails.

-

Fail Immediately: Aborts anything in progress and fails immediately.

-

Fail Tidy: Waits for anything currently running to finish, cancels all operations still in the queue, and then fails.

-

Fail At The End: Lets everything run to completion, and then fails.
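The difference between the three failure modes is easiest to see with one item that fails while another is still running and a third is still queued. The following Java sketch is a hypothetical, single-threaded simulation, not how CloudBees CI implements these options: time advances in discrete ticks, and the `FailureModeSim` class, its enums, and the `failAs` parameter are illustrative names. It assumes Java 16 or later.

```java
import java.util.ArrayDeque;
import java.util.ArrayList;
import java.util.Deque;
import java.util.LinkedHashMap;
import java.util.List;
import java.util.Map;

// Hypothetical simulation of Failure Mode and Fail As; NOT the CloudBees CI scheduler.
final class FailureModeSim {

    enum Mode { FAIL_IMMEDIATELY, FAIL_TIDY, FAIL_AT_THE_END }

    enum Outcome { SUCCEEDED, FAILED, ABORTED, CANCELLED }

    enum BuildResult { SUCCESS, UNSTABLE, FAILURE, ABORTED }

    // An item whose steps take `ticks` time units and, if `fails`, fail when they finish.
    record Item(String name, int ticks, boolean fails) {}

    record RunResult(Map<String, Outcome> outcomes, BuildResult result) {}

    static RunResult run(List<Item> items, int maxParallel, Mode mode, BuildResult failAs) {
        if (maxParallel < 1) {
            throw new IllegalArgumentException("maxParallel must be at least 1");
        }
        Map<String, Outcome> outcomes = new LinkedHashMap<>();
        Deque<Item> queue = new ArrayDeque<>(items);
        Map<Item, Integer> running = new LinkedHashMap<>(); // item -> ticks remaining
        boolean failed = false;

        while (!queue.isEmpty() || !running.isEmpty()) {
            boolean stopping = failed && mode != Mode.FAIL_AT_THE_END;
            if (stopping) {
                // Fail Immediately and Fail Tidy both cancel items that never started.
                for (Item queued : queue) {
                    outcomes.put(queued.name(), Outcome.CANCELLED);
                }
                queue.clear();
                if (mode == Mode.FAIL_IMMEDIATELY) {
                    // Fail Immediately also aborts whatever is in progress.
                    for (Item inProgress : running.keySet()) {
                        outcomes.put(inProgress.name(), Outcome.ABORTED);
                    }
                    running.clear();
                    break;
                }
            } else {
                // Fill free parallel slots from the queue.
                while (running.size() < maxParallel && !queue.isEmpty()) {
                    Item next = queue.poll();
                    running.put(next, next.ticks());
                }
            }

            // Advance one tick and record items whose steps have finished.
            List<Item> finished = new ArrayList<>();
            for (Map.Entry<Item, Integer> entry : running.entrySet()) {
                int left = entry.getValue() - 1;
                if (left <= 0) {
                    finished.add(entry.getKey());
                } else {
                    entry.setValue(left);
                }
            }
            for (Item done : finished) {
                running.remove(done);
                outcomes.put(done.name(), done.fails() ? Outcome.FAILED : Outcome.SUCCEEDED);
                failed |= done.fails();
            }
        }
        // Fail As: a failed run gets the configured result instead of SUCCESS.
        return new RunResult(outcomes, failed ? failAs : BuildResult.SUCCESS);
    }

    public static void main(String[] args) {
        List<Item> items = List.of(
                new Item("a", 1, true),   // fails after one tick
                new Item("b", 3, false),  // still running when "a" fails
                new Item("c", 1, false)); // still queued when "a" fails
        for (Mode mode : Mode.values()) {
            System.out.println(mode + ": " + run(items, 2, mode, BuildResult.UNSTABLE));
        }
    }
}
```

With two parallel slots, item `a` fails after one tick while `b` is still running and `c` is still queued. Fail Immediately aborts `b` and cancels `c`; Fail Tidy lets `b` finish and cancels `c`; Fail At The End runs both `b` and `c` to completion. In every case the run's result is the `failAs` value.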


Ad-hoc manual cluster operations

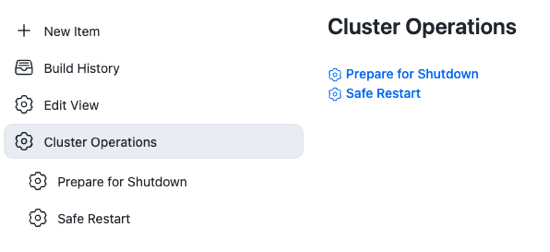

Operations center includes a few preset cluster operations that you can run on selected client controllers directly from the side panel of a list view or a client controller page.

To locate the list of preset cluster operations:

-

Select in the upper-right corner to navigate to the Manage Jenkins page.

-

Select Cluster Operations.

Run from a list view

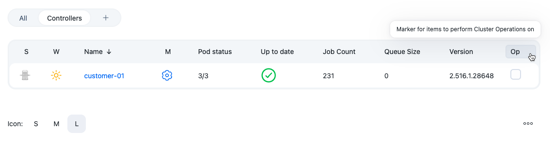

Cluster operations provides a list view column type called ClusterOp Item Selector, which appears by default as the rightmost column on new list views and on the All view.

For list views that existed before cluster operations was installed, you need to add the column by editing the view. As with all list views (except the All view), you can customize the columns and change the order in which they are displayed.

Mark the client controllers that you want to run the operation on by selecting their checkboxes in the Op column. The selection on each view is remembered for the rest of your session.

Select Cluster Operations in the left pane to open a context menu listing the operations available for the view.

If you are an administrator, you can open the project page of a preset operation by selecting the gear icon next to the operation name.

Selecting the operation’s name, either from the context menu or from the separate list page, takes you to the run page, where you can specify the operation’s parameters and run it. The run page also lists the selected client controllers; those that are not applicable for this run are shown struck through, with a short explanation of why. A client controller can be unavailable for a particular run either because it is the wrong type for the operation or because a configured filter removed it from the resource pool. For example, some operations are designed to run only on online controllers, so any offline controllers are filtered out.

| The list of controllers, and whether the operation will run on each of them, is only a preliminary display. The list is recalculated when the operation actually runs, because the status of a client controller (online or offline) might change between the display and the run. |
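The following Java sketch illustrates how a preliminary list could be computed, with a reason for every item that is not applicable, and why it has to be computed again at run time. It is a hypothetical model: the `ApplicabilityPreview` class, the type strings, and the reason texts are illustrative and are not the wording CloudBees CI uses. It assumes Java 16 or later.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.function.Predicate;

// Hypothetical sketch of explaining why a selected item is or is not applicable to a run.
// Type names and reason strings are illustrative, not CloudBees CI output.
final class ApplicabilityPreview {

    record Controller(String name, String type, boolean online) {}

    // A filter with a readable name, so the explanation can say which filter removed an item.
    record NamedFilter(String name, Predicate<Controller> accepts) {}

    // applicable == false corresponds to a struck-through name; reason explains why.
    record Entry(String name, boolean applicable, String reason) {}

    static List<Entry> evaluate(List<Controller> selected, String requiredType, List<NamedFilter> filters) {
        List<Entry> entries = new ArrayList<>();
        for (Controller c : selected) {
            // First check: is the item the right type for this operation?
            if (!c.type().equals(requiredType)) {
                entries.add(new Entry(c.name(), false, "wrong type for this operation"));
                continue;
            }
            // Second check: does every configured filter accept it?
            String rejectedBy = null;
            for (NamedFilter filter : filters) {
                if (!filter.accepts().test(c)) {
                    rejectedBy = filter.name();
                    break;
                }
            }
            entries.add(rejectedBy == null
                    ? new Entry(c.name(), true, "")
                    : new Entry(c.name(), false, "removed by filter: " + rejectedBy));
        }
        return entries;
    }

    public static void main(String[] args) {
        List<NamedFilter> filters = List.of(new NamedFilter("online only", Controller::online));

        // Preliminary display: controller-b happens to be offline.
        List<Controller> atDisplay = List.of(
                new Controller("controller-a", "client-controller", true),
                new Controller("controller-b", "client-controller", false),
                new Controller("update-center-1", "update-center", true));
        evaluate(atDisplay, "client-controller", filters).forEach(System.out::println);

        // When the operation actually runs, the list is evaluated again with current statuses.
        List<Controller> atRun = List.of(
                new Controller("controller-a", "client-controller", false),
                new Controller("controller-b", "client-controller", true),
                new Controller("update-center-1", "update-center", true));
        evaluate(atRun, "client-controller", filters).forEach(System.out::println);
    }
}
```

In the second evaluation, `controller-a` is removed by the filter and `controller-b` becomes applicable, which shows how a status change between the display and the run can change which controllers the operation actually runs on.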

Run from a client controller manage page

Running an operation from a client controller is similar to the process described in Run from a list view. The only difference is that, because you are operating on a single client controller, you do not need to select anything in a list view. To access the operations:

-

On the operations center dashboard, select the gear icon next to the controller.

Operation run results and logs

As with any other CloudBees CI job, each run of a cluster operation job is accessible from the left panel of the project page. The run page shows the operations that were executed, the items (client controllers or update centers) they ran on, and each result as a colored ball (success/failure), along with a link to the log for each item.

Console Output in the left panel shows the overall console log for all operations. To see the console output of an operation on an individual client controller or update center, use the log link next to that item on the run page, or the corresponding link in the overall console output.