HA installation instructions overview

You can install High Availability on any Kubernetes cluster. The following table links to the installation instructions and the pre-installation requirements for each platform.

| Platform | General install guide | Pre-installation requirements |
|---|---|---|
| Azure Kubernetes Service (AKS) | | |
| Amazon Elastic Kubernetes Service (EKS) | | |
| Google Kubernetes Engine (GKE) | | |
| Red Hat OpenShift (OpenShift) | | |
| Kubernetes | | |

Storage requirements

Because High Availability (HA) requires real-time shared storage between replicas, it does not support multi-region replication. HA also has specific storage requirements. Review the pre-installation requirements for your platform.

Managed controller with custom YAML definitions

Managed controllers require

HA in CloudBees CI on modern cloud platforms

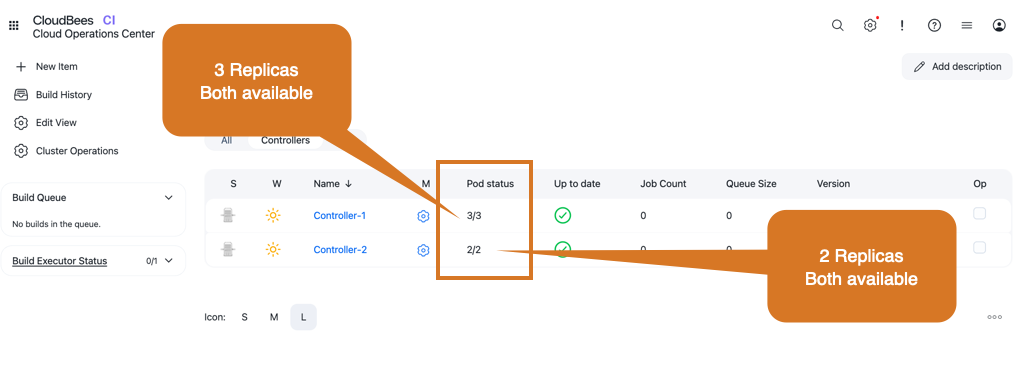

When a managed controller is running in HA mode, the Pod status column appears on the operations center dashboard. For each managed controller, this column displays how many controller replicas exist and how many of them are available.

Start a managed controller in HA mode using the UI

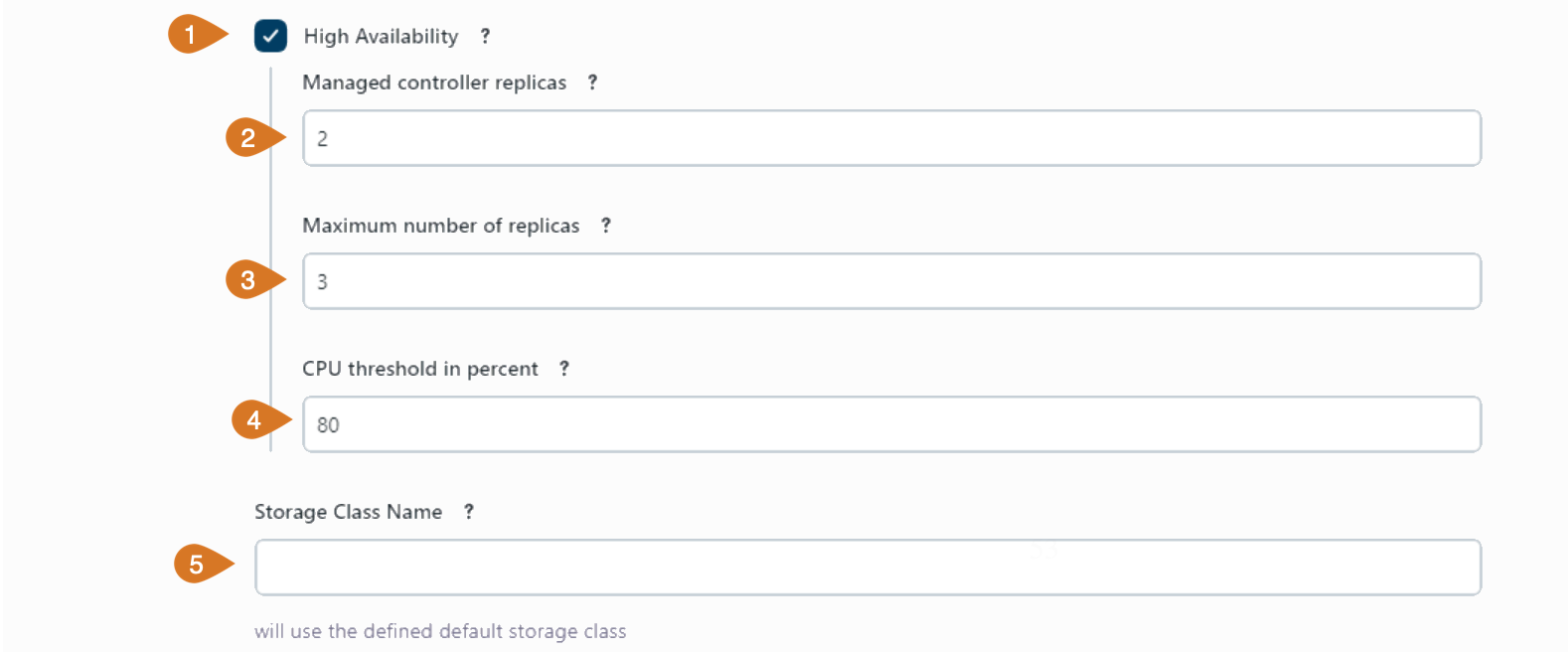

Admin users can create managed controllers that run in HA mode from the UI. Create or configure the managed controller as usual, then set the following options in the configuration screen (shown in the example image):

- Enable the High Availability mode.
- Enter the number of desired replicas in the Managed controller replicas field.
- Enter the Maximum number of replicas if you want auto-scaling. The Metrics server is required in your cluster if you modify this value to enable auto-scaling. Set it to zero to disable auto-scaling.
- Set the CPU threshold in percent to trigger upscaling or downscaling if auto-scaling has been configured.
- Select the Storage Class Name for the replicas' shared file system. If it is empty, the default storage class defined in your Kubernetes cluster is used.
- Select Use new health check to provide a more reliable failure detection mechanism for managed controllers running in HA mode. When enabled, this option performs additional checks on elements of the HA cluster, such as the Hazelcast nodes and the controller replicas.

Modifying the number of replicas or the container image of a running managed controller updates the managed controller deployment automatically, and it does not require a restart from the operations center.

Team controllers do not support HA, but team controllers can be migrated to managed controllers by following Migrate managed controllers and team controllers.

Custom PodDisruptionBudget for HA managed controllers

The default configuration for managed controllers running in HA mode defines a PodDisruptionBudget with the minAvailable property set to 1.

This means that at least one replica must always be available, which keeps the HA controller reachable even during voluntary disruptions, such as node maintenance or upgrades.

CloudBees CI administrators can customize the values for the PodDisruptionBudget for managed controllers running in HA mode by providing a custom YAML definition in the managed controller configuration screen.

The following example shows a custom PodDisruptionBudget YAML definition that sets the minAvailable property to 2:

```yaml
apiVersion: policy/v1
kind: PodDisruptionBudget
metadata:
  name: ${name}
spec:
  minAvailable: 2 # (1)
```

(1) New value for the minAvailable property. The default value is 1.

HA and caches in CloudBees CI on modern cloud platforms

Controllers running in HA mode automatically configure cache-related properties to use per-replica temporary folder locations.

Folders containing these caches are located outside the shared $JENKINS_HOME folder to avoid conflicts.

These folders are used by:

- The GitHub branch source, to minimize REST API calls.
- Generic Git repositories to store the repository cache.
- Both Multibranch Pipelines and standalone Pipelines using the Pipeline script from SCM option, when using plain Git repository references, in certain configurations.
- Pipeline Groovy libraries when not used in clone mode (with or without caching mode enabled), to maintain checkouts of library SCM repositories.
- CloudBees Pluggable Storage, to download archived builds from the cloud object storage provider. For more information, refer to CloudBees Pluggable Storage temporary folders.

In CloudBees CI on modern cloud platforms, these temporary folders are located within each replica's container under the /tmp directory.

If /tmp fills up with caches, it may be possible to request a larger volume, depending on the platform where CloudBees CI is installed.

As an alternative, it is possible to use a generic ephemeral volume of any desired size with any desired storage class (only read-write-once needs to be supported), as you can see in the following example:

```yaml
containers:
  - name: jenkins
    volumeMounts:
      - name: tmp
        mountPath: /tmp
volumes:
  - name: tmp
    ephemeral:
      volumeClaimTemplate:
        spec:
          accessModes:
            - ReadWriteOnce
          storageClassName: <storage-class-name> # (1)
          resources:
            requests:
              storage: 200Gi
```

(1) Use the desired storage class name for the ephemeral volume.

Kubernetes does not recognize this volume as ephemeral storage, so the pod will not be terminated even if the volume runs out of free space.

Single replica HA cluster

When creating a managed controller in HA mode, you can set the number of replicas to one. A single replica HA cluster cannot distribute the workload among the different replicas, but for CloudBees CI on modern cloud platforms, this configuration still provides the following HA benefits:

- Rolling restarts.
- Rolling upgrades.

In a single-replica HA cluster, if the controller fails, the controller is not available during the recovery process.

Horizontal auto-scaling

Horizontal auto-scaling is available for managed controllers running in HA mode. It uses Kubernetes Horizontal Pod Autoscaling.

The Horizontal Pod Autoscaling (HPA) controller monitors resource utilization and adjusts the scale of its target to match your configuration settings. For example, if utilization exceeds your defined threshold, the autoscaler increases the number of replicas. You can learn how to set up auto-scaling for your managed controller here.
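To make the settings concrete, the following is a minimal sketch of a standard Kubernetes HorizontalPodAutoscaler that scales a Deployment on average CPU utilization. It is not the manifest that CloudBees CI generates. The resource name `my-controller`, the replica counts, and the 70% threshold are hypothetical values chosen for illustration.

```yaml
# Illustrative sketch only. CloudBees CI manages autoscaling for the
# managed controller; every name and number below is an assumption.
apiVersion: autoscaling/v2
kind: HorizontalPodAutoscaler
metadata:
  name: my-controller              # hypothetical name
spec:
  scaleTargetRef:
    apiVersion: apps/v1
    kind: Deployment
    name: my-controller            # hypothetical target Deployment
  minReplicas: 2                   # comparable to the desired number of replicas
  maxReplicas: 4                   # comparable to the Maximum number of replicas
  metrics:
    - type: Resource
      resource:
        name: cpu
        target:
          type: Utilization
          averageUtilization: 70   # comparable to the CPU threshold in percent
```

CPU utilization targets of this kind rely on the resource metrics API, which is why a Metrics server must be running in the cluster.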

Kubernetes Horizontal Pod Autoscaling (HPA) is part of the Helm charts provided by CloudBees. However, to use auto-scaling with CloudBees CI High Availability (HA), you need to install the required Metrics server in your cluster. CloudBees CI's High Availability (HA) has been tested using

CloudBees recommends performance testing to determine appropriate thresholds that do not affect response time.
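As a rough guide when choosing a threshold, the Kubernetes documentation describes the core Horizontal Pod Autoscaler calculation as the ratio below. The worked numbers (2 replicas, 90% observed average CPU, 60% target) are hypothetical and only illustrate the arithmetic. The result is always bounded by the configured minimum and maximum number of replicas.

$$
\text{desiredReplicas} = \left\lceil \text{currentReplicas} \times \frac{\text{currentMetricValue}}{\text{desiredMetricValue}} \right\rceil
$$

$$
\left\lceil 2 \times \frac{90}{60} \right\rceil = \lceil 3 \rceil = 3
$$

With these assumed numbers, one additional replica is requested. A lower threshold triggers upscaling sooner for the same load, which is why the threshold is best validated with performance testing.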

For upscale events:

- No rebalance of builds is performed.
- Builds continue to run on existing replicas.
- New builds are dispatched between replicas. Refer to Build scheduling and explicit load balancing for more information.
- Due to sticky session usage, any existing session remains on the same replica.
- New sessions are distributed randomly between replicas.

For downscale events:

- Builds from removed replicas are adopted by the remaining replicas. Refer to Build scheduling and explicit load balancing for more information.
- Web sessions associated with a removed replica are redirected to a remaining replica.

Upscaling means that there are more builds to serve, and that they consume more resources. The scheduling of new controller replicas is blocked when the cluster reaches capacity.

To ensure that you have enough resources, CloudBees recommends the following:

- Use the Kubernetes cluster autoscaler to ensure that the cluster has enough resources to accommodate the new replicas.
- Consider the use of dedicated node pools for controllers.
- Assign a lower priority class to agent pods, so that controller pods are scheduled first. This allows controller pods to evict agent pods, if necessary.
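The priority class recommendation can be sketched with standard Kubernetes PriorityClass objects. This is a minimal example under stated assumptions: the class names `ci-controller` and `ci-agent`, the priority values, the pod name, and the `<agent-image>` placeholder are all hypothetical. How the class is attached to controller and agent pods depends on your own pod definitions.

```yaml
# Hypothetical higher-priority class intended for controller pods.
apiVersion: scheduling.k8s.io/v1
kind: PriorityClass
metadata:
  name: ci-controller            # hypothetical name
value: 1000                      # higher value means higher priority
globalDefault: false
description: "Controller pods; scheduled ahead of agent pods."
---
# Hypothetical lower-priority class intended for agent pods.
apiVersion: scheduling.k8s.io/v1
kind: PriorityClass
metadata:
  name: ci-agent                 # hypothetical name
value: 100
globalDefault: false
description: "Agent pods; may be preempted to make room for controllers."
---
# Example agent pod that opts in to the lower-priority class.
apiVersion: v1
kind: Pod
metadata:
  name: example-agent            # hypothetical name
spec:
  priorityClassName: ci-agent
  containers:
    - name: jnlp
      image: <agent-image>       # placeholder, replace with your agent image
```

When the cluster is at capacity, the scheduler can preempt pods in the lower class to place a pending pod from the higher class, which matches the eviction behavior described in the last recommendation.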