
All the Pipeline examples in this section assume that an agent with the tools required to complete the build has been selected. If you plan to test them on ephemeral agents (Kubernetes-based or Docker agents), review Select an agent for your Pipeline job.

While Jenkins has always allowed rudimentary chaining of Freestyle Jobs to perform sequential tasks, more complex behaviors have required additional plugins such as Copy Artifact, Parameterized Trigger, and Promoted Builds. Pipeline makes this concept a first-class citizen in Jenkins.

Building on the core Jenkins value of extensibility, Pipeline is also extensible both by users with Pipeline Shared Libraries and by plugin developers.

Create stages

Intra-organizational (or conceptual) boundaries are captured through a primitive called "stages." A deployment pipeline consists of various stages, where each subsequent stage builds on the previous one. The idea is to spend as few resources as possible early in the pipeline and find obvious issues, rather than spend a lot of computing resources for something that is ultimately discovered to be broken.

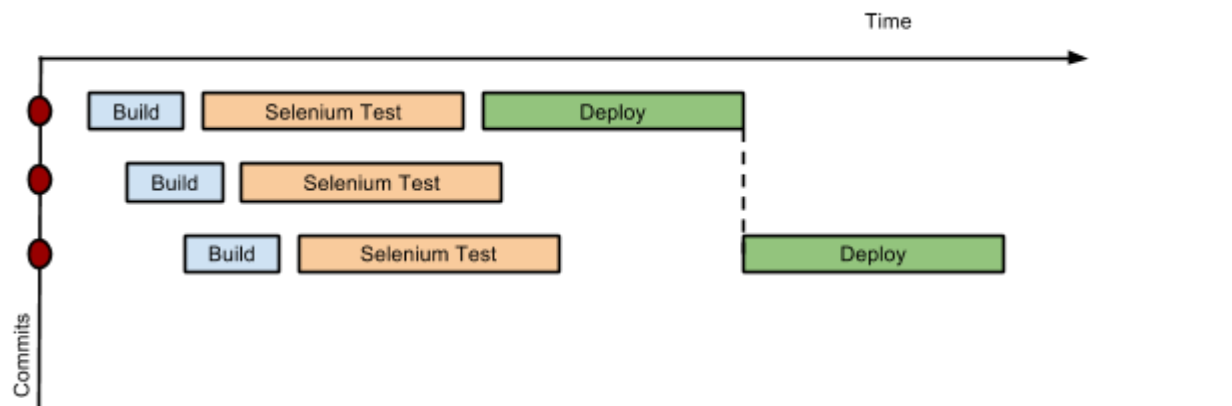

Consider a simple pipeline with three stages. A naive implementation of this pipeline can sequentially trigger each stage on every commit, so the deployment step is triggered immediately after the Selenium test steps are complete. However, this would mean that the deployment from commit two overwrites the deployment from commit one while it is still in progress. The right approach is for commits two and three to wait for the deployment from commit one to complete, consolidate all the changes that have happened since commit one, and then trigger the deployment. If there is an issue, developers can easily figure out whether it was introduced in commit two or commit three.
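To make the naive case concrete, the following is a minimal sketch of a three-stage scripted Pipeline in which every commit runs all stages with no coordination between builds. The stage names, the Maven commands, and the copy target are illustrative assumptions rather than part of the original example, and `checkout scm` assumes the job loads its Pipeline script from source control.

```groovy
// Naive sketch: each build runs every stage, so two builds can reach
// the deployment stage at the same time and overwrite each other.
node {
    stage('Build') {
        checkout scm                      // assumes a Pipeline-from-SCM or multibranch job
        sh 'mvn -B clean package'         // hypothetical build command
    }
    stage('Selenium tests') {
        sh 'mvn -B verify'                // hypothetical test command
    }
    stage('Deploy') {
        // Hypothetical deployment: copy the WAR into a web application directory.
        sh 'cp target/x.war /tmp/webapps/production.war'
    }
}
```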

Jenkins Pipeline provides this functionality by enhancing the stage primitive. For example, a stage can be given a concurrency level of one to indicate that, at any point, only one build should be running through the stage. Deployments then never overlap, yet each one starts as soon as the stage is free.

    stage('Production') {
        node {
            unarchive mapping: ['target/x.war' : 'x.war']
            deploy 'target/x.war', 'production'
            echo 'Deployed to http://localhost:8888/production/'
        }
    }
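In current Jenkins releases, limiting a stage to one build at a time is usually expressed with the `lock` and `milestone` steps rather than a concurrency setting on the stage itself. The following is a minimal sketch of that approach, assuming the Lockable Resources and Pipeline: Milestone Step plugins are installed. The lock name `production-deploy` is a hypothetical label, and `deploy` is the custom Groovy function used throughout this section.

```groovy
// Sketch only: requires the Lockable Resources and Pipeline: Milestone Step plugins.
stage('Production') {
    // Only one build may hold the (hypothetical) 'production-deploy' lock.
    // inversePrecedence lets the newest waiting build acquire it first.
    lock(resource: 'production-deploy', inversePrecedence: true) {
        // Once a newer build passes this milestone, older builds that
        // have not yet reached it are aborted instead of deploying.
        milestone()
        node {
            unarchive mapping: ['target/x.war' : 'x.war']
            deploy 'target/x.war', 'production'
            echo 'Deployed to http://localhost:8888/production/'
        }
    }
}
```

With this arrangement, builds for commits two and three wait while commit one deploys. When the lock is released, the build for commit three proceeds and the build for commit two is aborted at the milestone, so the next deployment carries the consolidated changes.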

Implement gates and approvals

Continuous delivery means keeping binaries in a release-ready state, whereas continuous deployment means pushing those binaries to production automatically. Although continuous deployment is an appealing goal, in practice many organizations still want a human to give the final approval before anything is pushed to production. Pipeline captures this through the "input" step, which can wait indefinitely for a human to intervene.

input message: "Does http://localhost:8888/staging/ look good?"
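Because `input` can wait indefinitely, it is often wrapped in a `timeout` and run outside any `node` block so that no executor is held while the build waits. The following is a minimal sketch of such a gate; the one-day limit, the button label, and the `release-managers` submitter are illustrative assumptions.

```groovy
// Sketch of an approval gate placed between staging and production.
stage('Approval') {
    // Kept outside node {} so the build does not occupy an executor while paused.
    timeout(time: 1, unit: 'DAYS') {      // assumed limit: abort if nobody responds in a day
        input(
            message: 'Does http://localhost:8888/staging/ look good?',
            ok: 'Deploy',                 // label shown on the approval button
            submitter: 'release-managers' // hypothetical user or group allowed to approve
        )
    }
}
```

If the timeout expires or someone aborts the prompt, the build stops at this stage and the later deployment steps do not run.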

Customize deployment of artifacts to staging/production

Deployment of binaries is the last mile in a pipeline. The variety of servers used within an organization and available on the market makes it difficult to define a uniform deployment step. Today, this problem is typically handled by third-party deployer products, each focused on deploying a particular stack to a data center. Teams can also write their own extensions to hook into the Pipeline job type and make deployment easier.

Meanwhile, job creators can write a plain Groovy function to define custom steps that deploy artifacts to (or undeploy them from) production.

    def deploy(war, id) {
        sh "cp ${war} /tmp/webapps/${id}.war"
    }
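As a sketch of the undeploy counterpart mentioned above, the following pairs `deploy` with a hypothetical `undeploy` function and calls both from a `node` block. The `undeploy` name, its `rm -f` implementation, and the `staging` id are assumptions made for illustration; the target directory follows the `deploy` example.

```groovy
// Copies a WAR file into the web application directory under the given id.
def deploy(war, id) {
    sh "cp ${war} /tmp/webapps/${id}.war"
}

// Hypothetical counterpart: removes the WAR previously deployed under the given id.
def undeploy(id) {
    sh "rm -f /tmp/webapps/${id}.war"
}

node {
    // Both functions call sh, so they must run on an agent with a workspace.
    deploy 'target/x.war', 'staging'
    undeploy 'staging'
}
```

Keeping these helpers as plain functions in the Pipeline script means they can be called like built-in steps without writing a plugin.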