This content applies only to CloudBees CI on modern cloud platforms.

In CloudBees CI, Pipelines produce a variety of artifacts, including Pipeline build logs and log metadata.

By default, Pipeline build logs are stored in the $JENKINS_HOME directory.

Over time, these Pipeline build logs can consume a significant amount of space and eventually lead to slower backup, restore, and disaster recovery times.

To mitigate these issues, you may have configured aggressive build retention policies to retain only the most recent builds.

However, these policies prevent you from retaining a comprehensive build history for troubleshooting and auditing purposes.

CloudBees Pluggable Storage addresses these problems by providing a scalable approach for storing Pipeline build logs in cloud object storage, allowing you to:

- Reduce controller disk usage: Minimize the size of your $JENKINS_HOME directory to speed up backup, restore, and disaster recovery operations.

- Improve operability and maintenance: Centralize management of Pipeline build logs for all managed controllers through a unified cloud object storage provider. Pipeline build logs stored with CloudBees Pluggable Storage can be accessed in all contexts within CloudBees CI, including the default Console Output page, CloudBees Pipeline Explorer, the Pipeline Steps view, other Pipeline visualization plugins, and any programmatic log access via supported API calls.

- Improve compliance: Retain a comprehensive build history and leverage audit capabilities to meet compliance requirements.

CloudBees Pluggable Storage architecture

CloudBees Pluggable Storage is a standalone service that runs in a Kubernetes cluster. You only need to configure cloud credentials once for the CloudBees Pluggable Storage service in the operations center namespace, and you do not need to configure cloud credentials for each individual managed controller.

Once you enable the CloudBees Pluggable Storage service in the CloudBees CI Helm chart, the operations center passes a system property to each managed controller that is configured to use CloudBees Pluggable Storage. This system property specifies the URL of the CloudBees Pluggable Storage service. Communication between managed controllers and the CloudBees Pluggable Storage service is handled automatically and is internal to the cluster. The CloudBees Pluggable Storage service creates presigned URLs, which managed controllers use to upload and download Pipeline build logs to and from cloud object storage.

The following diagrams show the architecture of CloudBees Pluggable Storage for single and multiple cluster deployments. For more information, refer to Deploy CloudBees CI across multiple Kubernetes namespaces and clusters.

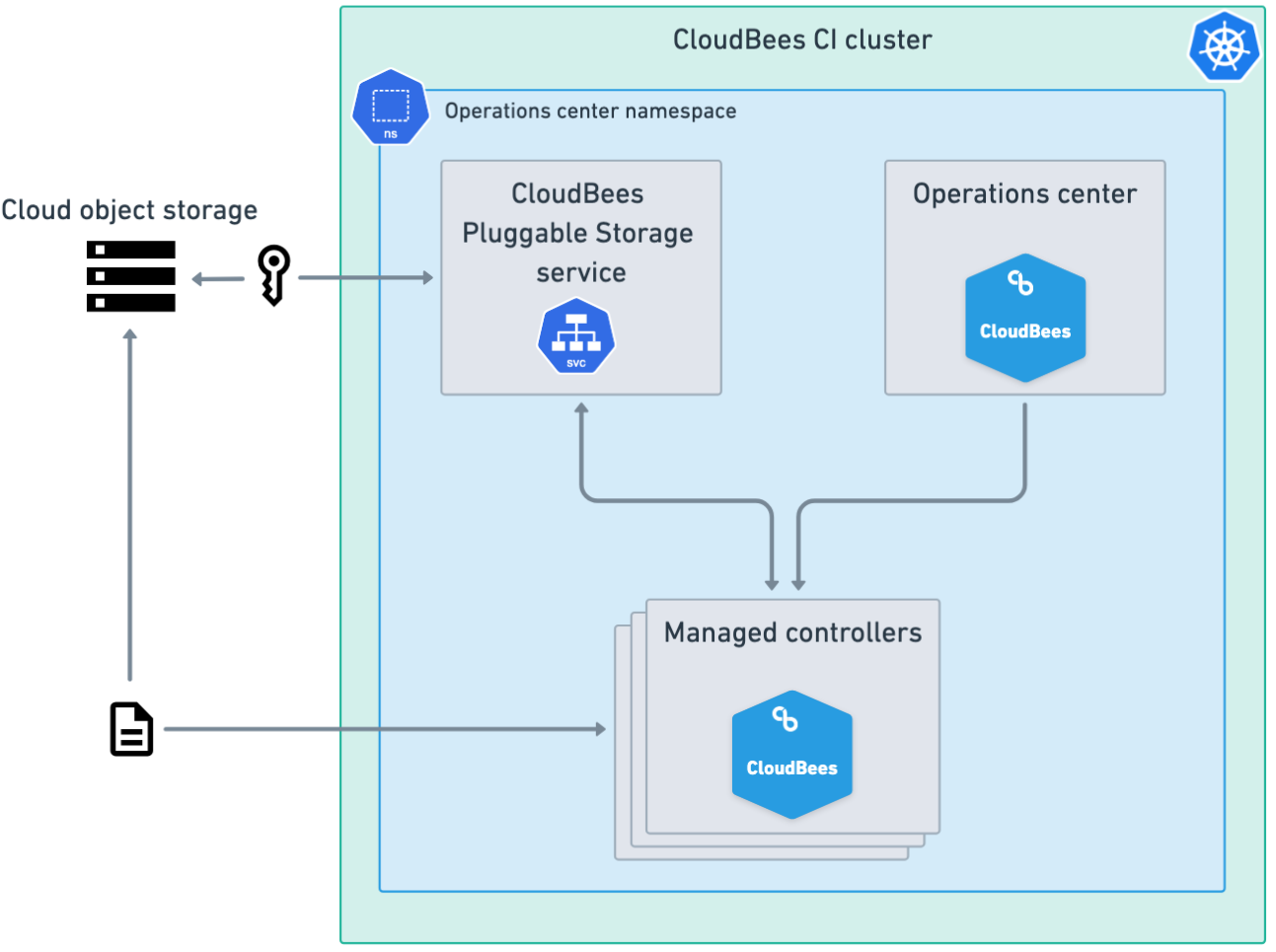

Single cluster with a single namespace

For a single cluster with a single namespace, the operations center and all managed controllers are deployed in the same Kubernetes cluster in the operations center namespace.

In this configuration, enable the CloudBees Pluggable Storage service in the cluster’s values.yaml file for the operations center namespace.

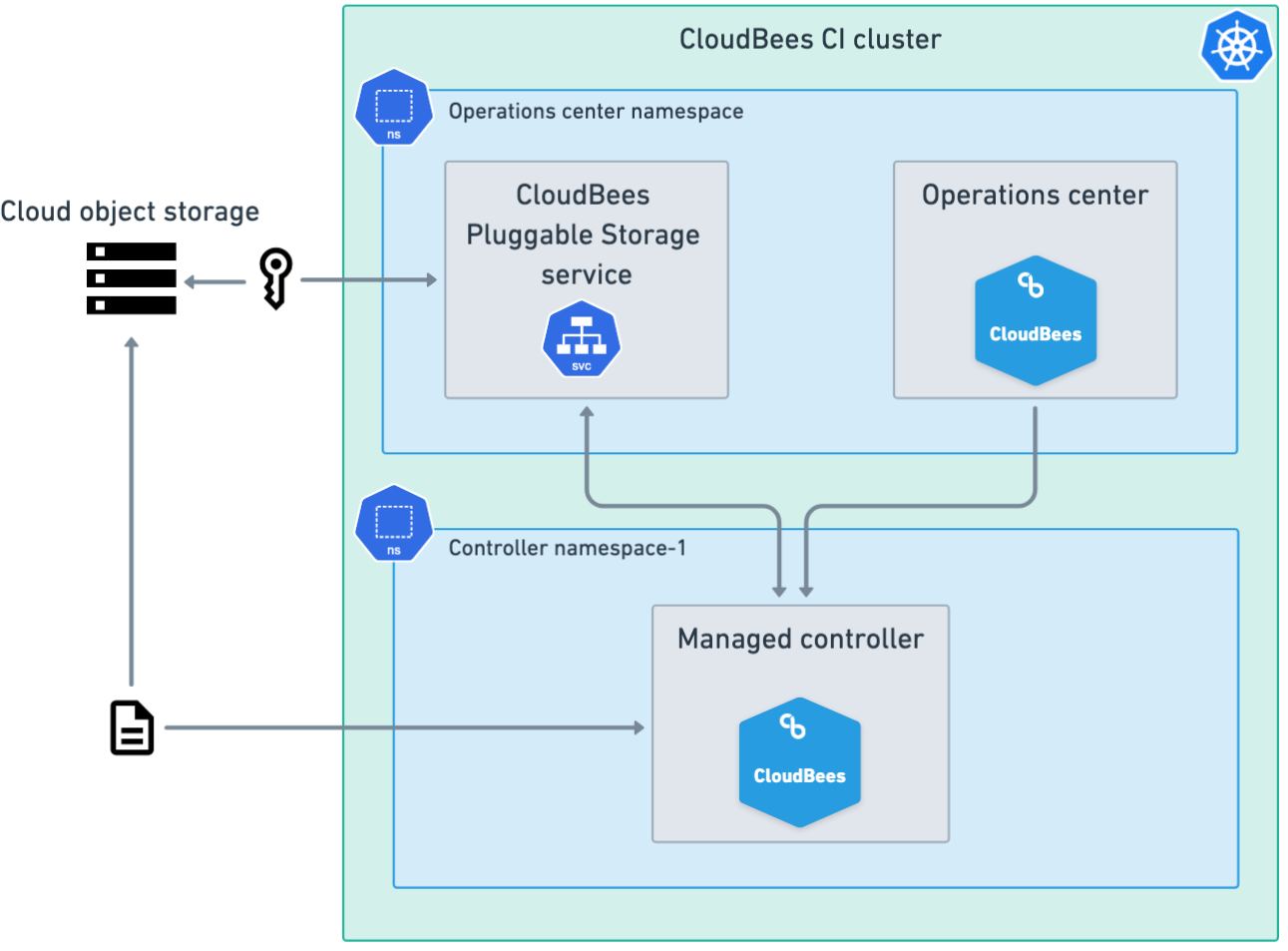

Single cluster with multiple namespaces

For a single cluster with multiple namespaces, the operations center and all managed controllers are deployed in the same Kubernetes cluster, but the operations center and each managed controller are in separate namespaces.

In this configuration, enable the CloudBees Pluggable Storage service only in the values.yaml file for the operations center namespace, leaving the CloudBees Pluggable Storage service disabled in the other namespaces.

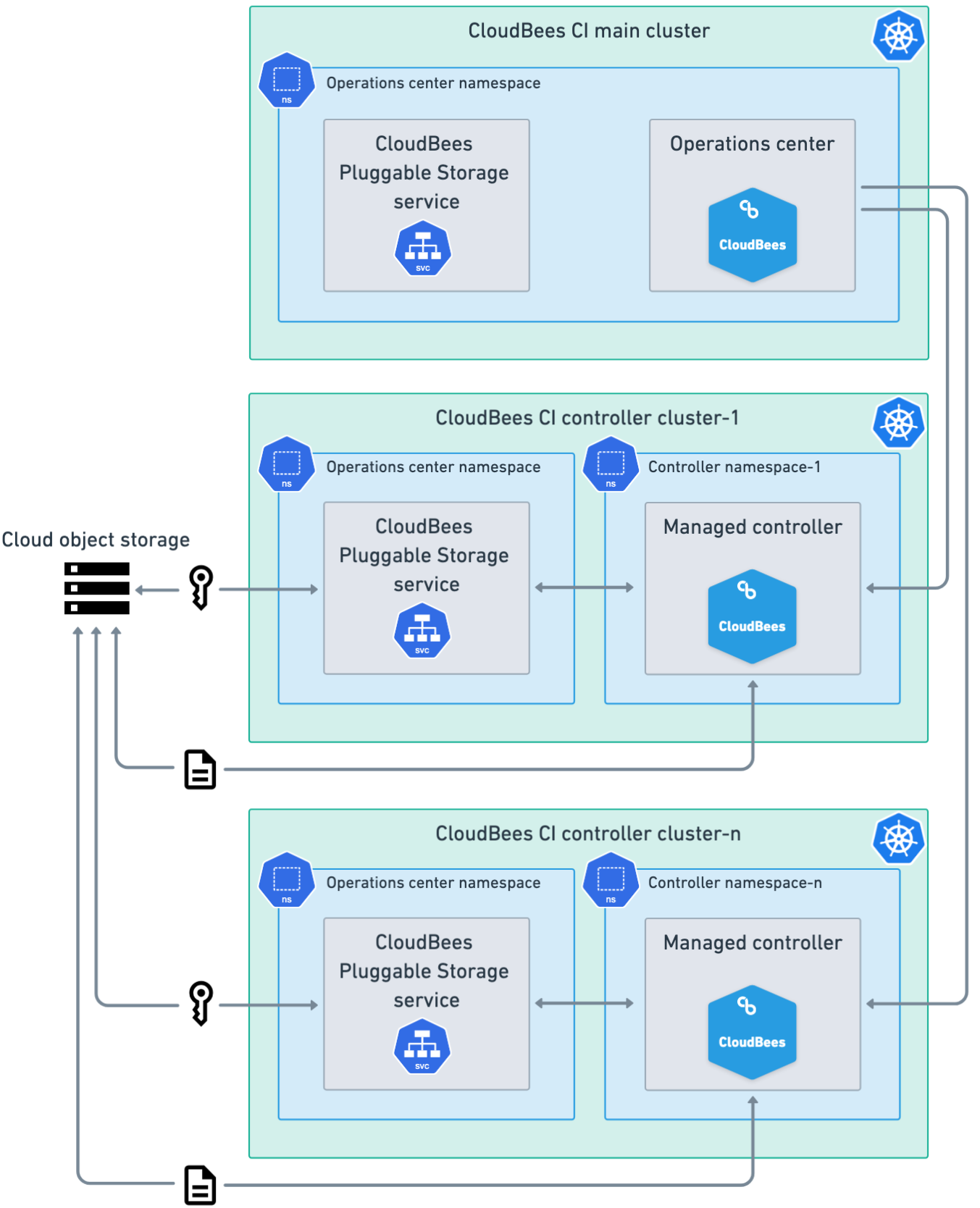

Multiple clusters

For multiple clusters, the operations center and managed controllers are deployed in different Kubernetes clusters.

In this configuration, enable the CloudBees Pluggable Storage service in each cluster’s values.yaml file for the operations center namespace and configure authentication for the cloud object storage provider in each cluster.

| For multiple clusters, you must repeat the configuration steps for each cluster. |

Configure CloudBees Pluggable Storage to archive Pipeline build logs

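You must complete the following steps to configure CloudBees Pluggable Storage to archive Pipeline build logs:

- (Optional) Create a volume for the /tmp folder

- Create a bucket or container and configure the managed Kubernetes service

- Enable the CloudBees Pluggable Storage service

- Enable CloudBees Pluggable Storage on managed controllers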

| Cross-controller Move/Copy/Promote operations are not supported. |

(Optional) Create a volume for the /tmp folder

CloudBees Pluggable Storage uses temporary folders to download archived builds from the cloud object storage provider. The downloaded files are stored in a temporary folder while they are being used, and deleted after 30 minutes if not accessed. All cached files in the temporary folder are also deleted when the managed controller is restarted.

These temporary folders are located within each controller’s container under the /tmp directory, which utilizes Kubernetes node storage by default.

In HA/HS configurations, each controller has one or more replicas, and each replica’s container has its own /tmp directory.

If you have a small node storage allocation or tend to have large build logs, you can create a dedicated volume for the /tmp folder to avoid running out of disk space.

If /tmp fills up with cached files, you may be able to request a larger volume, depending on the platform where CloudBees CI is installed.

As an alternative, it is possible to use a generic ephemeral volume of any desired size with any desired storage class (only ReadWriteOnce needs to be supported). For example:

| 1 | Use the desired storage class name for the ephemeral volume. |

| Kubernetes does not recognize this volume as ephemeral storage, so the pod will not be terminated even if the volume runs out of free space. |
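The example that the callout above refers to is not reproduced in this text. As a rough sketch only, the following Python builds the two standard Kubernetes pod spec fragments for a generic ephemeral volume mounted at /tmp and prints them as JSON. The function name, the volume name tmp, and the sample storage class and size are illustrative assumptions. Where these fragments belong in a managed controller's configuration depends on your installation and is not shown here.

```python
import json
from typing import Dict, Tuple


def tmp_ephemeral_volume(storage_class: str, size: str) -> Tuple[Dict, Dict]:
    """Return (volume, volume_mount) fragments for a generic ephemeral /tmp volume.

    The volume entry belongs under the pod's spec.volumes and the mount entry
    under the container's volumeMounts.
    """
    volume = {
        "name": "tmp",  # hypothetical volume name, chosen for illustration
        "ephemeral": {
            "volumeClaimTemplate": {
                "spec": {
                    # Only ReadWriteOnce needs to be supported.
                    "accessModes": ["ReadWriteOnce"],
                    # Use the desired storage class name for the ephemeral volume.
                    "storageClassName": storage_class,
                    "resources": {"requests": {"storage": size}},
                }
            }
        },
    }
    volume_mount = {"name": "tmp", "mountPath": "/tmp"}
    return volume, volume_mount


if __name__ == "__main__":
    # "my-storage-class" and "10Gi" are placeholder values.
    volume, mount = tmp_ephemeral_volume("my-storage-class", "10Gi")
    print(json.dumps({"volumes": [volume], "volumeMounts": [mount]}, indent=2))
```

Because the claim is created from a template together with the pod, each replica in an HA/HS configuration would get its own volume, which matches the per-replica /tmp behavior described above.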

Create a bucket or container and configure the managed Kubernetes service

To configure the CloudBees Pluggable Storage service to communicate with your cloud object storage provider, you must first create a bucket or container for archiving Pipeline build logs and then configure your managed Kubernetes service.

The following configurations are supported:

- Amazon S3 and Amazon EKS

- Google Cloud Storage and GKE

- Microsoft Azure Blob Storage and Azure Kubernetes Service

- Amazon S3-compatible services

| To avoid increased costs, decreased performance, and potential network latency issues, CloudBees strongly recommends that you use the same cloud object storage provider that you are already using to run managed controllers. For example, if you are running managed controllers in Amazon EKS, you should use Amazon S3, and if you are running managed controllers in Google Kubernetes Engine (GKE), you should use Google Cloud Storage. |

Configure Amazon S3 and Amazon EKS

To configure CloudBees CI to store Pipeline build logs in Amazon S3, you must create the Amazon S3 bucket, and then set up an EKS Pod Identity or configure IAM roles for service accounts (IRSA) in Amazon EKS.

To configure Amazon S3 and Amazon EKS:

- In Amazon S3, create a bucket for storing Pipeline build logs, or use an existing bucket. For more information, refer to Creating a general purpose bucket.

  If you are using an existing bucket or container, set the storageObjectNamePrefix key in your values.yaml file to specify a prefix for storing Pipeline build logs. This helps avoid naming conflicts with other applications that use the same bucket or container. For more information, refer to Enable the CloudBees Pluggable Storage service.

- In Amazon EKS, you must either set up an EKS Pod Identity or configure IAM roles for service accounts.

  - When creating the IAM policy for CloudBees Pluggable Storage, the minimum required permissions are s3:GetBucketLocation, s3:ListBucket, s3:PutObject, and s3:GetObject. The following examples are minimal IAM policies providing the required permissions, with and without the optional prefix configured for the S3 bucket:

    Without optional prefix:

    | 1 | Replace my-storage-bucket with the name of your Amazon S3 bucket. |

    With optional prefix:

    | 1 | Replace my-storage-bucket with the name of your Amazon S3 bucket. |

    | 2 | Replace optional-prefix with the optional prefix that is configured using the PluggableStorageService.storageObjectNamePrefix key in your values.yaml file. For more information, refer to Cloud object storage path structure. |

  - When assigning an IAM role to a Kubernetes ServiceAccount, you must specify the CloudBees Pluggable Storage service account name that you will use in your CloudBees CI values.yaml file and the Kubernetes namespace for your cluster.

    - pluggable-storage-service is the default name of the CloudBees Pluggable Storage service account defined in the CloudBees CI Helm chart. If you need to modify the default service account name, you can update it via the rbac.pluggableStorageServiceServiceAccountName key in your values.yaml file. For more information, refer to Enable the CloudBees Pluggable Storage service.

    - For the namespace, specify the operations center namespace.

  - IRSA only: When annotating the Kubernetes ServiceAccount with the Amazon Resource Name (ARN) of the IAM role, CloudBees recommends that you add the annotations using the rbac.pluggableStorageServiceServiceAccountAnnotations key in the CloudBees CI Helm chart. For more information, refer to Enable the CloudBees Pluggable Storage service.
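The IAM policy documents referred to in the Amazon S3 and Amazon EKS steps are not reproduced in this text. As a minimal sketch, assuming the two bucket-level permissions are granted on the bucket ARN and the two object-level permissions on the objects under the optional prefix, the following Python generates a policy document with only the four permissions listed above. The function name and the split into two statements are illustrative choices, not the official CloudBees policy, so review the output against your own security requirements.

```python
import json

# Permissions listed in the steps above, grouped by the resource they act on.
BUCKET_ACTIONS = ["s3:GetBucketLocation", "s3:ListBucket"]
OBJECT_ACTIONS = ["s3:PutObject", "s3:GetObject"]


def build_policy(bucket: str, prefix: str = "") -> dict:
    """Build a minimal IAM policy for one bucket and an optional object prefix."""
    bucket_arn = f"arn:aws:s3:::{bucket}"
    # Normalize the prefix so that it always ends with a trailing slash.
    if prefix and not prefix.endswith("/"):
        prefix += "/"
    return {
        "Version": "2012-10-17",
        "Statement": [
            {"Effect": "Allow", "Action": BUCKET_ACTIONS, "Resource": bucket_arn},
            {
                "Effect": "Allow",
                "Action": OBJECT_ACTIONS,
                "Resource": f"{bucket_arn}/{prefix}*",
            },
        ],
    }


if __name__ == "__main__":
    # Replace my-storage-bucket and optional-prefix with your own values.
    print(json.dumps(build_policy("my-storage-bucket", "optional-prefix/"), indent=2))
```

Calling build_policy("my-storage-bucket") without a prefix produces the variant that covers every object in the bucket.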

Configure Google Cloud Storage and GKE

To configure CloudBees CI to store Pipeline build logs in Google Cloud Storage, you must create the bucket, and then set up Workload Identity Federation for GKE.

To configure Google Cloud Storage and GKE:

- In Google Cloud Storage, create a bucket for storing Pipeline build logs, or use an existing bucket. For more information, refer to Create a bucket.

  If you are using an existing bucket or container, set the storageObjectNamePrefix key in your values.yaml file to specify a prefix for storing Pipeline build logs. This helps avoid naming conflicts with other applications that use the same bucket or container. For more information, refer to Enable the CloudBees Pluggable Storage service.

- In GKE, you must link the Kubernetes ServiceAccount to IAM. For more information, refer to Link Kubernetes ServiceAccounts to IAM.

  - When linking the Kubernetes ServiceAccount to IAM, you must specify the CloudBees Pluggable Storage service account name that you will use in your CloudBees CI values.yaml file and the Kubernetes namespace for your cluster.

    - pluggable-storage-service is the default name of the CloudBees Pluggable Storage service account defined in the CloudBees CI Helm chart. If you need to modify the default service account name, you can update it via the rbac.pluggableStorageServiceServiceAccountName key in your values.yaml file. For more information, refer to Enable the CloudBees Pluggable Storage service.

    - For the namespace, specify the operations center namespace.

  - When annotating the Kubernetes ServiceAccount, CloudBees recommends that you add the annotations using the rbac.pluggableStorageServiceServiceAccountAnnotations key in the CloudBees CI Helm chart. For more information, refer to Enable the CloudBees Pluggable Storage service.

  - When configuring the IAM roles, the following permissions are required:

    - storage.buckets.get

    - storage.multipartUploads.*

    - storage.objects.create

    - storage.objects.get

    - storage.objects.list

    - iam.serviceAccounts.signBlob

Configure Microsoft Azure Blob Storage and Azure Kubernetes Service

To configure CloudBees CI to store Pipeline build logs in Microsoft Azure Blob Storage, you must create a storage container and configure workload identity for Azure Kubernetes Service (AKS).

To configure Microsoft Azure Blob Storage and AKS:

- In Microsoft Azure, create a storage account or use an existing one. For more information, refer to Create an Azure storage account.

- In Microsoft Azure, create a new container in your storage account for storing Pipeline build logs, or use an existing container. For more information, refer to Create a container.

- Configure workload identity for the CloudBees Pluggable Storage service’s Kubernetes ServiceAccount. For more information, refer to Deploy and configure workload identity on an Azure Kubernetes Service cluster.

  - When linking the Kubernetes ServiceAccount to the federated identity credential, you must specify the CloudBees Pluggable Storage service account name that you will use in your CloudBees CI values.yaml file and the Kubernetes namespace for your cluster.

    - pluggable-storage-service is the default name of the CloudBees Pluggable Storage service account defined in the CloudBees CI Helm chart. If you need to modify the default service account name, you can update it via the rbac.pluggableStorageServiceServiceAccountName key in your values.yaml file. For more information, refer to Enable the CloudBees Pluggable Storage service.

    - For the namespace, specify the operations center namespace.

  - When annotating the Kubernetes ServiceAccount, CloudBees recommends that you add the annotations using the rbac.pluggableStorageServiceServiceAccountAnnotations key in the CloudBees CI Helm chart. For more information, refer to Enable the CloudBees Pluggable Storage service.

  - Assign the following roles to the managed identity:

    - Storage Blob Data Contributor: To read and write blobs and blocks.

    - Storage Blob Delegator: To create Shared Access Signature (SAS) tokens for controllers to upload and download blobs and blocks.

Configure Amazon S3-compatible services

CloudBees Pluggable Storage also supports cloud object storage providers that are fully compatible with the Amazon S3 API.


To configure Amazon S3-compatible services:

- Create a bucket in your Amazon S3-compatible service, or use an existing bucket.

  If you are using an existing bucket or container, set the storageObjectNamePrefix key in your values.yaml file to specify a prefix for storing Pipeline build logs. This helps avoid naming conflicts with other applications that use the same bucket or container. For more information, refer to Enable the CloudBees Pluggable Storage service.

- Create a Kubernetes Secret that contains the static credentials used to authenticate with the cloud object storage provider. For example, issue the following command to create a Kubernetes Secret:

      kubectl create secret generic s3-aws-secret --from-literal=AWS_ACCESS_KEY_ID="<my-aws-access-key-id>" --from-literal=AWS_SECRET_ACCESS_KEY="<my-aws-secret-access-key>"

  If successful, a Kubernetes Secret named s3-aws-secret is created. You will add this Kubernetes Secret to the PluggableStorageService.storageOptionsS3.staticCredentialsSecret key in your values.yaml file in the operations center namespace.

- Record the API endpoint URL for the cloud object storage provider. You will add this URL to the PluggableStorageService.storageOptionsS3.endpointOverride key in your values.yaml file.

Enable the CloudBees Pluggable Storage service

After you have created a bucket or container and configured the managed Kubernetes service, you must enable the CloudBees Pluggable Storage service. CloudBees Pluggable Storage is included with the CloudBees CI Helm chart.

To enable the CloudBees Pluggable Storage service:

- Update your CloudBees CI Helm chart values.yaml file to enable CloudBees Pluggable Storage in the operations center namespace. Refer to CloudBees Pluggable Storage Helm chart values.

- Pass the required values.yaml file to helm install or helm upgrade. For more information, refer to Install CloudBees CI on modern cloud platforms on Kubernetes or Upgrade CloudBees CI on modern cloud platforms.

CloudBees Pluggable Storage Helm chart values

The following example shows how to configure the CloudBees CI Helm chart values.yaml file to enable the CloudBees Pluggable Storage service and specify a cloud object storage provider.

| The CloudBees CI documentation covers only the most relevant Helm chart options and does not list every possible option, including generic Kubernetes pass-through configurations. For advanced configurations, review the comments and default values in the Helm chart. |

| 1 | Set the value to true to enable the CloudBees Pluggable Storage service. |

| 2 | Enter the name of your cloud object storage provider: AMAZON_S3, GOOGLE_CLOUD_STORAGE, or AZURE_BLOB_STORAGE. |

| 3 | Enter the name of your bucket or container. |

| 4 | Optional. Enter a prefix to be added to the names of all objects stored in the bucket. The prefix should always end with a trailing / (slash) character. |

| 5 | Enter the expiration time, in minutes, for the presigned URLs sent to controllers for uploading and downloading files to/from the cloud object storage provider. |

| 6 | Enter the name of the AWS region for the bucket. |

| 7 | Enter the name of a Kubernetes Secret that contains the AWS_ACCESS_KEY_ID, AWS_SECRET_ACCESS_KEY, and optionally AWS_SESSION_TOKEN. This parameter is required for Amazon S3-compatible providers. |

| 8 | Enter the API endpoint URL for the Amazon S3-compatible service. This parameter is required for Amazon S3-compatible providers. |

| 9 | Set the value to true to use path-style access for the cloud object storage API endpoint URL. Set to false to use virtual-hosted-style access. |

| 10 | Set the value to true to restrict network calls to IPv6 only when accessing the Amazon EC2 Instance Metadata Service (IMDS) endpoint and update the AWS_EC2_METADATA_SERVICE_ENDPOINT_MODE environment variable to IPv6. Set to false to restrict network calls to IPv4 only when accessing the Amazon EC2 Instance Metadata Service (IMDS) endpoint. |

| 11 | Enter the endpoint URI for the Microsoft Azure Blob Storage container. This parameter is required for Microsoft Azure Blob Storage. |

| 12 | The number of CloudBees Pluggable Storage service replicas that are running at a single time. |

| 13 | The name of the Kubernetes ServiceAccount that CloudBees Pluggable Storage will run as. |

| 14 | Enter service account annotations to enable applications, such as Workload Identity Federation for GKE. |

The following values can be used to configure CloudBees Pluggable Storage:

| Parameter | Description |
|---|---|
| | Enable the CloudBees Pluggable Storage service. Default: |
| | The cloud object storage provider. Default: |
| | The name of the storage bucket or container to use for Pipeline build logs. Default: |
| PluggableStorageService.storageObjectNamePrefix | Optional. Enter a prefix to be added to the names of all objects stored in the bucket. The prefix should always end with a trailing / (slash) character. |
| | Controls expiration time, in minutes, for the presigned URLs sent to controllers for uploading and downloading files to/from the cloud object storage provider. Default: |
| | The name of the AWS region for the bucket (for example, |
| PluggableStorageService.storageOptionsS3.staticCredentialsSecret | The name of a Kubernetes Secret that contains the keys AWS_ACCESS_KEY_ID, AWS_SECRET_ACCESS_KEY, and optionally AWS_SESSION_TOKEN. |
| PluggableStorageService.storageOptionsS3.endpointOverride | The API endpoint URL for the Amazon S3-compatible service. Default: |
| | Whether to use path-style access for the cloud object storage API endpoint URL, instead of virtual-hosted-style access. Default: |
| | Whether to restrict network calls to IPv6 when accessing the Amazon EC2 Instance Metadata Service (IMDS) endpoint. When set to true, the AWS_EC2_METADATA_SERVICE_ENDPOINT_MODE environment variable is updated to IPv6. When set to false, network calls are restricted to IPv4 only. |
| | The endpoint URI for the Microsoft Azure Blob Storage container (for example, |
| | The number of CloudBees Pluggable Storage service replicas that are running at a single time. Requests are load balanced across all replicas. Adding replicas increases redundancy and enables rolling restarts without CloudBees Pluggable Storage downtime. Default: |
| rbac.pluggableStorageServiceServiceAccountName | The name of the Kubernetes ServiceAccount that CloudBees Pluggable Storage will run as. Default: pluggable-storage-service |
| rbac.pluggableStorageServiceServiceAccountAnnotations | Service account annotations to enable applications, such as Workload Identity Federation for GKE. Default: |

Enable CloudBees Pluggable Storage on managed controllers

Once you have enabled the CloudBees Pluggable Storage service, you must enable CloudBees Pluggable Storage on one or more managed controllers. Once enabled, the managed controllers communicate with the cloud object storage provider via the CloudBees Pluggable Storage service.

Enable CloudBees Pluggable Storage in the UI

To enable CloudBees Pluggable Storage on managed controllers in the UI:

-

Sign on to the operations center as a user with Administer privileges.

-

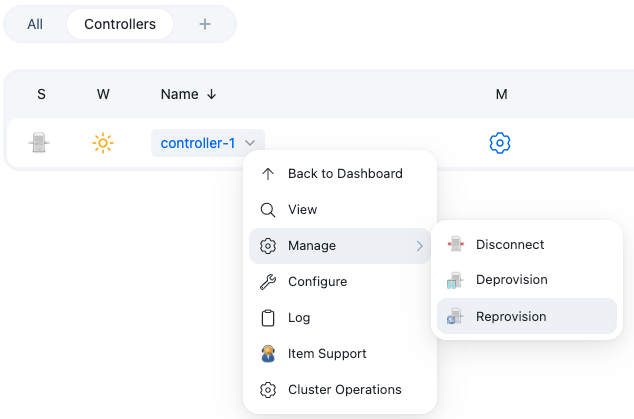

From the operations center dashboard, select the down arrow to the right of the managed controller’s name, and then select .

Figure 4. Reprovision the managed controller

Figure 4. Reprovision the managed controller -

When prompted, select Yes to reprovision the controller.

-

Once the managed controller has been reprovisioned, sign on to the managed controller as a user with Administer privileges.

-

Select in the upper-right corner to navigate to the Manage Jenkins page.

-

Select System.

-

Scroll down to CloudBees Pipeline Explorer, which is enabled by default.

-

Select CloudBees Pluggable Storage for Pipeline build logs.

-

Select Save.

-

Run a Pipeline build. After the build is complete, the Pipeline build log is archived and uploaded to the cloud object storage provider.

-

Navigate to your external storage provider, and verify that there are two objects: a log file and a log metadata file. For more information, refer to Cloud object storage path structure.

-

Navigate back to CloudBees CI and view the log file.

Enable CloudBees Pluggable Storage using Configuration as Code

To enable CloudBees Pluggable Storage on managed controllers using CasC:

- Add the following to your managed controller’s jenkins.yaml file:

      unclassified:
        cloudbeesPipelineExplorer:
          pluggableStorageEnabled: true

- Apply the updated bundle to the managed controller. For more information, refer to Update a CasC bundle.

- Run a Pipeline build. After the build is complete, the Pipeline build log is archived and uploaded to the cloud object storage provider.

- Navigate to your external storage provider, and verify that there are two objects: a log file and a log metadata file. For more information, refer to Cloud object storage path structure.

- Navigate back to CloudBees CI and view the log file.

Cloud object storage path structure

CloudBees Pluggable Storage creates a directory-like hierarchical structure in the cloud object storage provider to store build logs.

A key (the full path to the object) consists of the following components in order:

- The globally configured, optional prefix that you specify using the PluggableStorageService.storageObjectNamePrefix key in your values.yaml file. The prefix should always end with a trailing / (slash) character. If no prefix is specified, this component is omitted, and objects are stored at the bucket root. For more information, refer to Enable the CloudBees Pluggable Storage service.

  For example, if you specify logs/builds/ as the prefix, this component is logs/builds/.

- The controller Kubernetes ServiceAccount namespace, followed by a trailing / (slash) character.

  For example, if the controller Kubernetes ServiceAccount is in the controller-namespace-1 namespace, this component is controller-namespace-1/.

- The controller Kubernetes ServiceAccount name, followed by a trailing / (slash) character.

  For example, if the controller Kubernetes ServiceAccount name is controller-1, this component is controller-1/.

- A unique job ID that is randomly generated and persistent, followed by a trailing / (slash) character.

  For example, if the unique job ID is bXktcGlwbGluZS1qb2I, this component is bXktcGlwbGluZS1qb2I/.

- The build number, followed by a trailing / (slash) character.

  For example, if the build number is 42, this component is 42/.

- The type of file: either log or log-metadata.

In this example, the full object keys are:

logs/builds/controller-namespace-1/controller-1/bXktcGlwbGluZS1qb2I/42/log

logs/builds/controller-namespace-1/controller-1/bXktcGlwbGluZS1qb2I/42/log-metadata
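The key layout described above can be expressed as a small helper, which may be useful when scripting lookups or audits against the bucket. This is an illustrative sketch only: the function name and its parameters are hypothetical and are not part of any CloudBees API. It simply concatenates the components in the documented order.

```python
FILE_TYPES = ("log", "log-metadata")


def build_object_key(
    namespace: str,
    service_account: str,
    job_id: str,
    build_number: int,
    file_type: str,
    prefix: str = "",
) -> str:
    """Assemble an object key from the components listed above, in order."""
    if file_type not in FILE_TYPES:
        raise ValueError(f"file_type must be one of {FILE_TYPES}")
    # The optional prefix should always end with a trailing slash.
    if prefix and not prefix.endswith("/"):
        raise ValueError("prefix should end with a trailing '/'")
    return f"{prefix}{namespace}/{service_account}/{job_id}/{build_number}/{file_type}"


if __name__ == "__main__":
    for file_type in FILE_TYPES:
        print(
            build_object_key(
                "controller-namespace-1",
                "controller-1",
                "bXktcGlwbGluZS1qb2I",
                42,
                file_type,
                prefix="logs/builds/",
            )
        )
```

Run as a script, this prints the two example keys shown above. Omitting the prefix argument yields keys that start at the bucket root.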

Cost considerations

Use of Amazon S3, Google Cloud Storage, and Microsoft Azure Blob Storage incurs additional costs. Refer to the following for more information:

Access patterns

- The CloudBees Pluggable Storage service creates a multipart upload on behalf of the controller, which uploads individual parts in parallel, using PUT requests, to presigned URLs it obtains from the CloudBees Pluggable Storage service. The controller then completes the upload, or aborts it if an error occurs.

- The CloudBees Pluggable Storage service creates presigned URLs for controllers to perform the actual downloads of Pipeline build logs. The controller then downloads the log using a single GET request.
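To make the upload pattern concrete, the following sketch splits a payload into numbered parts and uploads them in parallel. It is not the controller's actual implementation: the part size, the worker count, and the put_part callable (a stand-in for a PUT request to one presigned URL that returns the part's ETag) are all assumptions made for illustration.

```python
from concurrent.futures import ThreadPoolExecutor
from typing import Callable, List, Tuple


def part_ranges(total_size: int, part_size: int) -> List[Tuple[int, int, int]]:
    """Return (part_number, start, end) tuples; numbers are 1-based, end is exclusive."""
    if total_size <= 0 or part_size <= 0:
        raise ValueError("total_size and part_size must be positive")
    ranges = []
    start, number = 0, 1
    while start < total_size:
        end = min(start + part_size, total_size)
        ranges.append((number, start, end))
        start, number = end, number + 1
    return ranges


def upload_parts(
    data: bytes,
    part_size: int,
    put_part: Callable[[int, bytes], str],
    workers: int = 4,
) -> List[Tuple[int, str]]:
    """Upload all parts in parallel and return (part_number, etag) pairs in order.

    put_part is a hypothetical callable that sends one part to its presigned URL.
    If any call raises, the exception propagates so the caller can abort the upload.
    """
    ranges = part_ranges(len(data), part_size)
    with ThreadPoolExecutor(max_workers=workers) as pool:
        etags = list(pool.map(lambda r: put_part(r[0], data[r[1]:r[2]]), ranges))
    return [(r[0], etag) for r, etag in zip(ranges, etags)]
```

The returned pairs are what a caller would need to complete the multipart upload. An exception from any part is the point at which the upload would be aborted instead.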

Unfinished multipart uploads

Some multipart uploads may not be properly completed or aborted (for example, due to a crash of the CloudBees CI controller process). Multipart uploads that are not completed or aborted continue to incur storage costs. Refer to Discovering and Deleting Incomplete Multipart Uploads to Lower Amazon S3 Costs in the AWS blog for tips to prevent this.
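For Amazon S3, one common mitigation is a bucket lifecycle rule that aborts incomplete multipart uploads after a set number of days. The sketch below generates such a lifecycle configuration as JSON. The rule ID, the 7-day threshold, and the logs/builds/ prefix are example values chosen for illustration, not CloudBees recommendations.

```python
import json


def abort_incomplete_uploads_rule(days: int, prefix: str = "") -> dict:
    """Build an S3 lifecycle configuration that aborts stale multipart uploads."""
    if days < 1:
        raise ValueError("days must be at least 1")
    return {
        "Rules": [
            {
                "ID": "abort-incomplete-multipart-uploads",  # hypothetical rule name
                "Status": "Enabled",
                # An empty prefix applies the rule to the whole bucket.
                "Filter": {"Prefix": prefix},
                "AbortIncompleteMultipartUpload": {"DaysAfterInitiation": days},
            }
        ]
    }


if __name__ == "__main__":
    # Example values only: adjust the threshold and prefix to your environment.
    print(json.dumps(abort_incomplete_uploads_rule(7, "logs/builds/"), indent=2))
```

The printed document has the shape accepted by the aws s3api put-bucket-lifecycle-configuration command through its --lifecycle-configuration option. Other providers offer comparable lifecycle settings with different syntax.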